I asked this question as part of the Efficient way to plot data on an irregular grid question, but the general feedback was to split the original question into more manageable chunks. Hence, this new question.

I work with satellite data organized on an irregular two-dimensional grid whose dimensions are scanline (along-track dimension, i.e. Y axis) and ground pixel (across-track dimension, i.e. X axis). The latitude and longitude of each pixel centre are stored in auxiliary coordinate variables, as are the coordinate pairs of the four pixel corners (latitude and longitude are given on the WGS84 reference ellipsoid).

Let's build a toy data set consisting of a 12x10, potentially irregular, grid and associated surface temperature measurements.

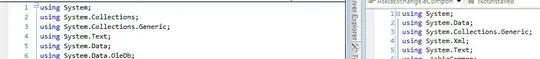

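```r
library(pracma)   # for the meshgrid() function
library(ggplot2)

num_sl <- 12  # number of scanlines (Y axis)
num_gp <- 10  # number of ground pixels (X axis)

# meshgrid(x, y) returns a list of two num_sl x num_gp matrices
l <- meshgrid(seq(from = -20, to = 20, length.out = num_gp),
              seq(from = 30, to = 60, length.out = num_sl))

# Skew the grid slightly so that it is not rectilinear
lon <- l[[1]] + l[[2]]/10
lat <- l[[2]] + l[[1]]/10

# Temperature decreasing along track, plus some uniform noise
data <- matrix(seq(from = 30, to = 0, length.out = num_sl*num_gp),
               byrow = TRUE, nrow = num_sl, ncol = num_gp) +
  matrix(runif(num_gp*num_sl)*6, nrow = num_sl, ncol = num_gp)

# Flatten the three matrices (column-major order) into a data frame
df <- data.frame(lat = as.vector(lat), lon = as.vector(lon), temp = as.vector(data))
```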

The lon and lat matrices contain the pixel centre coordinates as provided in the original product I'm working with, stored as two-dimensional matrices whose axes are ground_pixel (X axis) and scanline (Y axis). The data matrix, which has the same dimensions, contains my measurements. I then flatten the three matrices and store them in a data frame.
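Because R stores matrices in column-major order, `as.vector()` walks down each column, i.e. it lists all scanlines of the first ground pixel before moving to the next one. A minimal sketch on a hypothetical 3x2 matrix, showing how `row()` and `col()` can keep the scanline and ground-pixel indices next to the flattened values (handy later, when each centre has to be matched to its corners):

```r
# Toy 3 x 2 grid: rows = scanlines (Y), columns = ground pixels (X)
m <- matrix(1:6, nrow = 3, ncol = 2)
as.vector(m)  # 1 2 3 4 5 6: column-major, ground pixel by ground pixel

# row() and col() return index matrices that flatten in the same order
idx <- data.frame(scanline     = as.vector(row(m)),
                  ground_pixel = as.vector(col(m)),
                  value        = as.vector(m))
idx
```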

I would like to plot the ground pixels (as quadrilaterals) on a map, filled according to the temperature measurement.

Using tiles I get:

```r
# borders() requires the 'maps' package to be installed
ggplot(df, aes(y = lat, x = lon, fill = temp)) +
  geom_tile(width = 2, height = 2) +
  geom_point(size = .1) +
  borders('world', colour = 'gray50', size = .2) +
  coord_quickmap(xlim = range(lon), ylim = range(lat)) +
  scale_fill_distiller(palette = 'Spectral') +
  theme_minimal()
```

But that's not what I am after. I could play with width and height to make the tiles "touch" each other, but since tiles are always axis-aligned rectangles, that wouldn't even come close to my actual goal, which is to plot the actual projected ground pixels on the map.

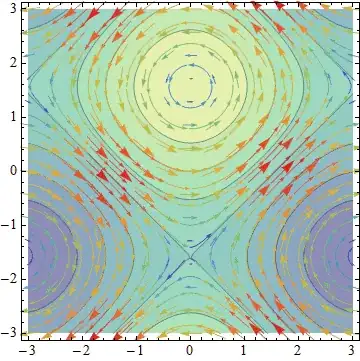

Python's xarray can, for example, automatically infer the pixel boundaries from the pixel centre coordinates.
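For reference, a minimal R sketch of the midpoint-and-extrapolate idea that, as far as I understand, xarray's plotting code applies (treat the correspondence as an assumption, not an exact port): along each axis, inner edges are the midpoints between neighbouring centres, and the outermost edges are extrapolated by half a step. Applying it first along ground pixels and then along scanlines turns a `ny x nx` centre matrix into a `(ny + 1) x (nx + 1)` corner grid. `infer_breaks_cols()` and `infer_corners()` are hypothetical helper names. The arithmetic is planar in degrees (not geodesic on WGS84), and longitudes crossing ±180° would need unwrapping first.

```r
# Infer cell edges along the columns of a matrix of centre coordinates:
# midpoints between neighbouring centres, plus half a step of linear
# extrapolation at both ends. Returns one more column than the input.
infer_breaks_cols <- function(m) {
  stopifnot(is.matrix(m), ncol(m) >= 2)
  half  <- 0.5 * (m[, -1, drop = FALSE] - m[, -ncol(m), drop = FALSE])
  first <- m[, 1] - half[, 1]
  last  <- m[, ncol(m)] + half[, ncol(half)]
  inner <- m[, -ncol(m), drop = FALSE] + half
  cbind(first, inner, last, deparse.level = 0)
}

# Corner grid of a (ny x nx) centre matrix: apply along ground pixels
# (columns), then along scanlines (rows). Result is (ny + 1) x (nx + 1).
infer_corners <- function(m) {
  t(infer_breaks_cols(t(infer_breaks_cols(m))))
}

# Toy grid equivalent to the pracma::meshgrid() example, in base R
x <- seq(-20, 20, length.out = 10)
y <- seq(30, 60, length.out = 12)
X <- matrix(x, nrow = 12, ncol = 10, byrow = TRUE)
Y <- matrix(y, nrow = 12, ncol = 10)
lon <- X + Y / 10
lat <- Y + X / 10

lon_c <- infer_corners(lon)
lat_c <- infer_corners(lat)
dim(lon_c)  # 13 11
```

Every inner corner ends up as the mean of its four surrounding centres, so the result is exact for a grid that is affine in the indices (like the toy one) and an approximation otherwise.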

Question

Is there a way to achieve the same results in R, that is: having the pixel boundaries automatically inferred from the pixel centres, and plotting the pixels as filled polygons on a map? Maybe using the sf package?
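One possible route that does not need sf at all is ggplot2's `geom_polygon()`, which draws one filled polygon per `group`. A minimal sketch, assuming a `(ny + 1) x (nx + 1)` corner grid is already available (here a hypothetical skewed toy grid; in practice it would be inferred from the centres or read from the product). `pixels_to_polygons()` is a hypothetical helper that emits the four vertices of pixel `(i, j)` in ring order `(i, j) -> (i, j+1) -> (i+1, j+1) -> (i+1, j)`:

```r
# Hypothetical corner grids for a 3 x 2 pixel grid, skewed so that the
# cells are general quadrilaterals rather than rectangles
ny <- 3; nx <- 2
xc <- matrix(seq(-3, 3, length.out = nx + 1), ny + 1, nx + 1, byrow = TRUE)
yc <- matrix(seq(40, 46, length.out = ny + 1), ny + 1, nx + 1)
lon_c <- xc + yc / 10
lat_c <- yc + xc / 10
temp  <- matrix(seq_len(ny * nx), ny, nx)   # one value per pixel

# One row per polygon vertex; pixels are numbered in column-major order
pixels_to_polygons <- function(lon_c, lat_c, values) {
  stopifnot(identical(dim(lon_c), dim(values) + 1L),
            identical(dim(lat_c), dim(lon_c)))
  i  <- as.vector(row(values))
  j  <- as.vector(col(values))
  vi <- rep(i, each = 4) + c(0L, 0L, 1L, 1L)   # offsets recycle per pixel
  vj <- rep(j, each = 4) + c(0L, 1L, 1L, 0L)
  data.frame(id   = rep(seq_along(i), each = 4),
             lon  = lon_c[cbind(vi, vj)],
             lat  = lat_c[cbind(vi, vj)],
             temp = rep(as.vector(values), each = 4))
}

poly <- pixels_to_polygons(lon_c, lat_c, temp)

if (requireNamespace("ggplot2", quietly = TRUE)) {
  library(ggplot2)
  p <- ggplot(poly, aes(x = lon, y = lat, group = id, fill = temp)) +
    geom_polygon(colour = "grey30") +   # ggplot closes each ring itself
    coord_quickmap() +
    scale_fill_distiller(palette = "Spectral") +
    theme_minimal()
  print(p)
}
```

If sf objects are preferred (e.g. for `geom_sf()`), each set of four vertices could instead be closed by repeating the first vertex and passed to `sf::st_polygon()`; the vertex ordering logic stays the same.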

I can see it done in the answers to this question, but the answer that uses sf is a bit unclear to me: it deals with different projections and potentially regular grids, whereas in my case I suppose I don't have to re-project my data and, furthermore, my data are not on a regular grid.

If this is not possible, I suppose I can resort to using the pixel boundary information in my products, but maybe that's a topic for another question, should this one prove not easy to tackle.
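Using the product's own corner coordinates avoids any inference. A minimal sketch, assuming (hypothetically) that the corner variables have been read into R as arrays of dimension `c(num_sl, num_gp, 4)`, with the last dimension holding the four corners in ring order. Both the dimension order and the corner order are assumptions to check against the product documentation and `dim()`, since readers may return the corner dimension first. `bounds_to_polygons()` is a hypothetical helper producing one row per vertex:

```r
# lon_b, lat_b: hypothetical arrays, dim c(num_sl, num_gp, 4), corners in
# ring order along the last dimension; values: num_sl x num_gp matrix
bounds_to_polygons <- function(lon_b, lat_b, values) {
  stopifnot(identical(dim(lon_b), dim(lat_b)),
            identical(dim(lon_b)[1:2], dim(values)),
            dim(lon_b)[3] == 4)
  n <- length(values)
  # Reshape to n x 4: row k = pixel k in column-major order, one column per corner
  lon_m <- matrix(lon_b, nrow = n, ncol = 4)
  lat_m <- matrix(lat_b, nrow = n, ncol = 4)
  data.frame(id   = rep(seq_len(n), each = 4),
             lon  = as.vector(t(lon_m)),   # corners of pixel 1, then pixel 2, ...
             lat  = as.vector(t(lat_m)),
             temp = rep(as.vector(values), each = 4))
}

# Toy 2 x 2 grid of unit squares; corner order: lower-left, lower-right,
# upper-right, upper-left
ny <- 2; nx <- 2
i <- row(matrix(0, ny, nx))
j <- col(matrix(0, ny, nx))
lon_b <- array(c(j - 1, j, j, j - 1), dim = c(ny, nx, 4))
lat_b <- array(c(i - 1, i - 1, i, i), dim = c(ny, nx, 4))
temp  <- matrix(c(10, 20, 30, 40), ny, nx)

poly <- bounds_to_polygons(lon_b, lat_b, temp)
```

The resulting data frame can be drawn with ggplot2's `geom_polygon(aes(x = lon, y = lat, group = id, fill = temp))`.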