I'm looking for a faster solution to the following problem: a DataFrame has two columns that contain a few NaN values, and I need to replace these NaNs with values from a second DataFrame.

Below I'll share the data and code used to implement my approach. Let me explain the scenario: merged_df is the original DataFrame, and some of its columns have rows with NaN values:

As you can see from the image above, columns day_of_week and holiday_flg are of particular interest. I would like to fill the NaN values of these columns by looking into a second DataFrame called date_info_df, which looks like this:

By using the values from the visit_date column in merged_df, it is possible to search the second DataFrame on calendar_date and find the matching rows. Each match provides the values for day_of_week and holiday_flg from the second DataFrame.
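To make the lookup concrete, here is a minimal sketch of the same match expressed without a per-row function. It is not the approach measured below, and I have not timed it on the full data. It assumes that calendar_date contains unique dates and that both date columns are already datetimes; `left`, `right` and `lookup` are hypothetical names standing in for trimmed versions of merged_df and date_info_df.

```python
import numpy as np
import pandas as pd

# Hypothetical toy stand-ins for merged_df and date_info_df (relevant columns only)
left = pd.DataFrame({
    'visit_date': pd.to_datetime(['2017-05-23', '2017-05-25', '2017-05-27']),
    'day_of_week': ['Tuesday', np.nan, np.nan],
    'holiday_flg': [0, np.nan, np.nan],
})
right = pd.DataFrame({
    'calendar_date': pd.to_datetime(['2017-05-23', '2017-05-25', '2017-05-27']),
    'day_of_week': ['Tuesday', 'Thursday', 'Saturday'],
    'holiday_flg': [0, 0, 1],
})

# Index the lookup table by date so each column acts as a date -> value mapping.
# Assumption: calendar_date has no duplicate dates.
lookup = right.set_index('calendar_date')

for col in ['day_of_week', 'holiday_flg']:
    # map() looks up the value for every visit_date;
    # fillna() only replaces the entries that are NaN in the original column
    left[col] = left[col].fillna(left['visit_date'].map(lookup[col]))

# The NaNs forced holiday_flg to float; convert back once every value is filled
left['holiday_flg'] = left['holiday_flg'].astype(int)
```

The idea is that Series.map performs the date match for the whole column at once, while fillna keeps any value that was already present.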

The end result for this exercise is a DataFrame that looks like this:

You'll notice the approach I'm using relies on apply() to execute a custom function on every row of merged_df:

- For every row, search for NaN values in day_of_week and holiday_flg;
- When a NaN is found in either or both of these columns, use the date from that row's visit_date to find an equivalent match in the second DataFrame, specifically the date_info_df['calendar_date'] column;
- After a successful match, the value from date_info_df['day_of_week'] must be copied into merged_df['day_of_week'] and the value from date_info_df['holiday_flg'] must also be copied into merged_df['holiday_flg'].

Here is the working source code:

    import math
    import pandas as pd
    import numpy as np
    from IPython.display import display

    ### Data for df
    data = { 'air_store_id': [ 'air_a1', 'air_a2', 'air_a3', 'air_a4' ],
             'area_name': [ 'Tokyo', np.nan, np.nan, np.nan ],
             'genre_name': [ 'Japanese', np.nan, np.nan, np.nan ],
             'hpg_store_id': [ 'hpg_h1', np.nan, np.nan, np.nan ],
             'latitude': [ 1234, np.nan, np.nan, np.nan ],
             'longitude': [ 5678, np.nan, np.nan, np.nan ],
             'reserve_datetime': [ '2017-04-22 11:00:00', np.nan, np.nan, np.nan ],
             'reserve_visitors': [ 25, 35, 45, np.nan ],
             'visit_datetime': [ '2017-05-23 12:00:00', np.nan, np.nan, np.nan ],
             'visit_date': [ '2017-05-23' , '2017-05-24', '2017-05-25', '2017-05-27' ],
             'day_of_week': [ 'Tuesday', 'Wednesday', np.nan, np.nan ],
             'holiday_flg': [ 0, np.nan, np.nan, np.nan ]
           }

    merged_df = pd.DataFrame(data)
    display(merged_df)

    ### Data for date_info_df
    data = { 'calendar_date': [ '2017-05-23', '2017-05-24', '2017-05-25', '2017-05-26', '2017-05-27', '2017-05-28' ],
             'day_of_week': [ 'Tuesday', 'Wednesday', 'Thursday', 'Friday', 'Saturday', 'Sunday' ],
             'holiday_flg': [ 0, 0, 0, 0, 1, 1 ]
           }

    date_info_df = pd.DataFrame(data)
    date_info_df['calendar_date'] = pd.to_datetime(date_info_df['calendar_date'])
    display(date_info_df)

    # Fix the NaN values in day_of_week and holiday_flg by inspecting data from another dataframe (date_info_df)
    def fix_weekday_and_holiday(row):
        weekday = row['day_of_week']
        holiday = row['holiday_flg']

        # search dataframe date_info_df for the appropriate value when weekday is NaN
        if (type(weekday) == float and math.isnan(weekday)):
            search_date = row['visit_date']
            #print(' --> weekday search_date=', search_date, 'type=', type(search_date))
            indexes = date_info_df.index[date_info_df['calendar_date'] == search_date].tolist()
            idx = indexes[0]
            weekday = date_info_df.at[idx,'day_of_week']
            #print(' --> weekday search_date=', search_date, 'is', weekday)
            row['day_of_week'] = weekday

        # search dataframe date_info_df for the appropriate value when holiday is NaN
        if (type(holiday) == float and math.isnan(holiday)):
            search_date = row['visit_date']
            #print(' --> holiday search_date=', search_date, 'type=', type(search_date))
            indexes = date_info_df.index[date_info_df['calendar_date'] == search_date].tolist()
            idx = indexes[0]
            holiday = date_info_df.at[idx,'holiday_flg']
            #print(' --> holiday search_date=', search_date, 'is', holiday)
            row['holiday_flg'] = int(holiday)

        return row

    # send every row to fix_weekday_and_holiday
    merged_df = merged_df.apply(fix_weekday_and_holiday, axis=1)

    # Convert data from float to int (to remove decimal places)
    merged_df['holiday_flg'] = merged_df['holiday_flg'].astype(int)
    display(merged_df)

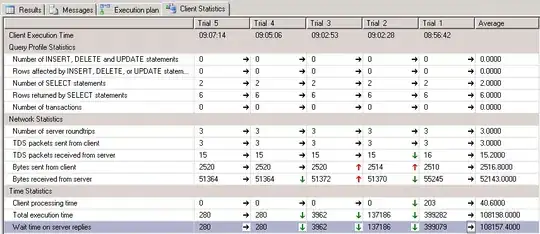

I did a few measurements so you can understand the struggle:

- On a DataFrame with 6 rows, apply() takes 3.01 ms;
- On a DataFrame with ~250000 rows, apply() takes 2min 51s;
- On a DataFrame with ~1215000 rows, apply() takes 4min 2s.
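For anyone who wants to time the same kind of call outside a notebook, here is a minimal sketch of a timing helper. The helper `time_call` and the toy DataFrame `df` are hypothetical and only illustrate the measurement; they are not the code that produced the figures above.

```python
import time

import numpy as np
import pandas as pd

def time_call(func, *args, **kwargs):
    """Call func once and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    return result, time.perf_counter() - start

# Hypothetical toy frame, used only to show how a row-wise apply() can be timed
df = pd.DataFrame({'x': np.arange(1000)})

# Identity function applied row by row, mirroring apply(..., axis=1) in the question
result, elapsed = time_call(df.apply, lambda row: row, axis=1)
print(f'apply() took {elapsed:.4f} s on {len(df)} rows')
```

A single call is noisy, so repeating the measurement a few times and keeping the best value gives a steadier number.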

How do I improve the performance of this task?