This is the process I go through to render the scene:

- Bind the 4x MSAA G-buffer: four color attachments (Position, Normal, Color, and Unlit Color, which holds only the skybox) plus a depth texture.

- Draw the skybox.

- Draw the geometry.

- Blit all color attachments and the depth attachment to a single-sample FBO.

- Apply lighting. I use the depth texture to decide whether a pixel should be lit: it is lit only if its depth value is less than 1.

- Render the quad.
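To make the blit step concrete, here is a small CPU model of what it does to one pixel of one attachment. It assumes the driver resolves color attachments by averaging the four samples (common behaviour, but the exact filter is implementation-defined) and that the position and normal attachments are cleared to zero. Under those assumptions, a pixel that is half surface and half skybox ends up with a position halfway between the surface and the view-space origin, and a normal of half length. Neither belongs to any real surface. The helper names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Stand-in for GLSL's vec3.
struct Vec3 { float x, y, z; };

constexpr std::size_t kSamples = 4;  // 4x MSAA, as in the post
using SampleSet = std::array<Vec3, kSamples>;

// Models the blit's color resolve for one pixel of one attachment by
// averaging its samples (assumed filter; the driver decides in practice).
Vec3 resolve(const SampleSet& samples) {
    Vec3 sum{0.0f, 0.0f, 0.0f};
    for (const Vec3& s : samples) {
        sum.x += s.x;
        sum.y += s.y;
        sum.z += s.z;
    }
    const float inv = 1.0f / static_cast<float>(kSamples);
    return {sum.x * inv, sum.y * inv, sum.z * inv};
}

// Hypothetical helper: builds one attachment's samples for an edge pixel.
// The first `covered` samples hold the surface value, the rest the clear value.
SampleSet edgeSamples(Vec3 surface, Vec3 clear, std::size_t covered) {
    assert(covered <= kSamples);
    SampleSet out{};
    for (std::size_t i = 0; i < kSamples; ++i) {
        out[i] = (i < covered) ? surface : clear;
    }
    return out;
}
```

For example, `resolve(edgeSamples({0.0f, 0.0f, -10.0f}, {0.0f, 0.0f, 0.0f}, 2))` gives a position at z = -5. The lighting pass then treats that value as if it were a real surface point.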

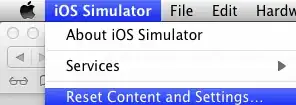

And this is what is happening:

As you can see, I get white and black artefacts along the edges instead of a smooth edge. Note that if I remove the lighting and just render the texture unlit, the artefacts disappear and the edges are smoothed correctly.
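That observation fits how a resolve works. Averaging colors is linear, so showing the resolved texture unlit looks the same as showing each sample and averaging. The lighting maths is not linear in position and normal, so lighting an averaged G-buffer pixel is not the same as averaging per-sample lighting. The sketch below ports the shader's ambient + diffuse + attenuation terms for a single white light to C++ and compares both orders on an edge pixel where two samples hit a near surface and two hit a far wall. The scene values and the 0.7/1.8 attenuation constants are made up for illustration.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
float length(Vec3 v) { return std::sqrt(dot(v, v)); }

// normalize() of a zero vector is undefined in GLSL as well.
Vec3 normalize(Vec3 v) {
    assert(length(v) > 0.0f);
    return v * (1.0f / length(v));
}

// Mirrors the post's Light struct; Color is fixed to white for brevity.
struct Light { Vec3 pos; float linear, quadratic, radius; };

// One G-buffer sample: view-space position, normal, grey albedo (scalar).
struct Sample { Vec3 pos; Vec3 normal; float albedo; };

// Same maths as the shader, for one light and without SSAO.
float shade(const Sample& s, const Light& l) {
    float result = 0.3f * s.albedo;  // hard-coded ambient term
    const float dist = length(l.pos - s.pos);
    if (dist < l.radius) {
        const Vec3 lightDir = normalize(l.pos - s.pos);
        const float diffuse = std::fmax(dot(normalize(s.normal), lightDir), 0.0f) * s.albedo;
        result += diffuse / (1.0f + l.linear * dist + l.quadratic * dist * dist);
    }
    return result;
}

using Pixel = std::array<Sample, 4>;

// The post's order: average the G-buffer samples first, then light once.
float resolveThenLight(const Pixel& px, const Light& l) {
    Sample avg{{0.0f, 0.0f, 0.0f}, {0.0f, 0.0f, 0.0f}, 0.0f};
    for (const Sample& s : px) {
        avg.pos = avg.pos + s.pos * 0.25f;
        avg.normal = avg.normal + s.normal * 0.25f;
        avg.albedo += s.albedo * 0.25f;
    }
    return shade(avg, l);
}

// Per-sample order: light every sample, then average the results.
float lightThenResolve(const Pixel& px, const Light& l) {
    float sum = 0.0f;
    for (const Sample& s : px) sum += shade(s, l);
    return sum / 4.0f;
}
```

With a near surface at z = -2, a far wall at z = -20 (both facing the camera), and a light at z = -10, the averaged position lands at z = -11, one unit from the light. Lighting that point gives roughly 0.59, while each real sample is about 0.30, so resolving first produces a bright halo. Other arrangements push the error the other way and darken the edge instead.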

Here is my lighting shader. It has SSAO implemented, but that does not seem to affect this.

```glsl
#version 410 core

in vec2 Texcoord;
out vec4 outColor;

uniform sampler2D texFramebuffer;  // unused in this shader
uniform sampler2D ssaoTex;
uniform sampler2D gPosition;       // resolved (single-sample) G-buffer attachments
uniform sampler2D gNormal;
uniform sampler2D gAlbedo;
uniform sampler2D gAlbedoUnlit;    // skybox color only
uniform sampler2D gDepth;

uniform mat4 View;

struct Light {
    vec3 Pos;        // transformed by View below
    vec3 Color;
    float Linear;
    float Quadratic;
    float Radius;
};

const int MAX_LIGHTS = 32;
uniform Light lights[MAX_LIGHTS];

uniform vec3 viewPos;  // currently unused (no specular term)
uniform bool SSAO;

void main()
{
    vec3 color      = texture(gAlbedo, Texcoord).rgb;
    vec3 colorUnlit = texture(gAlbedoUnlit, Texcoord).rgb;
    vec3 pos        = texture(gPosition, Texcoord).rgb;
    // Normalize only the xyz part; normalizing the vec4 would fold alpha into the length.
    vec3 norm       = normalize(texture(gNormal, Texcoord).rgb);
    float depth     = texture(gDepth, Texcoord).r;
    float ssaoValue = texture(ssaoTex, Texcoord).r;

    // Hard-coded ambient term, optionally occluded by SSAO.
    float ao = SSAO ? ssaoValue : 1.0;
    vec3 lighting = 0.3 * color * ao;

    // Lights are moved into view space with View, so this assumes
    // gPosition stores view-space positions.
    for (int i = 0; i < MAX_LIGHTS; ++i)
    {
        vec3 lightPos = (View * vec4(lights[i].Pos, 1.0)).xyz;
        float dist = length(lightPos - pos);
        if (dist < lights[i].Radius)
        {
            // Diffuse term with linear + quadratic attenuation.
            vec3 lightDir = normalize(lightPos - pos);
            vec3 diffuse = max(dot(norm, lightDir), 0.0) * color * lights[i].Color;
            float attenuation = 1.0 / (1.0 + lights[i].Linear * dist
                                           + lights[i].Quadratic * dist * dist);
            lighting += diffuse * attenuation;
        }
    }

    // Depth 1.0 means only the skybox was drawn here: output it unlit.
    if (depth >= 1.0)
    {
        outColor = vec4(colorUnlit, 1.0);
    }
    else
    {
        outColor = vec4(lighting, 1.0);
    }
}
```

The final if statement checks the depth texture. If geometry was drawn at this pixel (depth less than 1), the lit color is output; otherwise only the skybox is there, so the unlit skybox color is drawn. This keeps lighting off the skybox.
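Because that test runs once per pixel on resolved data, a pixel that is part skybox and part geometry has to go entirely one way. The sketch below compares that with running the same test per sample. It assumes the geometry pass leaves gAlbedoUnlit black and the skybox pass leaves gAlbedo black (so lighting adds nothing on sky samples). It also treats each geometry sample's lit color as a given number and averages lit colors linearly, so only the effect of the depth test shows. All values are hypothetical.

```cpp
#include <array>
#include <cassert>

// RGB color, as written to outColor.rgb.
struct Rgb { float r, g, b; };

Rgb operator+(Rgb a, Rgb b) { return {a.r + b.r, a.g + b.g, a.b + b.b}; }
Rgb operator*(Rgb a, float s) { return {a.r * s, a.g * s, a.b * s}; }

// One sample of an edge pixel.
struct ShadedSample {
    float depth;  // 1.0 where only the skybox was drawn
    Rgb unlit;    // gAlbedoUnlit: sky color, black where geometry was drawn (assumed)
    Rgb lit;      // lighting result: black for sky samples (assumed)
};

using Pixel = std::array<ShadedSample, 4>;

// The shader's final if applied per sample, then averaged.
Rgb compositeThenResolve(const Pixel& px) {
    Rgb sum{0.0f, 0.0f, 0.0f};
    for (const ShadedSample& s : px) {
        sum = sum + ((s.depth >= 1.0f) ? s.unlit : s.lit);
    }
    return sum * (1.0f / static_cast<float>(px.size()));
}

// The post's order: the blit averages the color attachments, then a single
// depth value (whatever the depth blit produced) picks one branch per pixel.
Rgb resolveThenComposite(const Pixel& px, float resolvedDepth) {
    assert(resolvedDepth >= 0.0f && resolvedDepth <= 1.0f);
    Rgb unlit{0.0f, 0.0f, 0.0f};
    Rgb lit{0.0f, 0.0f, 0.0f};
    for (const ShadedSample& s : px) {
        unlit = unlit + s.unlit;
        lit = lit + s.lit;
    }
    const float inv = 1.0f / static_cast<float>(px.size());
    return (resolvedDepth >= 1.0f) ? unlit * inv : lit * inv;
}
```

With two geometry samples (lit color 0.6 grey) and two sky samples (sky color (0.2, 0.4, 1.0)), the per-sample blend is (0.4, 0.5, 0.8). The per-pixel test gives either (0.3, 0.3, 0.3) or (0.1, 0.2, 0.5), darker in every channel whether the surviving depth comes from a geometry sample, a sky sample, or an average.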

I have spent a few days trying to work this out. I have tried deciding whether a pixel should be lit by comparing normals, positions, and depth instead, and switching the attachments to higher-precision formats (e.g. RGB16F instead of RGB8), but I can't figure out what is causing it. Doing the lighting per sample (using texelFetch) would be far too expensive.
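One commonly used way to keep per-sample lighting affordable (a standard deferred-MSAA technique, not something tried in the post) is to keep the G-buffer multisampled and classify each pixel by comparing its samples. The lighting loop then runs per sample only on edge pixels, reading each sample with `texelFetch` on a `sampler2DMS`, and once per pixel everywhere else. With 4x MSAA the extra cost then scales with the number of edge pixels instead of quadrupling the whole screen. The classification is sketched on the CPU below; the thresholds and names are hypothetical and would need tuning.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

// The G-buffer values the classifier looks at for one sample.
struct GSample {
    float depth;       // 1.0 where only the skybox was drawn
    float nx, ny, nz;  // unit normal; zero where only the skybox was drawn
};

using Pixel = std::array<GSample, 4>;

// Hypothetical thresholds.
constexpr float kDepthEpsilon = 0.001f;
constexpr float kMinNormalCos = 0.95f;

// True if any sample disagrees with sample 0 in depth, or (when both are
// geometry) in normal direction, i.e. the pixel straddles an edge.
bool isEdgePixel(const Pixel& px) {
    const GSample& ref = px[0];
    for (std::size_t i = 1; i < px.size(); ++i) {
        const GSample& s = px[i];
        if (std::fabs(s.depth - ref.depth) > kDepthEpsilon) return true;
        const bool bothGeometry = ref.depth < 1.0f && s.depth < 1.0f;
        const float cosAngle = ref.nx * s.nx + ref.ny * s.ny + ref.nz * s.nz;
        if (bothGeometry && cosAngle < kMinNormalCos) return true;
    }
    return false;
}

// Lighting evaluations per frame: 4 per edge pixel, 1 per other pixel.
long lightingEvaluations(long pixels, long edgePixels) {
    assert(edgePixels >= 0 && edgePixels <= pixels);
    return (pixels - edgePixels) + 4 * edgePixels;
}
```

For example, if 5% of a 1920x1080 frame were edge pixels, `lightingEvaluations` would give about 1.15 evaluations per pixel instead of 4.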

Any ideas?