I asked this question a few months ago, but I'm asking again to be 100% sure, so I'll describe my issue here:

I have a streaming topic that I'm aggregating every minute with a sliding window in Spark Structured Streaming.

For example, with a 30-minute window sliding every minute, I'm arguably already computing a kind of moving statistic: each result is not based on a single minute in isolation but also takes the previous 29 minutes into account, and the aggregation window then moves forward by one minute.
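To make the sliding-window semantics concrete, here is a plain-Python sketch (not Spark code) of what a 30-minute window sliding every minute computes: each event falls into 30 overlapping `[start, start + 30)` windows, and each window reports its own average. Timestamps are simplified to integer minutes, and `windows_for` / `sliding_averages` are hypothetical names for illustration; in Spark the equivalent grouping is `window(timeColumn, "30 minutes", "1 minute")`.

```python
from collections import defaultdict

WINDOW = 30  # window length in minutes (as in the question)
SLIDE = 1    # slide interval in minutes


def windows_for(minute):
    """Start minutes of every [start, start + WINDOW) window containing `minute`."""
    # The latest window starts at the slide boundary at or before the event;
    # earlier windows start every SLIDE minutes while they still cover it.
    last_start = minute - (minute % SLIDE)
    return list(range(last_start, minute - WINDOW, -SLIDE))


def sliding_averages(events):
    """events: iterable of (minute, value). Returns {window_start: average}."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for minute, value in events:
        # Each event contributes to every overlapping window it belongs to.
        for start in windows_for(minute):
            sums[start] += value
            counts[start] += 1
    return {start: sums[start] / counts[start] for start in sorted(sums)}
```

With a 1-minute slide, an event at minute 45 lands in the 30 windows starting at minutes 16 through 45, which is why consecutive windows overlap by 29 minutes.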

But is there a way to compute the following in real time with Spark Structured Streaming?

Change over time:

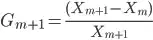

Rate of change:

Growth / decay:
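Assuming these three quantities follow their usual textbook definitions (the original formula images are not all reproduced here), and writing $\bar{x}_t$ for the window average emitted at minute $t$ and $\Delta t$ for the slide interval (1 minute here), they would be:

$$
\begin{aligned}
\text{change}_t &= \bar{x}_t - \bar{x}_{t-1} \\
\text{rate}_t &= \frac{\bar{x}_t - \bar{x}_{t-1}}{\Delta t} \\
\text{growth}_t &= \frac{\bar{x}_t - \bar{x}_{t-1}}{\bar{x}_{t-1}}
\end{aligned}
$$

A positive $\text{growth}_t$ is growth and a negative one is decay; multiplying by 100 gives a percentage.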

For example, every minute I compute an average over the current window. With these formulas, I would be able to compare how that average evolves from one minute to the next in my sliding window:

| Window      | Average |
|-------------|---------|
| 12:30-13:00 | 100     |
| 12:31-13:01 | 103     |
| 12:32-13:02 | 106     |
| 12:33-13:03 | 111     |

In this example, I would like to add a new feature: the growth/decay of my average, computed in real time. At instant t, I want to compute the growth of my average relative to its value at instant t-1.
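Here is a plain-Python sketch (again, not Spark code) of the per-window computation I'm after, applied to the table above. The only thing carried from one window to the next is the previous average (`prev`); as far as I know, that is the piece of state a Spark stateful operator such as `flatMapGroupsWithState` (Scala/Java) or `applyInPandasWithState` (PySpark 3.4+) would have to keep between triggers, since non-time-based window functions like `lag` are rejected on streaming DataFrames. The sketch assumes window averages arrive in window order and are final (no late updates); `with_growth` and `WindowStats` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional, Tuple


@dataclass
class WindowStats:
    window: str
    average: float
    change: Optional[float]        # x_t - x_{t-1}
    rate_per_min: Optional[float]  # change / slide interval (minutes)
    growth: Optional[float]        # (x_t - x_{t-1}) / x_{t-1}


def with_growth(rows: Iterable[Tuple[str, float]],
                slide_minutes: float = 1.0) -> Iterator[WindowStats]:
    """Attach change, rate of change and growth to consecutive window averages."""
    prev: Optional[float] = None  # the only state kept between windows
    for window, avg in rows:
        if prev is None:
            # First window: nothing to compare against yet.
            yield WindowStats(window, avg, None, None, None)
        else:
            change = avg - prev
            growth = change / prev if prev != 0 else None  # avoid division by zero
            yield WindowStats(window, avg, change, change / slide_minutes, growth)
        prev = avg


rows = [("12:30-13:00", 100.0), ("12:31-13:01", 103.0),
        ("12:32-13:02", 106.0), ("12:33-13:03", 111.0)]
for s in with_growth(rows):
    growth = f"{s.growth:.2%}" if s.growth is not None else "n/a"
    print(s.window, s.average, s.change, growth)
```

On the table above this gives growths of 3.00%, 2.91% and 4.72% for the second, third and fourth windows; the first window has no predecessor, so its growth is undefined.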

Thanks in advance for your insights,

Have a good day.

PS: I found these two posts, which could be relevant; maybe someone in this community has found a way to do this since: