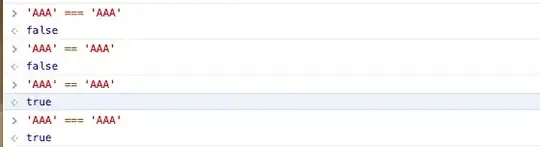

I know MemoryError-related questions have been asked before, for example here, here, here, here, or here. The suggested solutions are always to switch to Python 3 and/or 64-bit Windows or, in the case of faulty code, to fix the code. However, I am already on Python 3 and 64-bit Windows. I can also see from Windows Task Manager that several GB of my 64 GB of RAM are still available when Python throws the MemoryError.
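Running on 64-bit Windows does not by itself guarantee that the Python interpreter is a 64-bit build, so a quick check along these lines may be worth including. This is only a sketch using the standard library; the variable names `pointer_bits` and `is_64bit` are my own.

```python
import struct
import sys

# Size of a C pointer in the running interpreter, in bits (32 or 64).
pointer_bits = struct.calcsize("P") * 8

# sys.maxsize is 2**31 - 1 on 32-bit builds and 2**63 - 1 on 64-bit builds.
is_64bit = sys.maxsize > 2**32

print(f"Python {sys.version_info.major}.{sys.version_info.minor}, "
      f"{pointer_bits}-bit interpreter, 64-bit build: {is_64bit}")
```

A 32-bit interpreter on a 64-bit OS would still be limited to a few GB of address space, which is why the check looks at the interpreter rather than the operating system.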

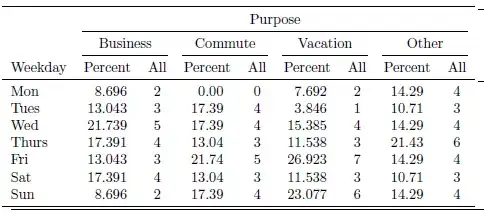

I have about 15 date-indexed pandas data frames, each with 14,000 rows and on average 5,000 columns of float data, of which about 40-50% are NaN values, that I read in from the hard drive. I cannot simply drop the NaNs because different columns have NaNs at different dates. The MemoryError occurs when I try to concatenate the frames with pd.concat(), so it is not a matter of faulty code or a runaway while loop. If I leave some of the data frames out of the concatenation, pd.concat() succeeds, but the MemoryError then occurs when I run a scikit-learn decision tree analysis on the concatenated data.
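To put rough numbers on the sizes involved, here is a back-of-the-envelope estimate plus a scaled-down stand-in for the concatenation. It is a sketch under assumptions: the data is taken to be float64 (8 bytes per value, NaNs included), the frames are assumed to be joined column-wise with `axis=1`, and the helper `estimated_gib`, the random data, and the column names are made up for illustration.

```python
import numpy as np
import pandas as pd

# Figures from the description above.
N_FRAMES, N_ROWS, N_COLS = 15, 14_000, 5_000


def estimated_gib(n_frames, n_rows, n_cols, itemsize=8):
    """Raw size of the float values alone, in GiB (float64 assumed)."""
    return n_frames * n_rows * n_cols * itemsize / 2**30


# NaN is an ordinary float64 value, so missing data takes the same space.
full_size_gib = estimated_gib(N_FRAMES, N_ROWS, N_COLS)
print(f"Estimated size of all values: {full_size_gib:.1f} GiB")

# Scaled-down stand-in: 3 frames of 140 rows x 50 columns, roughly 45% NaN.
rng = np.random.default_rng(0)
index = pd.date_range("2000-01-01", periods=140, freq="D")
frames = []
for i in range(3):
    values = rng.random((140, 50))
    values[rng.random((140, 50)) < 0.45] = np.nan
    columns = [f"frame{i}_col{j}" for j in range(50)]
    frames.append(pd.DataFrame(values, index=index, columns=columns))

# Column-wise concatenation on the shared date index (an assumption).
combined = pd.concat(frames, axis=1)
combined_bytes = int(combined.memory_usage(index=True, deep=True).sum())
print(combined.shape, f"{combined_bytes / 2**10:.0f} KiB")
```

The estimate covers only the values themselves. During the concatenation the source frames and the result exist at the same time, so the peak requirement is likely at least about twice that figure.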

My question is: how can I get Python to use all the available memory instead of raising a MemoryError?

Edit: screenshots added

IPython interpreter screenshot (I don't have Python 2 even installed):