I have implemented a simple linear search and shown the results on the graph with StdDraw library. I have ran the search for a randomly generated number on tables of sizes from 1000 to 100000 elements, incrementing the size by 1 each time. The points on the graph represent the average time it took to find a random number in the given table, approximated in 1000 runs on the same table size.

However there are big deviations visible on the graph which I do not know how to explain. Is it possible that this is due to the interference of other background tasks requesting CPU processing? Could it be that the spikes are because of poorly generated pseudorandom integers, because the nextInt() method is called in a really tiny time slice resulting in similar (very big or very low) random integers?

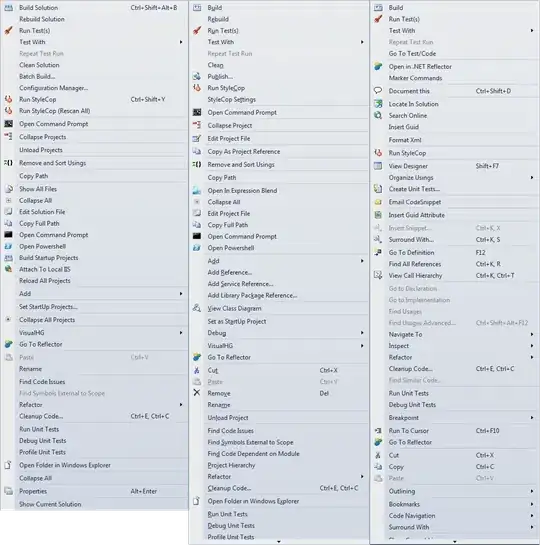

(The red line represents the linear search and the blue one binary search. Ignore the latter)