How does parallelization work using JDBC?

Here is my code:

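```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()

# Partitioned JDBC read: Spark issues numPartitions queries in parallel,
# each restricted to a range of values of `column`.
DF = spark.read.jdbc(
    url=...,
    table='...',
    column='XXXX',
    lowerBound=Z,
    upperBound=Y,
    numPartitions=K,
)
```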

I would like to know how the following parameters are related, and whether there is a way to choose them properly:

- `column`: it should be the column used for partitioning. Does it need to be a numeric column?
- `lowerBound`: is there a rule of thumb for choosing it?
- `upperBound`: is there a rule of thumb for choosing it?
- `numPartitions`: is there a rule of thumb for choosing it?

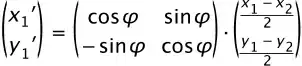

I understood that:

`stride = (upperBound / numPartitions) - (lowerBound / numPartitions)`

Does each partition contain several "strides"?

In other words, are the partitions filled stride by stride until all the observations have been assigned?
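A simplified pure-Python sketch of the partitioning logic in Spark's JDBC source (`JDBCRelation.columnPartition` in the Spark code base) may help frame this. It assumes non-negative integer bounds and leaves out details of newer Spark versions (date/timestamp partition columns, and a small stride-alignment adjustment when the division is not exact); the function names are hypothetical. What it shows: Spark builds exactly `numPartitions` `WHERE` clauses, and each interior partition covers a single stride. The first clause has no lower limit (and also picks up `NULL`s) and the last has no upper limit, which matches the documentation's note that `lowerBound` and `upperBound` only decide the stride and do not filter rows: values outside the bounds land in the first or last partition.

```python
def jdbc_stride(lower_bound: int, upper_bound: int, num_partitions: int) -> int:
    # Same shape as the formula in the question. Python's // only matches
    # Spark's truncating Long division for non-negative bounds (assumed here).
    return upper_bound // num_partitions - lower_bound // num_partitions


def jdbc_partition_predicates(column: str, lower_bound: int, upper_bound: int,
                              num_partitions: int) -> list:
    """Hypothetical helper: one WHERE clause per partition, Spark-style."""
    stride = jdbc_stride(lower_bound, upper_bound, num_partitions)
    predicates = []
    current = lower_bound
    for i in range(num_partitions):
        # No lower limit for the first partition: it takes everything below.
        lower = f"{column} >= {current}" if i != 0 else None
        current += stride
        # No upper limit for the last partition: it takes everything above.
        upper = f"{column} < {current}" if i != num_partitions - 1 else None
        if upper is None:
            predicates.append(lower)  # None when num_partitions == 1: no filter
        elif lower is None:
            predicates.append(f"{upper} OR {column} IS NULL")
        else:
            predicates.append(f"{lower} AND {upper}")
    return predicates


if __name__ == "__main__":
    for p in jdbc_partition_predicates("XXXX", 80_000, 180_000, 8):
        print(p)
```

With the parameters listed in the question (80,000 / 180,000 / 8) this sketch prints:

```text
XXXX < 92500 OR XXXX IS NULL
XXXX >= 92500 AND XXXX < 105000
XXXX >= 105000 AND XXXX < 117500
XXXX >= 117500 AND XXXX < 130000
XXXX >= 130000 AND XXXX < 142500
XXXX >= 142500 AND XXXX < 155000
XXXX >= 155000 AND XXXX < 167500
XXXX >= 167500
```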

Please look at this picture to get a sense of the question, considering the following parameters:


- `lowerBound` = 80,000
- `upperBound` = 180,000
- `numPartitions` = 8
- stride = 12,500

Notice that:

- `min(XXXX)` = 0
- `max(XXXX)` = 350,000
- `count(XXXX)` = 500,000,000
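To see what these numbers could mean for the workload, here is a rough estimate of how many rows each partition would read. It reuses the same simplified stride logic (non-negative integers) and adds a purely hypothetical assumption that `XXXX` is uniformly distributed between its min and max; real data will differ, so treat the output as an illustration only. The function name is hypothetical. With bounds of 80,000 and 180,000, the six interior partitions each cover 12,500 values, while the first covers 0 to 92,499 and the last covers 167,500 to 350,000, so under this assumption the last partition would read roughly half of all rows. Setting `lowerBound`/`upperBound` to the column's actual min/max gives equal-width ranges instead, which only turns into balanced partitions if the values are spread evenly.

```python
def estimate_rows_per_partition(lower_bound, upper_bound, num_partitions,
                                col_min, col_max, total_rows):
    """Hypothetical helper: rows per partition ASSUMING a uniform distribution."""
    stride = upper_bound // num_partitions - lower_bound // num_partitions
    # Interior boundaries between consecutive partitions.
    cuts = [lower_bound + k * stride for k in range(1, num_partitions)]
    # First/last partitions are open-ended; clip them to the observed range.
    edges = [col_min] + cuts + [col_max + 1]
    n_values = col_max - col_min + 1
    estimates = []
    for start, end in zip(edges, edges[1:]):
        width = max(0, min(end, col_max + 1) - max(start, col_min))
        # Uniform assumption: rows proportional to the number of values covered.
        estimates.append(total_rows * width // n_values)
    return estimates


if __name__ == "__main__":
    # Parameters from the question.
    print(estimate_rows_per_partition(80_000, 180_000, 8, 0, 350_000, 500_000_000))
    # Bounds set to the column's observed min/max instead.
    print(estimate_rows_per_partition(0, 350_000, 8, 0, 350_000, 500_000_000))
```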

P.S. I have read the documentation and this answer, but I didn't understand them very well.