Several problems with the way you adapted that example:

- the worker thread locks the io_service with the `work` instance, so it will never complete

- you `usleep` for some time before spawning each async task, but none of the tasks run until the loop has completed. This means that all the delays are done before any work starts.

Here's my suggestion:

- run the service before starting the async tasks

- have one `work` instance lock the service, in case the service would become idle before the next HTTP request is posted

- don't lock `work` inside the worker thread
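To see why it matters where the `work` instance lives, here is a minimal model that does not use Asio at all. `ToyContext`, `add_guard`, `remove_guard` and `run_tasks` are hypothetical names made up for this sketch. The only property borrowed from the real thing is that `run()` returns once there is nothing queued and nothing is holding the service open.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>

// Toy stand-in for an io_context (NOT Boost.Asio): run() returns once the
// queue is empty AND no guard is outstanding.
class ToyContext {
    std::mutex m_;
    std::condition_variable cv_;
    std::deque<std::function<void()>> queue_;
    int guards_ = 0;
public:
    void post(std::function<void()> f) {
        { std::lock_guard<std::mutex> lk(m_); queue_.push_back(std::move(f)); }
        cv_.notify_one();
    }
    void add_guard() { std::lock_guard<std::mutex> lk(m_); ++guards_; }
    void remove_guard() {
        { std::lock_guard<std::mutex> lk(m_); assert(guards_ > 0); --guards_; }
        cv_.notify_all();
    }
    void run() {
        std::unique_lock<std::mutex> lk(m_);
        for (;;) {
            cv_.wait(lk, [this] { return !queue_.empty() || guards_ == 0; });
            if (queue_.empty()) return;      // implies guards_ == 0
            auto task = std::move(queue_.front());
            queue_.pop_front();
            lk.unlock();
            task();                          // run the task without holding the lock
            lk.lock();
        }
    }
};

// Suggested order: take the guard, start the worker, post the tasks, then
// release the guard from the posting thread and join.
int run_tasks(int n) {
    ToyContext ctx;
    int done = 0;                            // only touched by the worker until join()
    ctx.add_guard();
    std::thread worker([&ctx] { ctx.run(); });
    for (int i = 0; i < n; ++i)
        ctx.post([&done] { ++done; });
    ctx.remove_guard();                      // if the worker held this instead, run() could never return
    worker.join();
    return done;
}
```

In this model, moving `add_guard()` into the worker's lambda with no matching `remove_guard()` makes `join()` block forever, which is the first problem listed above.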

Live On Coliru

#include "example/common/root_certificates.hpp"

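#include <boost/beast.hpp>

#include <boost/asio.hpp>

#include <boost/asio/ssl.hpp>

#include <cstdio> // fprintf

#include <cstdlib> // atoi, EXIT_FAILURE

#include <functional> // std::bind

#include <iostream> // std::cerr

#include <memory> // std::shared_ptr

#include <unistd.h> // usleep (POSIX)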

using tcp = boost::asio::ip::tcp; // from <boost/asio/ip/tcp.hpp>

namespace ssl = boost::asio::ssl; // from <boost/asio/ssl.hpp>

namespace http = boost::beast::http; // from <boost/beast/http.hpp>

//------------------------------------------------------------------------------

// Report a failure

void

fail(boost::system::error_code ec, char const* what)

{

std::cerr << what << ": " << ec.message() << "\n";

}

// Performs an HTTP GET and prints the response

class session : public std::enable_shared_from_this<session>

{

tcp::resolver resolver_;

ssl::stream<tcp::socket> stream_;

boost::beast::flat_buffer buffer_; // (Must persist between reads)

http::request<http::empty_body> req_;

http::response<http::string_body> res_;

public:

// Resolver and stream require an io_context

explicit

session(boost::asio::io_context& ioc, ssl::context& ctx)

: resolver_(ioc)

, stream_(ioc, ctx)

{

}

// Start the asynchronous operation

void

run(

char const* host,

char const* port,

char const* target,

int version)

{

// Set SNI Hostname (many hosts need this to handshake successfully)

if(! SSL_set_tlsext_host_name(stream_.native_handle(), host))

{

boost::system::error_code ec{static_cast<int>(::ERR_get_error()), boost::asio::error::get_ssl_category()};

std::cerr << ec.message() << "\n";

return;

}

// Set up an HTTP GET request message

req_.version(version);

req_.method(http::verb::get);

req_.target(target);

req_.set(http::field::host, host);

req_.set(http::field::user_agent, BOOST_BEAST_VERSION_STRING);

// Look up the domain name

resolver_.async_resolve(

host,

port,

std::bind(

&session::on_resolve,

shared_from_this(),

std::placeholders::_1,

std::placeholders::_2));

}

void

on_resolve(

boost::system::error_code ec,

tcp::resolver::results_type results)

{

if(ec)

return fail(ec, "resolve");

// Make the connection on the IP address we get from a lookup

boost::asio::async_connect(

stream_.next_layer(),

results.begin(),

results.end(),

std::bind(

&session::on_connect,

shared_from_this(),

std::placeholders::_1));

}

void

on_connect(boost::system::error_code ec)

{

if(ec)

return fail(ec, "connect");

// Perform the SSL handshake

stream_.async_handshake(

ssl::stream_base::client,

std::bind(

&session::on_handshake,

shared_from_this(),

std::placeholders::_1));

}

void

on_handshake(boost::system::error_code ec)

{

if(ec)

return fail(ec, "handshake");

// Send the HTTP request to the remote host

http::async_write(stream_, req_,

std::bind(

&session::on_write,

shared_from_this(),

std::placeholders::_1,

std::placeholders::_2));

}

void

on_write(

boost::system::error_code ec,

std::size_t bytes_transferred)

{

boost::ignore_unused(bytes_transferred);

if(ec)

return fail(ec, "write");

// Receive the HTTP response

http::async_read(stream_, buffer_, res_,

std::bind(

&session::on_read,

shared_from_this(),

std::placeholders::_1,

std::placeholders::_2));

}

void

on_read(

boost::system::error_code ec,

std::size_t bytes_transferred)

{

boost::ignore_unused(bytes_transferred);

if(ec)

return fail(ec, "read");

// Write the message to standard out

//std::cout << res_ << std::endl;

// Gracefully close the stream

stream_.async_shutdown(

std::bind(

&session::on_shutdown,

shared_from_this(),

std::placeholders::_1));

}

void

on_shutdown(boost::system::error_code ec)

{

if(ec == boost::asio::error::eof)

{

// Rationale:

// http://stackoverflow.com/questions/25587403/boost-asio-ssl-async-shutdown-always-finishes-with-an-error

ec.assign(0, ec.category());

}

if(ec)

return fail(ec, "shutdown");

// If we get here then the connection is closed gracefully

}

};

// Above: the boost-beast-client-async-ssl session code.

struct io_context_runner

{

boost::asio::io_context& ioc;

void operator()()const

{

try{

ioc.run();

}catch(std::exception& e){

fprintf(stderr, "e: %s\n", e.what());

}

}

};

namespace async_http_ssl {

using ::session;

}

#include <thread>

int main(int argc, char *argv[]) {

// The io_context is required for all I/O

boost::asio::io_context ioc;

std::thread t;

try {

// Run the I/O service on a worker thread. w keeps run() from returning until w goes out of scope and all work is complete

boost::asio::io_context::work w(ioc);

t = std::thread { io_context_runner{ioc} };

int total_run = 1;

if (argc > 1)

total_run = atoi(argv[1]);

#if 0

auto host = "104.236.162.70"; // IP of isocpp.org

auto port = "443"; //

auto target = "/"; //

#else

auto host = "127.0.0.1";

auto port = "443";

auto target = "/BBB/http_client_async_ssl.cpp";

#endif

std::string const body = ""; //

int version = 11;

// The SSL context is required, and holds certificates

ssl::context ctx{ssl::context::sslv23_client};

// This holds the root certificate used for verification

load_root_certificates(ctx);

typedef std::shared_ptr<async_http_ssl::session> pointer;

for (int i = 0; i < total_run; ++i) {

pointer s = std::make_shared<async_http_ssl::session>(ioc, ctx);

usleep(1000000 / total_run);

s->run(host, port, target, version);

}

} catch (std::exception const &e) {

std::cerr << "Error: " << e.what() << std::endl;

if (t.joinable())

t.join(); // destroying a joinable std::thread would call std::terminate

return EXIT_FAILURE;

}

if (t.joinable())

t.join();

// If we get here then the connections have been closed gracefully

}

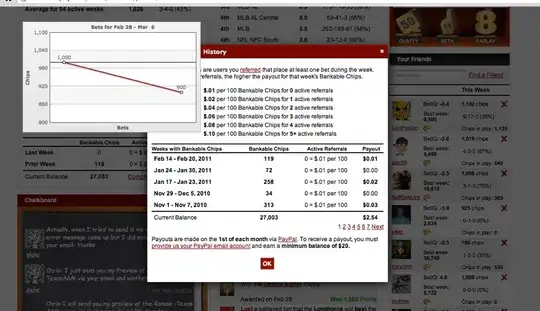

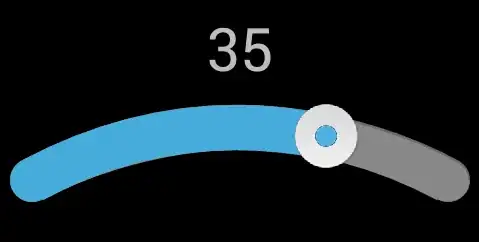

On my system, memory profiling with 1 connection:

With 100 connections:

With 1000 connections:

Analysis

What does it mean? It still seems that Beast is using progressively more memory when sending more requests, right?

Well, no. The problem is that you're starting requests faster than they can be completed. So, the memory load increases mainly because many session instances are extant at a given time. Once completed, they will free the resources automatically (due to the use of shared_ptr<session>).
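As a rough illustration of that point (a sketch with made-up names, not Beast code): `FakeSession` below just counts live instances, and the queue stands in for handlers that have been started but not yet run.

```cpp
#include <algorithm>
#include <cassert>
#include <deque>
#include <functional>
#include <memory>

// Hypothetical stand-in for a session: tracks how many instances are alive.
struct FakeSession {
    static int live, peak;
    FakeSession()  { peak = std::max(peak, ++live); }
    ~FakeSession() { --live; }
};
int FakeSession::live = 0;
int FakeSession::peak = 0;

// Start n sessions up front. Each one stays alive until its pending handler
// has run and been destroyed, just as shared_from_this() keeps a session alive.
int peak_when_started_in_burst(int n) {
    FakeSession::live = FakeSession::peak = 0;
    std::deque<std::function<void()>> pending;
    for (int i = 0; i < n; ++i) {
        auto s = std::make_shared<FakeSession>();
        pending.push_back([s] { /* final handler: nothing left to do */ });
    }
    while (!pending.empty()) {
        pending.front()();
        pending.pop_front();                 // releases the last owner of that session
    }
    assert(FakeSession::live == 0);          // everything is freed once completed
    return FakeSession::peak;
}
```

Calling `peak_when_started_in_burst(1000)` reports a peak of 1000 live sessions even though none is left at the end. The peak grows with the number of requests in flight, not because anything leaks.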

Making Requests Sequential

To drive the point home, here's a modified version that accepts an `on_complete_` handler with the session:

std::function<void()> on_complete_;

// Resolver and stream require an io_context

template <typename Handler>

explicit

session(boost::asio::io_context& ioc, ssl::context& ctx, Handler&& handler)

: resolver_(ioc)

, stream_(ioc, ctx)

, on_complete_(std::forward<Handler>(handler))

{

}

~session() {

if (on_complete_) on_complete_();

}
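The reason the destructor is a workable place for this: each pending handler holds a `shared_ptr` to the session, so the destructor only runs after the last handler has finished. A stripped-down sketch of just that mechanism follows; `MiniSession` and `completion_order` are hypothetical names, and plain `std::function` objects play the role of pending handlers.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical miniature of the session above: the handler fires from the
// destructor, i.e. when the last shared_ptr owner lets go.
struct MiniSession {
    std::function<void()> on_complete_;
    explicit MiniSession(std::function<void()> h) : on_complete_(std::move(h)) {}
    ~MiniSession() { if (on_complete_) on_complete_(); }
};

// Records the order of events for one session with two pending "handlers".
std::vector<int> completion_order() {
    std::vector<int> log;
    auto s = std::make_shared<MiniSession>([&log] { log.push_back(3); });
    std::function<void()> first  = [s, &log] { log.push_back(1); };
    std::function<void()> second = [s, &log] { log.push_back(2); };
    s.reset();                    // the handlers still own the session: nothing fires
    first();
    first = nullptr;              // one owner left
    assert(log.size() == 1);
    second();
    second = nullptr;             // last owner gone: the destructor runs on_complete_
    return log;
}
```

One caveat with this design: the handler runs inside a destructor, so it must not let an exception escape, or the program calls `std::terminate`.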

Now you can rewrite the main program logic as an async operation chain:

struct Tester {

boost::asio::io_context ioc;

boost::optional<boost::asio::io_context::work> work{ioc};

std::thread t { io_context_runner{ioc} };

ssl::context ctx{ssl::context::sslv23_client};

Tester() {

load_root_certificates(ctx);

}

void run(int remaining = 1) {

if (remaining <= 0)

return;

auto s = std::make_shared<session>(ioc, ctx, [=] { run(remaining - 1); });

s->run("127.0.0.1", "443", "/BBB/http_client_async_ssl.cpp", 11);

}

~Tester() {

work.reset();

if (t.joinable()) t.join();

}

};

int main(int argc, char *argv[]) {

Tester tester;

tester.run(argc>1? atoi(argv[1]):1);

}

With this program (Full Code On Coliru), we get much more stable results:

1 request:

100 requests:

1000 requests:

Restoring Throughput

Well, that's a bit too conservative: sending many requests one at a time might become really slow. How about some concurrency? Easy:

int main(int argc, char *argv[]) {

int const total = argc>1? atoi(argv[1]) : 1;

int const concurrent = argc>2? atoi(argv[2]) : 1;

{

std::vector<Tester> chains(concurrent);

for (auto& chain : chains)

chain.run(total / concurrent);

}

std::cout << "All done\n";

}

That's all! Now we can have `concurrent` separate chains of execution servicing ~`total` requests (approximately, because `total / concurrent` is integer division). See the difference in run time:

$ time ./sotest 1000

All done

real 0m53.295s

user 0m13.124s

sys 0m0.232s

$ time ./sotest 1000 10

All done

real 0m8.808s

user 0m8.884s

sys 0m1.096s
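A side note on the request count: `total / concurrent` rounds down, so a run such as `./sotest 1000 3` would issue 3 × 333 = 999 requests. If the exact count matters, one possible way to spread the remainder is sketched here. `split_requests` is a hypothetical helper, not part of the program above.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Hypothetical helper: split `total` requests over `concurrent` chains so that
// no request is lost. The first (total % concurrent) chains get one extra.
std::vector<int> split_requests(int total, int concurrent) {
    assert(concurrent > 0 && total >= 0);
    std::vector<int> shares(concurrent, total / concurrent);
    for (int i = 0; i < total % concurrent; ++i)
        ++shares[i];
    // Postcondition: the shares add up to exactly `total`.
    assert(std::accumulate(shares.begin(), shares.end(), 0) == total);
    return shares;
}
```

Each chain would then call `run()` with its own share instead of `total / concurrent`.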

With the memory usage continuing to look healthy: