In simple terms, I'm trying to add the `latitude` and `longitude` columns from `df1` to a smaller DataFrame called `df2` by comparing the values in their `air_id` and `hpg_id` columns:

The tricky part of adding `latitude` and `longitude` to `df2` is how each row is compared against `df1`. There are three possible cases:

- When there is a match between `df2.air_id` AND `df1.air_id`;
- When there is a match between `df2.hpg_id` AND `df1.hpg_id`;
- When there is a match between both of them: `[df2.air_id, df2.hpg_id]` AND `[df1.air_id, df1.hpg_id]`.

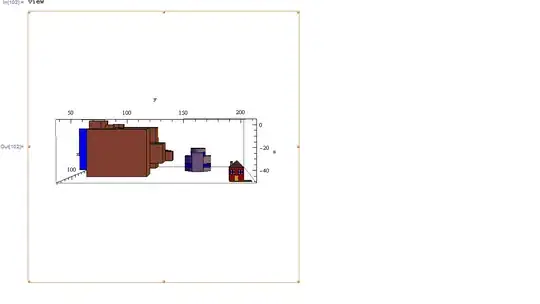
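To make the three rules concrete, here is a minimal sketch that checks them row by row on the sample data from the setup code in this question. `rule_matches` is a hypothetical helper, and it assumes that an empty string means the id is missing and should never count as a match. Rule 3 is simply the case where both comparisons are true.

```python
import pandas as pd

# Sample data copied from the setup code in the question
df1 = pd.DataFrame({
    'air_id': ['air1', '', 'air3', 'air4', 'air2', 'air1'],
    'hpg_id': ['hpg1', 'hpg2', '', 'hpg4', '', ''],
    'latitude': [101.1, 102.2, 103, 104, 102, 101.1],
    'longitude': [51, 52, 53, 54, 52, 51],
    'ignore_me': [91, 92, 93, 94, 95, 96],
})
df2 = pd.DataFrame({
    'air_id': ['', 'air2', 'air3', 'air1'],
    'hpg_id': ['hpg1', 'hpg2', '', ''],
})


def rule_matches(r2, r1):
    """Hypothetical helper: True if df2 row r2 matches df1 row r1 under rules 1-3.

    Assumes an empty string is a missing id and never matches anything.
    """
    air = r2['air_id'] != '' and r2['air_id'] == r1['air_id']  # rule 1
    hpg = r2['hpg_id'] != '' and r2['hpg_id'] == r1['hpg_id']  # rule 2
    # Rule 3 (both ids match) is the case where air and hpg are both True.
    return air or hpg


# For every df2 row, collect the index labels of the df1 rows it matches
matches = {
    i2: [i1 for i1, r1 in df1.iterrows() if rule_matches(r2, r1)]
    for i2, r2 in df2.iterrows()
}
print(matches)  # → {0: [0], 1: [1, 4], 2: [2], 3: [0, 5]}
```

On the sample data this shows that `df2` row 1 (`air2`/`hpg2`) matches two different `df1` rows whose latitudes differ (102.2 via `hpg2`, 102 via `air2`), and that row 3 (`air1`) matches two `df1` rows with identical coordinates, so a solution has to decide how to handle multiple matches.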

With that in mind, the expected result should be:

Notice how the `ignore_me` column from `df1` was left out of the resulting DataFrame.

Here is the code to set up the DataFrames:

```python
import pandas as pd
# display() is built in under Jupyter/IPython; importing it explicitly
# also lets the snippet run as a plain script (requires IPython)
from IPython.display import display

data = { 'air_id'    : [ 'air1', '', 'air3', 'air4', 'air2', 'air1' ],
         'hpg_id'    : [ 'hpg1', 'hpg2', '', 'hpg4', '', '' ],
         'latitude'  : [ 101.1, 102.2, 103, 104, 102, 101.1 ],
         'longitude' : [ 51, 52, 53, 54, 52, 51 ],
         'ignore_me' : [ 91, 92, 93, 94, 95, 96 ] }

df1 = pd.DataFrame(data)
display(df1)

data2 = { 'air_id' : [ '', 'air2', 'air3', 'air1' ],
          'hpg_id' : [ 'hpg1', 'hpg2', '', '' ] }

df2 = pd.DataFrame(data2)
display(df2)
```

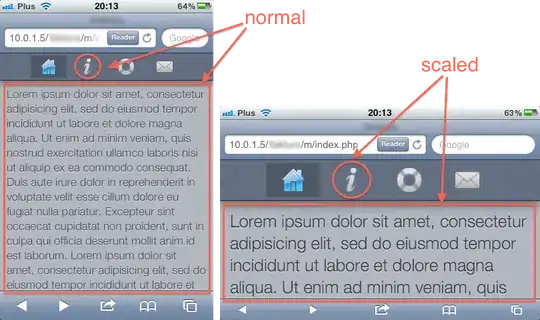

Unfortunately, I haven't been able to get `merge()` to do this. My current result is a DataFrame that contains every column from `df1`, mostly filled with NaNs:

How can I copy these specific columns from df1 using the rules above?
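One possible merge-based sketch, under assumptions the question leaves open: merge `df2` with `df1` separately on each id column (rules 1 and 2), keep only `latitude` and `longitude`, then combine the two lookups. It assumes empty strings mean "no id", that duplicate ids in `df1` (such as the two `air1` rows) carry the same coordinates, and that an `air_id` match takes precedence when the two ids point to different `df1` rows, as `air2`/`hpg2` does in the sample data. The helper name `lookup` is hypothetical.

```python
import pandas as pd

# Sample data copied from the setup code in the question
df1 = pd.DataFrame({
    'air_id': ['air1', '', 'air3', 'air4', 'air2', 'air1'],
    'hpg_id': ['hpg1', 'hpg2', '', 'hpg4', '', ''],
    'latitude': [101.1, 102.2, 103, 104, 102, 101.1],
    'longitude': [51, 52, 53, 54, 52, 51],
    'ignore_me': [91, 92, 93, 94, 95, 96],
})
df2 = pd.DataFrame({
    'air_id': ['', 'air2', 'air3', 'air1'],
    'hpg_id': ['hpg1', 'hpg2', '', ''],
})

COLS = ['latitude', 'longitude']  # the only df1 columns to copy (ignore_me is dropped)


def lookup(key):
    """Hypothetical helper: left-merge df2 with df1 on one id column, return only COLS.

    Empty ids are removed from df1 so '' never matches '', and duplicate ids
    are collapsed (keeping the first) so df2 keeps exactly one row per id.
    """
    src = df1.loc[df1[key] != '', [key] + COLS].drop_duplicates(subset=key)
    # how='left' keeps df2's rows in their original order, so the result
    # lines up with df2's default RangeIndex
    return df2[[key]].merge(src, on=key, how='left')[COLS]


by_air = lookup('air_id')  # rule 1
by_hpg = lookup('hpg_id')  # rule 2
# Prefer the air_id match and fill the remaining NaNs from the hpg_id match;
# when both ids point at the same df1 row (rule 3) the values agree anyway.
result = df2.join(by_air.combine_first(by_hpg))
print(result)
```

With the sample data, `result` ends up with latitudes 101.1, 102, 103 and 101.1, and no `ignore_me` column. If the `hpg_id` match should win instead, swapping the operands to `by_hpg.combine_first(by_air)` changes the `air2`/`hpg2` row to 102.2. Note that `longitude` becomes a float column, because the intermediate merges introduce NaNs.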