1. Introduction:

I want to develop a special filter method for UIImages. My idea is to turn every color in a picture to black except for one particular color, which should keep its appearance.

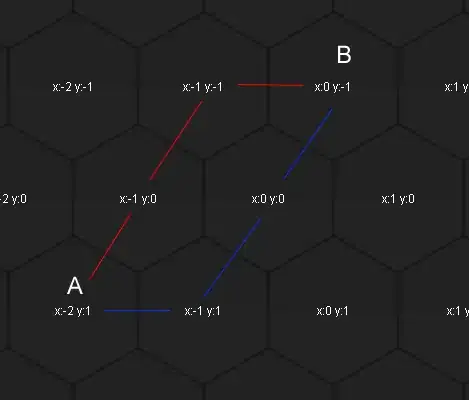

Images are always nice, so look at this image to get what I'd like to achieve:

2. Explanation:

I'd like to apply a filter (algorithm) that is able to find specific colors in an image. The algorithm must be able to replace all colors that do not match the reference colors with, e.g., "black".

I've written some simple code that is able to replace specific colors (color ranges with a threshold) in any image. But to be honest, this solution doesn't seem to be fast or efficient at all!

    import UIKit

    // HEXtoHSL, RGBtoHSL and calculateHSLDistance are helper functions that are not shown here.
    func colorFilter(image: UIImage, findcolor: String, threshold: Int) -> UIImage {
        let img: CGImage = image.cgImage!
        let width = img.width, height = img.height

        // Draw the image into an RGBA bitmap context (8 bits per channel) to get at the raw bytes.
        let context = CGContext(data: nil, width: width, height: height, bitsPerComponent: 8, bytesPerRow: 4 * width, space: CGColorSpaceCreateDeviceRGB(), bitmapInfo: CGImageAlphaInfo.premultipliedLast.rawValue)!
        context.draw(img, in: CGRect(x: 0, y: 0, width: width, height: height))

        let binaryData = context.data!.assumingMemoryBound(to: UInt8.self)
        let referenceColor = HEXtoHSL(findcolor) // [h, s, l] integer array

        for i in 0..<height {
            for j in 0..<width {
                let pixel = 4 * (i * width + j) // byte offset of this pixel's red channel
                let pixelColor = RGBtoHSL([Int(binaryData[pixel]), Int(binaryData[pixel + 1]), Int(binaryData[pixel + 2])]) // [h, s, l] integer array
                let distance = calculateHSLDistance(pixelColor, referenceColor) // value between 0 and 100

                // Overwrite every pixel that is too far away from the reference color.
                if distance > threshold {
                    let setValue: UInt8 = 255 // 255 paints white; 0 would paint the black described above
                    binaryData[pixel] = setValue
                    binaryData[pixel + 1] = setValue
                    binaryData[pixel + 2] = setValue
                    binaryData[pixel + 3] = 255 // fully opaque
                }
            }
        }

        let outputImg = context.makeImage()!
        return UIImage(cgImage: outputImg, scale: image.scale, orientation: image.imageOrientation)
    }
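
The three helpers called in `colorFilter` are not part of this post. For anyone who wants to run the function, the following is a minimal sketch of what they might look like. Everything in it is an assumption: the value ranges (h in 0..<360, s and l in 0...100), the fallback to black for malformed hex strings, and especially the distance formula (a plain average of the normalized hue, saturation and lightness differences). It is a hypothetical stand-in, not the original implementation.

```swift
// Hypothetical stand-ins for the helpers used by colorFilter (assumed, not the original code).

/// Converts [r, g, b] (each 0...255) to [h, s, l] with h in 0..<360 and s, l in 0...100.
func RGBtoHSL(_ rgb: [Int]) -> [Int] {
    let r = Double(rgb[0]) / 255, g = Double(rgb[1]) / 255, b = Double(rgb[2]) / 255
    let maxC = max(r, g, b), minC = min(r, g, b)
    let delta = maxC - minC
    let l = (maxC + minC) / 2
    var h = 0.0, s = 0.0
    if delta > 0 {
        s = delta / (1 - abs(2 * l - 1))
        if maxC == r {
            h = ((g - b) / delta).truncatingRemainder(dividingBy: 6)
        } else if maxC == g {
            h = (b - r) / delta + 2
        } else {
            h = (r - g) / delta + 4
        }
        h *= 60
        if h < 0 { h += 360 }
    }
    return [Int(h.rounded()) % 360, Int((s * 100).rounded()), Int((l * 100).rounded())]
}

/// Parses "#RRGGBB" (the "#" is optional) and converts it to [h, s, l].
/// Assumption: malformed input falls back to black.
func HEXtoHSL(_ hex: String) -> [Int] {
    let digits = hex.hasPrefix("#") ? String(hex.dropFirst()) : hex
    guard digits.count == 6, digits.allSatisfy({ $0.isHexDigit }),
          let value = Int(digits, radix: 16) else { return [0, 0, 0] }
    return RGBtoHSL([(value >> 16) & 0xFF, (value >> 8) & 0xFF, value & 0xFF])
}

/// Returns a distance between 0 and 100: the average of the normalized
/// hue (with wrap-around), saturation and lightness differences.
func calculateHSLDistance(_ a: [Int], _ b: [Int]) -> Int {
    let rawHue = abs(a[0] - b[0])
    let hue = Double(min(rawHue, 360 - rawHue)) / 180
    let sat = Double(abs(a[1] - b[1])) / 100
    let lum = Double(abs(a[2] - b[2])) / 100
    return Int(((hue + sat + lum) / 3 * 100).rounded())
}
```

With these stand-ins, `calculateHSLDistance(HEXtoHSL("#c6456f"), HEXtoHSL("#C6476f"))` comes out very small, so a low threshold already treats the two colors as a match. Note that each pixel still pays for a floating-point conversion and a temporary array allocation, which is likely a large part of the cost.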

3. Code Information:

The code above works fine but is very inefficient. Because of all the calculations (especially the color conversions), it takes a LONG (too long) time, so have a look at this screenshot:

My question

I'm pretty sure there is a WAY simpler solution for filtering a specific color with a given threshold (#c6456f is similar to #C6476f, ...) than looping through EVERY single pixel to compare its color. What I was thinking of is something like a filter (a CIFilter method) as an alternative to the code above.
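
To make "similar within a threshold" concrete, here is a small sketch of one possible similarity test that skips the HSL conversion and compares the RGB components directly, using squared distances so no square root is needed. The names `parseHex` and `isSimilar` are hypothetical, and whether plain RGB distance is close enough to perceived similarity is an assumption. It only illustrates the comparison itself; it would still have to be applied to each pixel.

```swift
/// Parses "#RRGGBB" (the "#" is optional) into its components; nil if malformed.
func parseHex(_ hex: String) -> (r: Int, g: Int, b: Int)? {
    let digits = hex.hasPrefix("#") ? String(hex.dropFirst()) : hex
    guard digits.count == 6, digits.allSatisfy({ $0.isHexDigit }),
          let value = Int(digits, radix: 16) else { return nil }
    return ((value >> 16) & 0xFF, (value >> 8) & 0xFF, value & 0xFF)
}

/// True when the Euclidean RGB distance between the two colors is at most `threshold`.
/// Both sides are compared squared, so only integer arithmetic is involved.
func isSimilar(_ lhs: (r: Int, g: Int, b: Int), _ rhs: (r: Int, g: Int, b: Int), threshold: Int) -> Bool {
    let dr = lhs.r - rhs.r
    let dg = lhs.g - rhs.g
    let db = lhs.b - rhs.b
    return dr * dr + dg * dg + db * db <= threshold * threshold
}
```

For the two colors mentioned above, only the green component differs (0x45 versus 0x47), so `isSimilar(parseHex("#c6456f")!, parseHex("#C6476f")!, threshold: 5)` is true, while comparing either of them against `parseHex("#000000")!` with the same threshold is false.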

Some Notes

Please do not post replies that suggest using the OpenCV library. I would like to develop this "algorithm" exclusively in Swift.

The image that was being processed when the screenshot was taken had a resolution of 500 × 800 px.

That's all

Did you really read this far? Congratulations! Any help with speeding up my code would be much appreciated. (Maybe there's a better way to get the pixel colors than looping through every pixel.) Thanks a million in advance :)