I have noticed that `tf.nn.softmax_cross_entropy_with_logits_v2(labels, logits)` essentially performs three operations:

1. Apply softmax to the logits (`y_hat`) to normalize them: `y_hat_softmax = softmax(y_hat)`
2. Compute the element-wise cross-entropy terms: `y_cross = y_true * tf.log(y_hat_softmax)`
3. Sum over the classes for each instance: `-tf.reduce_sum(y_cross, reduction_indices=[1])`
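The three steps can be sketched in plain Python (standard library only, so it runs without TensorFlow). The helper names `softmax` and `softmax_cross_entropy` are hypothetical, and subtracting the row maximum before `exp` is a common numerical-stability trick; this is a sketch of the math, not necessarily how TensorFlow implements the op internally.

```python
import math

def softmax(logits):
    """Step 1: turn one row of logits into probabilities."""
    # Subtracting the row maximum avoids overflow in exp(); softmax is
    # shift-invariant, so the probabilities are unchanged.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_cross_entropy(labels, logits):
    """Per-row loss: -sum_j y_j * log(softmax(logits)_j)."""
    losses = []
    for y_row, z_row in zip(labels, logits):
        p_row = softmax(z_row)                                      # step 1
        y_cross = [y * math.log(p) for y, p in zip(y_row, p_row)]   # step 2
        losses.append(-sum(y_cross))                                # step 3
    return losses

y_true = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
y_hat = [[0.5, 1.5, 0.1], [2.2, 1.3, 1.7]]
result = softmax_cross_entropy(y_true, y_hat)
print(result)
```

With the same inputs as the TensorFlow example, this should agree with `result` and `result_tf` to about six decimal places.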

The following code, borrowed from here, demonstrates this:

    import numpy as np
    import tensorflow as tf  # TensorFlow 1.x API (tf.log, tf.Session)

    y_true = tf.convert_to_tensor(np.array([[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]))
    y_hat = tf.convert_to_tensor(np.array([[0.5, 1.5, 0.1], [2.2, 1.3, 1.7]]))

    # first step: normalize the logits with softmax
    y_hat_softmax = tf.nn.softmax(y_hat)

    # second step: multiply the one-hot labels by the log-probabilities
    y_cross = y_true * tf.log(y_hat_softmax)

    # third step: sum over the classes (axis 1) and negate
    result = -tf.reduce_sum(y_cross, 1)

    # use tf.nn.softmax_cross_entropy_with_logits_v2
    result_tf = tf.nn.softmax_cross_entropy_with_logits_v2(labels=y_true, logits=y_hat)

    with tf.Session() as sess:
        sess.run(result)
        sess.run(result_tf)
        print('y_hat_softmax:\n{0}\n'.format(y_hat_softmax.eval()))
        print('y_true: \n{0}\n'.format(y_true.eval()))
        print('y_cross: \n{0}\n'.format(y_cross.eval()))
        print('result: \n{0}\n'.format(result.eval()))
        print('result_tf: \n{0}'.format(result_tf.eval()))

Output:

    y_hat_softmax:
    [[0.227863 0.61939586 0.15274114]
     [0.49674623 0.20196195 0.30129182]]

    y_true:
    [[0. 1. 0.]
     [0. 0. 1.]]

    y_cross:
    [[-0. -0.4790107 -0. ]
     [-0. -0. -1.19967598]]

    result:
    [0.4790107 1.19967598]

    result_tf:
    [0.4790107 1.19967598]

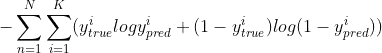

However, one-hot labels contain only 0s and 1s, so for such a binary case the cross-entropy is formulated as follows, as shown here and here:
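For reference, the formula that `cross_entropy_fn` in the next cell implements appears to be the binary cross-entropy applied to each of the $C$ classes and summed. This reading is inferred from the code; $y_j$ (the one-hot label of class $j$) and $\hat{p}_j$ (its softmax probability) are notation introduced here:

$$
L_{\text{binary}} = -\sum_{j=1}^{C} \left[ y_j \log \hat{p}_j + (1 - y_j) \log\left(1 - \hat{p}_j\right) \right]
$$

The softmax version keeps only the first term, $L_{\text{softmax}} = -\sum_{j=1}^{C} y_j \log \hat{p}_j$.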

I wrote code for this formula in the next cell, and its result differs from the one above. My question is: which one is better, or correct? Does TensorFlow also have a function that computes the cross-entropy according to this formula?

    import numpy as np

    y_true = np.array([[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
    y_hat_softmax_from_tf = np.array([[0.227863, 0.61939586, 0.15274114],
                                      [0.49674623, 0.20196195, 0.30129182]])

    # pair each label with its predicted probability: shape (2, 3, 2)
    comb = np.dstack((y_true, y_hat_softmax_from_tf))
    #print(comb)

    print('y_hat_softmax_from_tf: \n{0}\n'.format(y_hat_softmax_from_tf))
    print('y_true: \n{0}\n'.format(y_true))

    def cross_entropy_fn(sample):
        """Per-class binary cross-entropy terms (before negation) for one sample."""
        output = []
        for label in sample:
            if label[0]:
                # label == 1: y * log(p)
                y_cross_1 = label[0] * np.log(label[1])
            else:
                # label == 0: (1 - y) * log(1 - p)
                y_cross_1 = (1 - label[0]) * np.log(1 - label[1])
            output.append(y_cross_1)
        return output

    y_cross_1 = np.array([cross_entropy_fn(sample) for sample in comb])
    print('y_cross_1: \n{0}\n'.format(y_cross_1))

    result_1 = - np.sum(y_cross_1, 1)
    print('result_1: \n{0}'.format(result_1))

Output:

    y_hat_softmax_from_tf:
    [[0.227863 0.61939586 0.15274114]
     [0.49674623 0.20196195 0.30129182]]

    y_true:
    [[0. 1. 0.]
     [0. 0. 1.]]

    y_cross_1:
    [[-0.25859328 -0.4790107 -0.16574901]
     [-0.68666072 -0.225599 -1.19967598]]

    result_1:
    [0.90335299 2.11193571]
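One way to see where the two numbers diverge is to split the per-class binary formula into the softmax cross-entropy term plus the `(1 - y) * log(1 - p)` terms for the classes whose label is 0. A minimal standard-library sketch, reusing the softmax probabilities printed above; the helper names `categorical_ce`, `per_class_binary_ce`, and `extra_terms` are hypothetical:

```python
import math

# Softmax probabilities and one-hot labels copied from the outputs above.
p = [[0.227863, 0.61939586, 0.15274114],
     [0.49674623, 0.20196195, 0.30129182]]
y = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

def categorical_ce(y_row, p_row):
    # Only the true class contributes: -sum_j y_j * log(p_j)
    return -sum(yj * math.log(pj) for yj, pj in zip(y_row, p_row) if yj)

def per_class_binary_ce(y_row, p_row):
    # Each class treated as an independent yes/no problem, then summed:
    # -sum_j [y_j * log(p_j) + (1 - y_j) * log(1 - p_j)]
    return -sum(yj * math.log(pj) + (1 - yj) * math.log(1 - pj)
                for yj, pj in zip(y_row, p_row))

def extra_terms(y_row, p_row):
    # The -(1 - y_j) * log(1 - p_j) contributions from the zero-label classes
    return -sum(math.log(1 - pj) for yj, pj in zip(y_row, p_row) if not yj)

ce = [categorical_ce(yr, pr) for yr, pr in zip(y, p)]
bce = [per_class_binary_ce(yr, pr) for yr, pr in zip(y, p)]
extra = [extra_terms(yr, pr) for yr, pr in zip(y, p)]
print(ce, bce, extra)
```

For the first row, the extra terms are 0.25859328 + 0.16574901 = 0.42434229, which is exactly the gap between `result_1` (0.90335299) and `result` (0.4790107).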