Hello, I have one question:

I have implemented a raycaster and have been testing it manually. However, most of my clicks on the 3D model do not return an intersection point, and I do not know why.

First I will show the points I clicked on, then the results logged in the web console, then the code I have implemented, and finally the page structure:

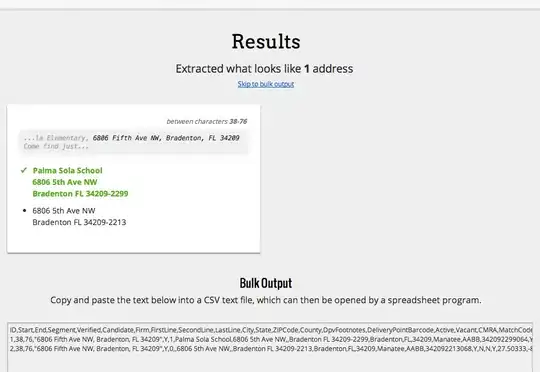

I clicked on the eight points marked in the image above.

The results are:

[] length: 0 __proto__: Array(0)

[] length: 0 __proto__: Array(0)

[] length: 0 __proto__: Array(0)

[] length: 0 __proto__: Array(0)

point: Vector3 x: -99.34871894866089 y: 67 z: 0

point: Vector3 x: -126.50880038786315 y: 73.48094335146214 z: -5.684341886080802

[] length: 0 __proto__: Array(0)

[] length: 0 __proto__: Array(0)

Here we have the implemented code, the important part is the onDocumentMouseDown function:

if ( ! Detector.webgl ) Detector.addGetWebGLMessage();

// global variables for this script

let OriginalImg,

SegmentImg;

var mouse = new THREE.Vector2();

var raycaster = new THREE.Raycaster();

var mousePressed = false;

init();

animate();

// initialize the page

function init ()

{

let filename = "models/nrrd/columna01.nrrd"; // change your nrrd file

let idDiv = 'original';

OriginalImg = new InitCanvas(idDiv, filename );

OriginalImg.init();

console.log(OriginalImg);

filename = "models/nrrd/columnasegmentado01.nrrd"; // change your nrrd file

idDiv = 'segment';

SegmentImg = new InitCanvas(idDiv, filename );

SegmentImg.init();

}

document.addEventListener( 'mousedown', onDocumentMouseDown, false );

document.addEventListener( 'mouseup', onDocumentMouseUp, false );

function onDocumentMouseDown( event ) {

mousePressed = true;

mouse.x = ( event.clientX / window.innerWidth ) * 2 - 1;

mouse.y = - ( event.clientY / window.innerHeight ) * 2 + 1;

raycaster.setFromCamera( mouse.clone(), OriginalImg.camera );

var objects = raycaster.intersectObjects(OriginalImg.scene.children);

console.log(objects);

}

function onDocumentMouseUp( event ) { mousePressed = false}

function animate() {

requestAnimationFrame( animate );

OriginalImg.animate();

SegmentImg.animate();

}

Here we see the web structure:

I suspect that, because the canvas is offset within the window, the computed points do not correspond to the areas I clicked on.
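That suspicion can be checked with a small helper. The sketch below is an assumption about the cause, not a confirmed fix: `clientToNdc` is a hypothetical name, and `rect` stands for an object with the shape returned by `canvas.getBoundingClientRect()`. It subtracts the canvas position from the click position before normalizing, so the result is relative to the canvas rather than to the window.

```javascript
// Hypothetical helper: converts a click given in viewport coordinates into
// normalized device coordinates (NDC) relative to a single canvas.
// `rect` is assumed to look like the result of getBoundingClientRect().
function clientToNdc(clientX, clientY, rect) {
  const x = ((clientX - rect.left) / rect.width) * 2 - 1;
  const y = -((clientY - rect.top) / rect.height) * 2 + 1;
  return { x, y };
}

// Example values (made up): an 800x600 canvas whose top-left corner
// sits at (100, 50) in the viewport.
const rect = { left: 100, top: 50, width: 800, height: 600 };
const center = clientToNdc(500, 350, rect); // click on the canvas center
const corner = clientToNdc(100, 50, rect);  // click on the top-left corner
console.log(center, corner);
```

In the handler this could be called as `clientToNdc(event.clientX, event.clientY, OriginalImg.renderer.domElement.getBoundingClientRect())`, with the result copied into `mouse` before `raycaster.setFromCamera` is called.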

I have also read:

Debug threejs raycaster mouse coordinates

How do I get the coordinates of a mouse click on a canvas element?

https://threejs.org/docs/#api/core/Raycaster

threejs raycasting does not work

Any help would be appreciated: links to further reading, theoretical suggestions, and code examples.

EDIT:

1) I have opened a new thread on the same topic, with more detailed images, graphs, and logs. Here is the link: ThreeJS, raycaster gets strange coordinates when we log the intersection object

2) I followed the answer provided by @TheJim01. Here is the current code:

logic.js

if (!Detector.webgl) Detector.addGetWebGLMessage();

// global variables for this script

let OriginalImg,

SegmentImg;

var mouse = new THREE.Vector2();

var raycaster = new THREE.Raycaster();

var mousePressed = false;

var clickCount = 0;

init();

animate();

// initialize the page

function init() {

let filename = "models/nrrd/columna01.nrrd"; // change your nrrd file

let idDiv = 'original';

OriginalImg = new InitCanvas(idDiv, filename);

OriginalImg.init();

console.log(OriginalImg);

filename = "models/nrrd/columnasegmentado01.nrrd"; // change your nrrd file

idDiv = 'segment';

SegmentImg = new InitCanvas(idDiv, filename);

SegmentImg.init();

}

let originalCanvas = document.getElementById('original');

originalCanvas.addEventListener('mousedown', onDocumentMouseDown, false);

originalCanvas.addEventListener('mouseup', onDocumentMouseUp, false);

function onDocumentMouseDown(event) {

mousePressed = true;

clickCount++;

mouse.x = ( event.offsetX / window.innerWidth ) * 2 - 1;

console.log('Mouse x position is: ', mouse.x, 'the click number was: ', clickCount);

mouse.y = -( event.offsetY / window.innerHeight ) * 2 + 1;

console.log('Mouse Y position is: ', mouse.y);

raycaster.setFromCamera(mouse.clone(), OriginalImg.camera);

var objects = raycaster.intersectObjects(OriginalImg.scene.children);

console.log(objects);

}

function onDocumentMouseUp(event) {

mousePressed = false

}

function animate() {

requestAnimationFrame(animate);

OriginalImg.animate();

SegmentImg.animate();

}

InitCanvas.js

// this class handles loading and the canvas for an nrrd file

// Using programming based on prototype: https://javascript.info/class

// This class should be improved:

// - Canvas Width and height

InitCanvas = function ( IdDiv, Filename ) {

this.IdDiv = IdDiv;

this.Filename = Filename

}

InitCanvas.prototype = {

constructor: InitCanvas,

init: function() {

this.container = document.getElementById( this.IdDiv );

// this should be changed.

debugger;

this.container.innerHeight = 600;

this.container.innerWidth = 800;

// These statements should be changed to improve the image position

this.camera = new THREE.PerspectiveCamera( 60, this.container.innerWidth / this.container.innerHeight, 0.01, 1e10 );

this.camera.position.z = 300;

let scene = new THREE.Scene();

scene.add( this.camera );

// light

let dirLight = new THREE.DirectionalLight( 0xffffff );

dirLight.position.set( 200, 200, 1000 ).normalize();

this.camera.add( dirLight );

this.camera.add( dirLight.target );

// read file

let loader = new THREE.NRRDLoader();

loader.load( this.Filename , function ( volume ) {

//z plane

let sliceZ = volume.extractSlice('z',Math.floor(volume.RASDimensions[2]/4));

debugger;

this.container.innerWidth = sliceZ.iLength;

this.container.innerHeight = sliceZ.jLength;

scene.add( sliceZ.mesh );

}.bind(this) );

this.scene = scene;

// renderer

this.renderer = new THREE.WebGLRenderer( { alpha: true } );

this.renderer.setPixelRatio( this.container.devicePixelRatio );

debugger;

this.renderer.setSize( this.container.innerWidth, this.container.innerHeight );

// add canvas in container

this.container.appendChild( this.renderer.domElement );

},

animate: function () {

this.renderer.render( this.scene, this.camera );

}

}

index.html

<!DOCTYPE html>

<html lang="en">

<head>

<title>Prototype: three.js without react.js</title>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, user-scalable=no, minimum-scale=1.0, maximum-scale=1.0">

<link rel="stylesheet" href="css/styles.css">

<!-- load the libraries and js -->

<script src="js/libs/three.js"></script>

<script src="js/Volume.js"></script>

<script src="js/VolumeSlice.js"></script>

<script src="js/loaders/NRRDLoader.js"></script>

<script src="js/Detector.js"></script>

<script src="js/libs/stats.min.js"></script>

<script src="js/libs/gunzip.min.js"></script>

<script src="js/libs/dat.gui.min.js"></script>

<script src="js/InitCanvas.js"></script>

</head>

<body>

<div id="info">

<h1>Prototype: three.js without react.js</h1>

</div>

<!-- two canvas -->

<div class="row">

<div class="column" id="original">

</div>

<div class="column" id="segment">

</div>

</div>

<script src="js/logic.js"></script>

</body>

</html>

I have seen that it does indeed work differently. So far I know that the origin of the canvas coordinates is the red point in fullscreen mode, and the green point when the Chrome dev tools are open on the right:

In addition, the zone where the raycaster intersects the ThreeJS model is the red area in fullscreen mode, and the green area when the Mozilla dev tools are open at the bottom:

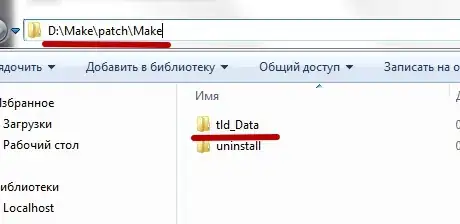

3) Currently the canvas is created inside a parent div with the class column, which is:

The canvas created in it is 800x600.

How could we make the raycaster's intersection zone match the size of the model on the canvas?
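One possible direction, offered as a sketch rather than a confirmed fix: a `div` has no built-in `innerWidth` or `innerHeight` (those belong to `window`; the code above assigns them by hand), so the size could instead be read from `clientWidth` and `clientHeight` and applied to both the renderer and the camera. `syncSize` is a hypothetical helper, and the stand-in objects at the bottom only mimic the three.js members it touches (`camera.aspect`, `camera.updateProjectionMatrix()`, `renderer.setSize()`).

```javascript
// Hypothetical helper: makes the renderer and the camera agree with the
// on-screen size of the container element.
function syncSize(container, camera, renderer) {
  const width = container.clientWidth;
  const height = container.clientHeight;
  camera.aspect = width / height;
  camera.updateProjectionMatrix(); // needed after changing the aspect ratio
  renderer.setSize(width, height);
  return { width, height };
}

// Stand-ins so the sketch runs without a browser. In the page these would be
// the real container div, a THREE.PerspectiveCamera and a THREE.WebGLRenderer.
const fakeContainer = { clientWidth: 800, clientHeight: 600 };
const fakeCamera = { aspect: 1, updateProjectionMatrix() {} };
const fakeRenderer = { size: null, setSize(w, h) { this.size = [w, h]; } };

console.log(syncSize(fakeContainer, fakeCamera, fakeRenderer));
```

This assumes the container has a non-zero CSS size at the moment the helper runs; an empty div with no height would give an invalid aspect ratio.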

5) To try to solve the difficulty myself, I have studied this good SO post: THREE.js Ray Intersect fails by adding div

However, in the post I linked, @WestLangley uses clientX and clientY, whereas here in the answers section @TheJim01 advises using offsetX and offsetY.

Also, as I am a beginner with ThreeJS and have been learning JS for some time, I have some questions:

How is the origin of coordinates handled in the browser?

What is the origin of coordinates in three.js?

How are both related?

Why do most of the articles use this expression?

mouse.x = ( event.offsetX / window.innerWidth ) * 2 - 1;

Why do we need to divide by window.innerWidth? Why do we do * 2 - 1?
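The arithmetic behind that expression can be worked through one axis at a time. `Raycaster.setFromCamera` expects normalized device coordinates (NDC), in which both axes run from -1 to 1 with 0 at the center of the view. The sketch below uses hypothetical helper names and assumes the pixel position is already measured from the left or top edge of the rendered area.

```javascript
// Hypothetical helpers showing each step of the usual expression.
function toNdcX(px, width) {
  const fraction = px / width; // 0 at the left edge, 1 at the right edge
  return fraction * 2 - 1;     // stretch to 0..2, then shift to -1..1
}

function toNdcY(py, height) {
  const fraction = py / height; // 0 at the top edge, 1 at the bottom edge
  // Same mapping with the sign flipped: browser y grows downward,
  // while NDC y grows upward.
  return -(fraction * 2) + 1;
}

const width = 800;
const height = 600;
console.log(toNdcX(0, width), toNdcX(400, width), toNdcX(800, width));
console.log(toNdcY(0, height), toNdcY(300, height), toNdcY(600, height));
```

The left edge, center, and right edge map to -1, 0, and 1 on x; the top edge, center, and bottom edge map to 1, 0, and -1 on y. Dividing by `window.innerWidth` only gives this result when the canvas fills the whole window; otherwise the divisor would be the width of the canvas itself.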

6) I ask all of this because I would like to build a web application that captures the point where the user clicked on the left canvas, changes the color of the same part on the right canvas, and displays some information about it, such as its name and description.

So getting the mouse click position with ThreeJS is important, because it is what lets us change the color of the clicked part on the right canvas.

7) In addition I have also read:

Update Three.js Raycaster After CSS Tranformation

EDIT2: 22/03/18

I have followed the answer provided by @WestLangley here: THREE.js Ray Intersect fails by adding div

It makes the raycaster's intersection zone coincide with the image on the canvas.

So it solves the question in practice.

However, I still do not understand some things, for example the relation between the browser's coordinates and ThreeJS' coordinates.

Here we see that the x coordinate is the same in the browser and in the object intersected by ThreeJS' raycaster, but the y coordinate is different. Why?

Also, I suspect that the browser's origin of coordinates on the canvas is at the center:

Is this correct?

I will show the pieces of code I needed to add to make the raycaster's detection area match the image on the canvas.

First I added this to the CSS:

canvas {

width: 200px;

height: 200px;

margin: 100px;

padding: 0px;

position: static; /* fixed or static */

top: 100px;

left: 100px;

}

Then I added this to logic.js:

function onDocumentMouseDown(event) {

mousePressed = true;

clickCount++;

mouse.x = ( ( event.clientX - OriginalImg.renderer.domElement.offsetLeft ) / OriginalImg.renderer.domElement.clientWidth ) * 2 - 1;

mouse.y = - ( ( event.clientY - OriginalImg.renderer.domElement.offsetTop ) / OriginalImg.renderer.domElement.clientHeight ) * 2 + 1

console.log('Mouse x position is: ', mouse.x, 'the click number was: ', clickCount);

console.log('Mouse Y position is: ', mouse.y);

raycaster.setFromCamera(mouse.clone(), OriginalImg.camera);

var objects = raycaster.intersectObjects(OriginalImg.scene.children);

console.log(objects);

}

As you can see above, I subtract the renderer canvas' offsetLeft and offsetTop from the mouse x and y, and divide by its clientWidth and clientHeight.

In addition, I have also studied how the mouse position is handled in OpenAnatomy:

function onSceneMouseMove(event) {

//check if we are not doing a drag (trackball controls)

if (event.buttons === 0) {

//compute offset due to container position

mouse.x = ( (event.clientX-containerOffset.left) / container.clientWidth ) * 2 - 1;

mouse.y = - ( (event.clientY-containerOffset.top) / container.clientHeight ) * 2 + 1;

needPickupUpdate = true;

}

else {

needPickupUpdate = false;

}

}

Link: https://github.com/mhalle/oabrowser/blob/gh-pages/src/app.js

So we see that they also subtract the left and top offsets and divide by the width and height, but this time using the container's values rather than the renderer's.

I have also studied how they do it in AMI:

function onDoubleClick(event) {

const canvas = event.target.parentElement;

const id = event.target.id;

const mouse = {

x: ((event.clientX - canvas.offsetLeft) / canvas.clientWidth) * 2 - 1,

y: - ((event.clientY - canvas.offsetTop) / canvas.clientHeight) * 2 + 1,

};

Link: https://github.com/FNNDSC/ami/blob/dev/examples/viewers_quadview/viewers_quadview.js

So here, instead of the container or the renderer, they use the offsets of a variable named canvas, which is the parent element of the event target.

In addition, I have studied some official ThreeJS examples. They appear to use a single fullscreen renderer/scene, so they do not show how to handle several canvases and raycasters on the same web page.

function onDocumentMouseMove( event ) {

event.preventDefault();

mouse.x = ( event.clientX / window.innerWidth ) * 2 - 1;

mouse.y = - ( event.clientY / window.innerHeight ) * 2 + 1;

}

Link: https://github.com/mrdoob/three.js/blob/master/examples/webgl_interactive_cubes.html

function onMouseMove( event ) {

mouse.x = ( event.clientX / renderer.domElement.clientWidth ) * 2 - 1;

mouse.y = - ( event.clientY / renderer.domElement.clientHeight ) * 2 + 1;

raycaster.setFromCamera( mouse, camera );

// See if the ray from the camera into the world hits one of our meshes

var intersects = raycaster.intersectObject( mesh );

// Toggle rotation bool for meshes that we clicked

if ( intersects.length > 0 ) {

helper.position.set( 0, 0, 0 );

helper.lookAt( intersects[ 0 ].face.normal );

helper.position.copy( intersects[ 0 ].point );

}

}

Link: https://github.com/mrdoob/three.js/blob/master/examples/webgl_geometry_terrain_raycast.html
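For several canvases on one page, one approach (an assumption on my part, not something taken from these examples) would be to register one listener per canvas and pass in that canvas' own camera and scene, so that every click is normalized against the canvas that received it. `attachPicker` is a hypothetical name, and the stand-in objects at the end only mimic the DOM and three.js members used (`addEventListener`, `getBoundingClientRect`, `setFromCamera`, `intersectObjects`).

```javascript
// Hypothetical helper: wires one canvas to its own camera and scene.
function attachPicker(canvas, camera, scene, raycaster, onHits) {
  canvas.addEventListener('mousedown', function (event) {
    // Measure the click against this canvas, not against the window.
    const rect = canvas.getBoundingClientRect();
    // Plain object for the sketch; in the page a THREE.Vector2 could be used.
    const mouse = {
      x: ((event.clientX - rect.left) / rect.width) * 2 - 1,
      y: -((event.clientY - rect.top) / rect.height) * 2 + 1,
    };
    raycaster.setFromCamera(mouse, camera);
    onHits(raycaster.intersectObjects(scene.children), mouse);
  });
}

// Stand-ins so the sketch runs without a browser or three.js.
const listeners = {};
const fakeCanvas = {
  addEventListener(type, fn) { listeners[type] = fn; },
  getBoundingClientRect() {
    return { left: 400, top: 100, width: 200, height: 200 };
  },
};
const fakeRaycaster = {
  coords: null,
  setFromCamera(coords) { this.coords = coords; },
  intersectObjects(objects) { return objects; }, // pretend everything is hit
};
let lastHits = null;
attachPicker(fakeCanvas, {}, { children: ['slice'] }, fakeRaycaster,
  function (hits) { lastHits = hits; });

// Simulate a click in the middle of the fake canvas.
listeners.mousedown({ clientX: 500, clientY: 200 });
console.log(fakeRaycaster.coords, lastHits);
```

In the page, this would be called once for `OriginalImg` and once for `SegmentImg`, each with its own `renderer.domElement`, `camera`, and `scene`.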

Could you help me please?

Thank you.