I'm using a normal array to supply the normals for my dataset as follows:

```c
glEnableClientState(GL_NORMAL_ARRAY);
glNormalPointer( GL_FLOAT, 0, nArray );
```

My vertices are rendered as follows:

```c
glEnableClientState( GL_VERTEX_ARRAY );
glVertexPointer( 3, GL_FLOAT, 0, vArray );  // 3 floats (x, y, z) per vertex

// iSize indices; each consecutive group of 3 forms one triangle
glDrawElements( GL_TRIANGLES, iSize, GL_UNSIGNED_INT, iArray );

glDisableClientState( GL_VERTEX_ARRAY );
glDisableClientState( GL_NORMAL_ARRAY );
```

For the sake of this example, I'm filling `nArray` with random values as follows (the problem also occurs when the normals are calculated correctly):

```c
for(int i = 0; i < nSize * 3; ++i){
    nArray[i] = randomNumber();
}
```

where `randomNumber()` returns a random float between 0 and 1.

I expect `nArray` to be filled with random floats between 0 and 1, so the shading should vary randomly across the surface I am generating.

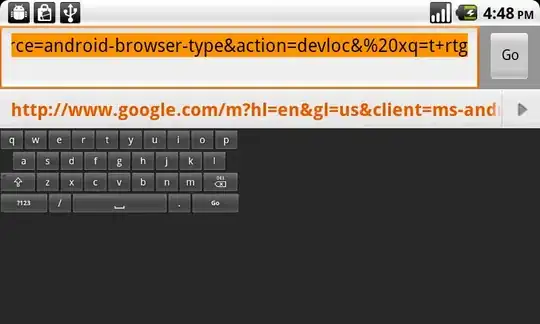

When I render the output, the "normals" are displayed as follows. I do not care that it doesn't calculate a correct normal, but that it seems the normals for two side by side triangles are the same.

Below is a wireframe of the surface to show the separate triangles:

The shading model I am using is `glShadeModel(GL_FLAT);`.

I expected each individual triangle to have a completely different greyscale value, but that is not the case. Any help on where I am going wrong is appreciated.