I am looking for a way to make deserialization in our application faster, so I wrote the simple test below. Judging by the results, `JsonConvert.DeserializeObject` seems to run much faster after the first iteration.

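```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using Microsoft.VisualStudio.TestTools.UnitTesting;
using Newtonsoft.Json;

[TestClass]
public class DeserializationTests // hypothetical class name; the post only showed the method
{
    [TestMethod]
    public void DeserializeObjectTest()
    {
        int count = 5;

        for (int i = 0; i < count; i++)
        {
            // CookieCache is the application's own type (definition not shown).
            CookieCache cookieCache = new CookieCache()
            {
                added = DateTime.UtcNow.AddDays(-1),
                VisitorId = Guid.NewGuid().ToString(),
                campaigns = new List<string>() { "qqq", "www", "eee" },
                target_dt = "3212018",
                updated = DateTime.UtcNow
            };

            // The timed region covers both SerializeObject and DeserializeObject.
            Stopwatch stopwatch = Stopwatch.StartNew();

            string serializeObject = JsonConvert.SerializeObject(cookieCache);
            CookieCache deserializeObject = JsonConvert.DeserializeObject<CookieCache>(serializeObject);

            stopwatch.Stop();

            double stopwatchElapsedMilliseconds = stopwatch.Elapsed.TotalMilliseconds;
            Debug.WriteLine("iteration " + i + ": " + stopwatchElapsedMilliseconds);
        }
    }
}
```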

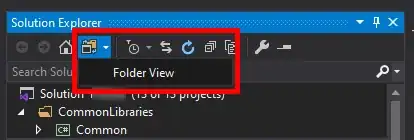

And my results:

I believe I am using `Stopwatch` correctly. So is Json.NET using some sort of internal caching or optimization that speeds up deserialization on subsequent calls?

Since this is a web application, I see similar numbers in my logs (280.6466) for every single web request.

So am I missing something in my test, or is this expected behavior?
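One way to narrow this down is to separate any one-time first-call cost from the steady-state cost: make one untimed warm-up round trip before the loop, then time `SerializeObject` and `DeserializeObject` separately (the test above times both together). The sketch below is a diagnostic variant of the test, not a fix. It assumes the Newtonsoft.Json package, and its `CookieCache` class is a stand-in whose property types are inferred from the initializer above, so it may differ from the real class. The class name `WarmUpTiming` and method `CreateSample` are hypothetical. The comment about what the warm-up absorbs (JIT compilation, Json.NET building and caching its contract for the type) describes the likely one-time work, not something this code measures directly.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using Newtonsoft.Json;

// Stand-in for the application's CookieCache; property types are inferred
// from the test's object initializer and may not match the real class.
public class CookieCache
{
    public DateTime added { get; set; }
    public string VisitorId { get; set; }
    public List<string> campaigns { get; set; }
    public string target_dt { get; set; }
    public DateTime updated { get; set; }
}

public static class WarmUpTiming // hypothetical name
{
    public static CookieCache CreateSample() => new CookieCache
    {
        added = DateTime.UtcNow.AddDays(-1),
        VisitorId = Guid.NewGuid().ToString(),
        campaigns = new List<string> { "qqq", "www", "eee" },
        target_dt = "3212018",
        updated = DateTime.UtcNow
    };

    public static void Main()
    {
        // Untimed warm-up round trip: pays one-time costs (likely JIT compilation
        // and Json.NET resolving the contract for CookieCache) before measuring.
        JsonConvert.DeserializeObject<CookieCache>(JsonConvert.SerializeObject(CreateSample()));

        for (int i = 0; i < 5; i++)
        {
            CookieCache sample = CreateSample();

            // Time serialization on its own.
            Stopwatch sw = Stopwatch.StartNew();
            string json = JsonConvert.SerializeObject(sample);
            double serializeMs = sw.Elapsed.TotalMilliseconds;

            // Restart the same stopwatch and time deserialization on its own.
            sw.Restart();
            CookieCache roundTripped = JsonConvert.DeserializeObject<CookieCache>(json);
            double deserializeMs = sw.Elapsed.TotalMilliseconds;

            Console.WriteLine($"iteration {i}: serialize {serializeMs:F4} ms, deserialize {deserializeMs:F4} ms, same id: {roundTripped.VisitorId == sample.VisitorId}");
        }
    }
}
```

If only the warm-up call is slow and every timed iteration is fast, the large first-iteration number is a one-time startup cost rather than the per-call cost of deserialization.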