I have written the program (below) to:

- read a huge text file as a pandas dataframe,
- then groupby using a specific column value to split the data and store it as a list of dataframes,
- then pipe the data to multiprocessing Pool.map() to process each dataframe in parallel.

The program works well on my small test dataset. But when I pipe in my large data (about 14 GB), memory consumption climbs rapidly until the computer freezes or the job gets killed (on an HPC cluster).

I have added code to clear memory as soon as a variable is no longer needed. I am also closing the pool as soon as it is done. Still, with a 14 GB input I was expecting a memory burden of only about 2*14 GB, but a lot more seems to be going on. I also tried tweaking chunksize, maxtasksperchild, etc., but I see no difference in memory use with either the test file or the large file.
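For reference, a minimal sketch of how those two parameters are passed to the pool. The worker `work` and the toy task list are hypothetical stand-ins, not the real `matrix_to_vcf` or the real data. Note that `Pool.map()` still needs the whole task list in the parent process, so neither setting reduces what the parent holds.

```python
from multiprocessing import Pool


def work(chunk):
    """Toy stand-in for matrix_to_vcf: sum a list of numbers."""
    return sum(chunk)


def run_pool(tasks, processes=3):
    # maxtasksperchild=1 replaces each worker process after one task, so
    # memory that a worker accumulated is released when that process exits.
    with Pool(processes=processes, maxtasksperchild=1) as pool:
        # chunksize=1 hands tasks to workers one at a time, not in batches.
        return pool.map(work, tasks, chunksize=1)


if __name__ == "__main__":
    tasks = [[1, 2, 3], [4, 5], [6]]  # made-up data
    print(run_pool(tasks))
```

These settings mainly affect how long a worker lives and how tasks are batched, which may be why they make little visible difference when most of the memory sits in the parent.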

I think the code needs improvement at this position, where I start multiprocessing:

p = Pool(3) # number of pool to run at once; default at 1

result = p.map(matrix_to_vcf, list(gen_matrix_df_list.values()))

but I am posting the whole code anyway.
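For illustration, a lazily fed variant of that call might look like the sketch below. It is an assumption-laden sketch, not the program's actual method: it assumes the input file is already sorted by its first column (CHROM), it skips pandas and groups raw lines with `itertools.groupby`, and `iter_groups`, `count_rows` and `run` are hypothetical names. `Pool.imap()` consumes the generator incrementally instead of requiring a full list up front.

```python
import itertools
import os
import tempfile
from multiprocessing import Pool


def iter_groups(lines):
    """Yield (chrom, rows) for each run of consecutive lines sharing column 1."""
    keyed = itertools.groupby(lines, key=lambda ln: ln.split("\t", 1)[0])
    for chrom, rows in keyed:
        # Materialise only the current group; earlier groups can be freed.
        yield chrom, list(rows)


def count_rows(group):
    """Toy stand-in for matrix_to_vcf: report the row count per group."""
    chrom, rows = group
    return chrom, len(rows)


def run(path, processes=3):
    with open(path) as handle, Pool(processes) as pool:
        # imap takes groups from the generator as it goes and returns
        # results in input order; list() collects them all at the end.
        return list(pool.imap(count_rows, iter_groups(handle)))


if __name__ == "__main__":
    # Tiny made-up input so the sketch can be run on its own.
    with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as tmp:
        tmp.write("chr1\t1\tA\nchr1\t2\tC\nchr2\t1\tG\n")
    try:
        print(run(tmp.name))
    finally:
        os.remove(tmp.name)
```

Since the worker in the full script converts each dataframe back to text lines anyway, passing lines directly would avoid one round trip, though the collected results still have to fit in the parent's memory.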

Test example: I created a test file ("genome_matrix_final-chr1234-1mb.txt") of up to 250 MB and ran the program. When I check the system monitor I can see that memory consumption increases by about 6 GB. It is not clear to me why a 250 MB file plus some outputs takes so much memory. I have shared that file via Dropbox in case it helps in seeing the real problem. https://www.dropbox.com/sh/coihujii38t5prd/AABDXv8ACGIYczeMtzKBo0eea?dl=0
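One way to check how much of this comes from the dataframe itself is to compare the size of the text with `DataFrame.memory_usage()`. The sketch below uses made-up single-letter data rather than the shared test file, and it assumes 64-bit Python and columns stored with `dtype=object`; it is a possible contributor to look at, not a diagnosis.

```python
import io

import pandas as pd

n_rows, n_cols = 1000, 30

# Synthetic tab-separated matrix of single-letter alleles (made-up data).
row = "\t".join("ACGT"[i % 4] for i in range(n_cols))
text = "\n".join([row] * n_rows) + "\n"
on_disk_bytes = len(text.encode("utf-8"))

# dtype=object forces the classic "array of Python string references" layout.
df = pd.read_csv(io.StringIO(text), sep="\t", header=None, dtype=object)

# deep=False counts only the pointer stored per cell (8 bytes on 64-bit);
# deep=True also adds the size of the string each cell refers to, which
# may overcount when many cells share the same string object.
pointer_bytes = int(df.memory_usage(index=False, deep=False).sum())
deep_bytes = int(df.memory_usage(index=False, deep=True).sum())

print("text size      :", on_disk_bytes)
print("pointers only  :", pointer_bytes)
print("with str sizes :", deep_bytes)
```

With one-character cells, each value costs about two bytes as text (the letter plus a separator) but eight bytes as a pointer alone, before any copies made by groupby, `to_csv()`, `split()` or pickling for the workers are counted.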

Can someone suggest how I can get rid of this problem?

My python script:

#!/home/bin/python3

import pandas as pd
import collections
from multiprocessing import Pool
import io
import time
import resource

print()
print('Checking required modules')
print()

''' change this input file name and/or path as need be '''
genome_matrix_file = "genome_matrix_final-chr1n2-2mb.txt"   # test file 01
genome_matrix_file = "genome_matrix_final-chr1234-1mb.txt"  # test file 02
#genome_matrix_file = "genome_matrix_final.txt"    # large file

def main():
    with open("genome_matrix_header.txt") as header:
        header = header.read().rstrip('\n').split('\t')
        print()

    time01 = time.time()
    print('starting time: ', time01)

    '''load the genome matrix file onto pandas as dataframe.
    This makes it easier for multiprocessing'''
    gen_matrix_df = pd.read_csv(genome_matrix_file, sep='\t', names=header)

    # now, group the dataframe by chromosome/contig - so it can be multiprocessed
    gen_matrix_df = gen_matrix_df.groupby('CHROM')

    # store the split dataframes as list of key, values(pandas dataframe) pairs
    # this list of dataframe will be used while multiprocessing
    gen_matrix_df_list = collections.OrderedDict()
    for chr_, data in gen_matrix_df:
        gen_matrix_df_list[chr_] = data

    # clear memory
    del gen_matrix_df

    '''Now, pipe each dataframe from the list using Pool.map() '''

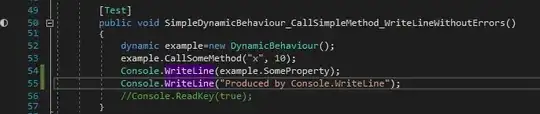

    p = Pool(3)  # number of pool to run at once; default at 1
    result = p.map(matrix_to_vcf, list(gen_matrix_df_list.values()))

    del gen_matrix_df_list  # clear memory

    p.close()
    p.join()

    # concat the results from pool.map() and write it to a file
    result_merged = pd.concat(result)
    del result  # clear memory

    pd.DataFrame.to_csv(result_merged, "matrix_to_haplotype-chr1n2.txt", sep='\t', header=True, index=False)

    print()
    print('completed all process in "%s" sec. ' % (time.time() - time01))
    print('Global maximum memory usage: %.2f (mb)' % current_mem_usage())
    print()

'''function to convert the dataframe from genome matrix to desired output '''
def matrix_to_vcf(matrix_df):

    print()
    time02 = time.time()

    # index position of the samples in genome matrix file
    sample_idx = [{'10a': 33, '10b': 18}, {'13a': 3, '13b': 19},
                  {'14a': 20, '14b': 4}, {'16a': 5, '16b': 21},
                  {'17a': 6, '17b': 22}, {'23a': 7, '23b': 23},
                  {'24a': 8, '24b': 24}, {'25a': 25, '25b': 9},
                  {'26a': 10, '26b': 26}, {'34a': 11, '34b': 27},
                  {'35a': 12, '35b': 28}, {'37a': 13, '37b': 29},
                  {'38a': 14, '38b': 30}, {'3a': 31, '3b': 15},
                  {'8a': 32, '8b': 17}]

    # sample index stored as ordered dictionary
    sample_idx_ord_list = []
    for ids in sample_idx:
        ids = collections.OrderedDict(sorted(ids.items()))
        sample_idx_ord_list.append(ids)

    # for haplotype file
    header = ['contig', 'pos', 'ref', 'alt']

    # adding some suffixes "PI" to available sample names
    for item in sample_idx_ord_list:
        ks_update = ''
        for ks in item.keys():
            ks_update += ks
        header.append(ks_update+'_PI')
        header.append(ks_update+'_PG_al')

    #final variable store the haplotype data
    # write the header lines first
    haplotype_output = '\t'.join(header) + '\n'

    # to store the value of parsed the line and update the "PI", "PG" value for each sample
    updated_line = ''

    # read the piped in data back to text like file
    matrix_df = pd.DataFrame.to_csv(matrix_df, sep='\t', index=False)
    matrix_df = matrix_df.rstrip('\n').split('\n')

    for line in matrix_df:
        if line.startswith('CHROM'):
            continue

        line_split = line.split('\t')
        chr_ = line_split[0]
        ref = line_split[2]
        alt = list(set(line_split[3:]))

        # remove the alleles "N" missing and "ref" from the alt-alleles
        alt_up = list(filter(lambda x: x!='N' and x!=ref, alt))

        # if no alt alleles are found, just continue
        # - i.e : don't write that line in output file
        if len(alt_up) == 0:
            continue

        #print('\nMining data for chromosome/contig "%s" ' %(chr_ ))
        #so, we have data for CHR, POS, REF, ALT so far
        # now, we mine phased genotype for each sample pair (as "PG_al", and also add "PI" tag)
        sample_data_for_vcf = []
        for ids in sample_idx_ord_list:
            sample_data = []
            for key, val in ids.items():
                sample_value = line_split[val]
                sample_data.append(sample_value)

            # now, update the phased state for each sample
            # also replacing the missing allele i.e "N" and "-" with ref-allele
            sample_data = ('|'.join(sample_data)).replace('N', ref).replace('-', ref)
            sample_data_for_vcf.append(str(chr_))
            sample_data_for_vcf.append(sample_data)

        # add data for all the samples in that line, append it with former columns (chrom, pos ..) ..
        # and .. write it to final haplotype file
        sample_data_for_vcf = '\t'.join(sample_data_for_vcf)
        updated_line = '\t'.join(line_split[0:3]) + '\t' + ','.join(alt_up) + \
                       '\t' + sample_data_for_vcf + '\n'
        haplotype_output += updated_line

    del matrix_df  # clear memory

    print('completed haplotype preparation for chromosome/contig "%s" '
          'in "%s" sec. ' %(chr_, time.time()-time02))
    print('\tWorker maximum memory usage: %.2f (mb)' %(current_mem_usage()))

    # return the data back to the pool
    return pd.read_csv(io.StringIO(haplotype_output), sep='\t')

''' to monitor memory '''
def current_mem_usage():
    return resource.getrusage(resource.RUSAGE_SELF).ru_maxrss / 1024.


if __name__ == '__main__':
    main()

Update for bounty hunters:

I have achieved multiprocessing using Pool.map(), but the code is causing a big memory burden (input test file ~300 MB, but the memory burden is about 6 GB). I was expecting a memory burden of 3*300 MB at most.

- Can somebody explain what is causing such a huge memory requirement for such a small file and such a short computation?

- Also, I am trying to take the answer and use it to improve multiprocessing in my large program. So adding any method or module that doesn't change the structure of the computation part (the CPU-bound process) too much should be fine.

- I have included two test files to play with the code.

- The attached code is the full code, so it should work as intended when copied and pasted. Any changes should be limited to improving the multiprocessing steps.