I am converting some code from MATLAB to Python and found that `scipy.interpolate.griddata` gives different results from MATLAB's `scatteredInterpolant`. After much research and experimentation, I found that the interpolation results from `scipy.interpolate.griddata` seem to depend on the size of the data set provided: there appear to be thresholds at which the interpolated value at the same location changes. Is this a bug, or can someone explain the algorithm that would cause this? Here is code that demonstrates the problem:

```python
import numpy as np
from scipy import interpolate

# This code provides a simple example showing that the interpolated value
# for the same location changes depending on the size of the input data set.
# Results of this example show that the interpolated value changes
# at repeat 10 and 300.


def compute_missing_value(data):
    """Compute the missing value example function."""
    # Indices for valid x, y, and z data.
    # In this example x and y are simply the column and row indices.
    valid_rows, valid_cols = np.where(~np.isnan(data))
    valid_data = data[~np.isnan(data)]
    interpolated_value = interpolate.griddata(
        np.array((valid_rows, valid_cols)).T, valid_data, (2, 2), method='linear')
    print('Size=', data.shape, ' Value:', interpolated_value)


# Sample data
data = np.array([[0.2154, 0.1456, 0.1058, 0.1918],
                 [-0.0398, 0.2238, -0.0576, 0.3841],
                 [0.2485, 0.2644, 0.2639, 0.1345],
                 [0.2161, 0.1913, 0.2036, 0.1462],
                 [0.0540, 0.3310, 0.3674, 0.2862]])

# Larger data sets are created by tiling the original data.
# The location of the invalid data to be interpolated is maintained at 2,2
repeat_list = [1, 9, 10, 11, 30, 100, 300]

for repeat in repeat_list:
    new_data = np.tile(data, (1, repeat))
    new_data[2, 2] = np.nan
    compute_missing_value(new_data)
```
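For reference, the `griddata` documentation describes `method='linear'` as tessellating the input points into simplices and interpolating linearly on each simplex; it delegates to `LinearNDInterpolator`, which triangulates the data with Qhull and performs barycentric interpolation on each triangle. The sketch below reproduces that by hand for the (5, 4) case, assuming `griddata` builds the same triangulation as a default `scipy.spatial.Delaunay(points)`. `linear_via_delaunay` is a hypothetical helper, not a SciPy function. It locates the triangle containing (2, 2) and blends that triangle's three vertex values with barycentric weights, so the answer depends only on which triangle is found.

```python
import numpy as np
from scipy.interpolate import griddata
from scipy.spatial import Delaunay


def linear_via_delaunay(points, values, query):
    """Hypothetical helper: locate the Delaunay triangle containing `query`
    and blend its vertex values with barycentric weights."""
    tri = Delaunay(points)
    q = np.asarray(query, dtype=float)
    simplex = int(tri.find_simplex(q[np.newaxis, :])[0])
    if simplex == -1:  # outside the convex hull: griddata returns NaN here too
        return np.nan
    T = tri.transform[simplex]             # rows 0..ndim-1: inverse matrix, last row: offset
    b = T[:-1] @ (q - T[-1])               # first ndim barycentric coordinates
    weights = np.append(b, 1.0 - b.sum())  # last coordinate makes the weights sum to 1
    return float(weights @ values[tri.simplices[simplex]])


data = np.array([[0.2154, 0.1456, 0.1058, 0.1918],
                 [-0.0398, 0.2238, -0.0576, 0.3841],
                 [0.2485, 0.2644, 0.2639, 0.1345],
                 [0.2161, 0.1913, 0.2036, 0.1462],
                 [0.0540, 0.3310, 0.3674, 0.2862]])
data[2, 2] = np.nan

mask = ~np.isnan(data)
points = np.column_stack(np.nonzero(mask)).astype(float)  # (row, col) pairs
values = data[mask]                                       # same row-major order

manual = linear_via_delaunay(points, values, (2, 2))
reference = float(griddata(points, values, (2, 2), method='linear'))
print("manual:", manual, "griddata:", reference)
```

The two numbers should agree, which suggests that a change in the `griddata` result can only come from a different triangle being selected, since the data values around (2, 2) are identical in every tiled array.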

The results are:

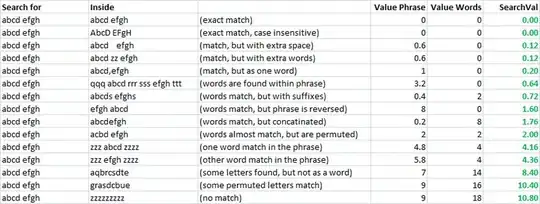

```text
Size= (5, 4) Value: 0.07300000000000001
Size= (5, 36) Value: 0.07300000000000001
Size= (5, 40) Value: 0.19945000000000002
Size= (5, 44) Value: 0.07300000000000001
Size= (5, 120) Value: 0.07300000000000001
Size= (5, 400) Value: 0.07300000000000001
Size= (5, 1200) Value: 0.19945000000000002
```
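Both values are midpoints of a pair of opposite neighbors of (2, 2): 0.073 is the average of `data[1, 2]` = -0.0576 and `data[3, 2]` = 0.2036, and 0.19945 is the average of `data[2, 1]` = 0.2644 and `data[2, 3]` = 0.1345. With (2, 2) removed, those four neighbors all lie at distance 1 from it, so they are cocircular and their Delaunay triangulation is not unique. The diamond they form can be split along either diagonal, and both diagonals pass exactly through (2, 2). The sketch below checks this and reports which diagonal the default `scipy.spatial.Delaunay` picks for each tiled size. It assumes `griddata` builds the same triangulation; `diagonal_used`, `VERTICAL` and `HORIZONTAL` are hypothetical names used only for illustration.

```python
import numpy as np
from scipy.spatial import Delaunay

data = np.array([[0.2154, 0.1456, 0.1058, 0.1918],
                 [-0.0398, 0.2238, -0.0576, 0.3841],
                 [0.2485, 0.2644, 0.2639, 0.1345],
                 [0.2161, 0.1913, 0.2036, 0.1462],
                 [0.0540, 0.3310, 0.3674, 0.2862]])

# Hypothetical labels for the two diagonals of the diamond around (2, 2).
VERTICAL = ((1, 2), (3, 2))    # varies along the row index
HORIZONTAL = ((2, 1), (2, 3))  # varies along the column index

vertical_mid = (data[1, 2] + data[3, 2]) / 2
horizontal_mid = (data[2, 1] + data[2, 3]) / 2
print("vertical midpoint:", vertical_mid)      # approximately 0.073
print("horizontal midpoint:", horizontal_mid)  # approximately 0.19945

# All four neighbors are at distance 1 from (2, 2): they are cocircular.
neighbors = np.array(VERTICAL + HORIZONTAL, dtype=float)
radii = np.linalg.norm(neighbors - [2.0, 2.0], axis=1)
print("distances from (2, 2):", radii)


def diagonal_used(grid):
    """Return the diagonal that is an edge of the triangle containing (2, 2)."""
    mask = ~np.isnan(grid)
    points = np.column_stack(np.nonzero(mask)).astype(float)
    tri = Delaunay(points)
    simplex = int(tri.find_simplex(np.array([[2.0, 2.0]]))[0])
    corners = {tuple(int(c) for c in points[i]) for i in tri.simplices[simplex]}
    if set(VERTICAL) <= corners:
        return VERTICAL
    if set(HORIZONTAL) <= corners:
        return HORIZONTAL
    return None  # not expected for this data


results = {}
for repeat in [1, 9, 10, 11, 30, 100, 300]:
    grid = np.tile(data, (1, repeat))
    grid[2, 2] = np.nan
    results[repeat] = diagonal_used(grid)
    print(repeat, grid.shape, results[repeat])
```

Which diagonal Qhull chooses for a degenerate (cocircular) configuration is an internal detail that can depend on the whole point set, which would be consistent with the value changing only at certain sizes rather than growing or shrinking smoothly.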