I want to implement parallel processing in a yasm program using the POSIX threads (pthread) library.

Code

Here is the most important part of my program.

section .data

pThreadID1 dq 0

pThreadID2 dq 0

MAX: dq 100000000

value: dd 0

section .bss

extern pthread_create

extern pthread_join

section .text

global main

main:

;create the first thread with id = pThreadID1

mov rdi, pThreadID1

mov rsi, NULL

mov rdx, thread1

mov rcx, NULL

call pthread_create

;join the 1st thread

mov rdi, qword [pThreadID1]

mov rsi, NULL

call pthread_join

;create the second thread with id = pThreadID2

mov rdi, pThreadID2

mov rsi, NULL

mov rdx, thread2

mov rcx, NULL

call pthread_create

;join the 2nd thread

mov rdi, qword [pThreadID2]

mov rsi, NULL

call pthread_join

;print value block

where thread1 contains a loop in which value is incremented by one MAX/2 times:

global thread1

thread1:

mov rcx, qword [MAX]

shr rcx, 1

thread1.loop:

mov eax, dword [value]

inc eax

mov dword [value], eax

loop thread1.loop

ret

and thread2 is similar.

NOTE: thread1 and thread2 share the variable value.
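Because both threads load, increment, and store the same value, an increment can be lost whenever the two loop bodies overlap in time. The following is a minimal single-threaded C sketch, not part of the program above, that replays the three instructions of the loop body by hand for two threads. The names struct cpu, step_load, step_inc, step_store, interleaved, and sequential are hypothetical and exist only for this illustration.

```c
#include <stdint.h>

/* Models one thread's private copy of eax. */
struct cpu { uint32_t eax; };

/* mov eax, dword [value] */
static void step_load(struct cpu *c, const uint32_t *mem) { c->eax = *mem; }

/* inc eax */
static void step_inc(struct cpu *c) { c->eax += 1; }

/* mov dword [value], eax */
static void step_store(const struct cpu *c, uint32_t *mem) { *mem = c->eax; }

/* Both threads load before either one stores: one increment is lost. */
uint32_t interleaved(uint32_t start)
{
    uint32_t value = start;
    struct cpu t1, t2;

    step_load(&t1, &value);
    step_load(&t2, &value);   /* t2 sees the same old value as t1 */
    step_inc(&t1);
    step_inc(&t2);
    step_store(&t1, &value);
    step_store(&t2, &value);  /* overwrites t1's result with the same number */
    return value;             /* start + 1 */
}

/* One thread completes all three steps before the other begins. */
uint32_t sequential(uint32_t start)
{
    uint32_t value = start;
    struct cpu t1, t2;

    step_load(&t1, &value);
    step_inc(&t1);
    step_store(&t1, &value);

    step_load(&t2, &value);
    step_inc(&t2);
    step_store(&t2, &value);
    return value;             /* start + 2 */
}
```

Calling interleaved(0) yields 1 while sequential(0) yields 2, which is the kind of lost update that can occur when two threads run the loop on the same variable at once.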

Result

I assemble and link the above program as follows:

yasm -g dwarf2 -f elf64 Parallel.asm -l Parallel.lst

gcc -g Parallel.o -lpthread -o Parallel

Then I use the time command to measure the elapsed execution time:

time ./Parallel

And I get

value: +100000000

real 0m0.482s

user 0m0.472s

sys 0m0.000s

Problem

OK. In the program above I create one thread, wait until it has finished, and only then create the second one. Not the best "threading", is it? So I change the order in the program as follows:

;create thread1

;create thread2

;join thread1

;join thread2

I expect the elapsed time to be shorter in this case, but I get

value: +48634696

real 0m2.403s

user 0m4.772s

sys 0m0.000s
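In this output user (4.772s) is roughly twice real (2.403s). The time command reports real as wall-clock time and user as CPU time summed over all threads, so two threads that each keep a core busy for the whole run produce this pattern. A minimal C sketch of taking both kinds of measurement from inside a program is shown below. It assumes a POSIX system that provides clock_gettime with CLOCK_MONOTONIC and CLOCK_PROCESS_CPUTIME_ID; the names clock_seconds, struct timing, measure, and busy_loop are hypothetical. Note that the process CPU clock counts user and system time together, so it is only an approximation of the user line.

```c
#define _POSIX_C_SOURCE 200809L
#include <time.h>

/* Current reading of the given clock in seconds, or -1.0 on failure. */
double clock_seconds(clockid_t id)
{
    struct timespec ts;
    if (clock_gettime(id, &ts) != 0)
        return -1.0;
    return (double)ts.tv_sec + (double)ts.tv_nsec / 1e9;
}

/* Wall-clock time and process CPU time (all threads) spent in one call. */
struct timing {
    double wall;
    double cpu;
};

/* Runs fn(arg) once and reports how long it took on both clocks. */
struct timing measure(void (*fn)(void *), void *arg)
{
    struct timing t;
    double w0 = clock_seconds(CLOCK_MONOTONIC);
    double c0 = clock_seconds(CLOCK_PROCESS_CPUTIME_ID);

    fn(arg);

    t.wall = clock_seconds(CLOCK_MONOTONIC) - w0;
    t.cpu  = clock_seconds(CLOCK_PROCESS_CPUTIME_ID) - c0;
    return t;
}

/* Placeholder workload used only to exercise measure(). */
void busy_loop(void *arg)
{
    volatile unsigned long x = 0;
    (void)arg;
    for (unsigned long i = 0; i < 1000000UL; i++)
        x = x + 1;
}
```

For a single-threaded workload such as busy_loop the two numbers should be close; for a workload that runs two busy threads, cpu can approach twice wall.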

I understand why value is not equal to MAX, but what I do not understand is why the elapsed time is significantly longer in this case. Am I missing something?

EDIT

I decided to eliminate the overlap between thread1 and thread2 by using a separate variable for each of them and then adding the results. In this case the "parallel" order gives a shorter elapsed time than the previous parallel result, but it is still longer than the "series" order.

Code

Only the changes are shown.

Data

Now there are two variables, one for each thread.

section .data

value1: dd 0

value2: dd 0

Threads

Each thread is responsible for incrementing its own value.

global thread1

thread1:

mov rcx, qword [MAX]

shr rcx, 1

thread1.loop:

mov eax, dword [value1]

inc eax

mov dword [value1], eax

loop thread1.loop

ret

thread2 is similar (replace 1 by 2).

Getting final result

Assuming that the comments stand for the corresponding blocks of code from the Code section at the beginning of the question, the program is as follows.

Parallel order

;create thread1

;create thread2

;join thread1

;join thread2

mov eax, dword [value]

add eax, dword [value1]

add eax, dword [value2]

mov dword [value], eax

value: +100000000

Performance counter stats for './Parallel':

3078.140527 cpu-clock (msec)

1.586070821 seconds time elapsed

Series order

;create thread1

;join thread1

;create thread2

;join thread2

mov eax, dword [value]

add eax, dword [value1]

add eax, dword [value2]

mov dword [value], eax

value: +100000000

Performance counter stats for './Parallel':

508.757321 cpu-clock (msec)

0.509709406 seconds time elapsed
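The two orders above, with one counter per thread and a final sum, can be sketched in C roughly as follows. This is only an illustration under assumptions, not a translation of the exact assembly: run_split, struct job, worker, and the parallel flag are hypothetical names, and the iteration count is passed through the thread argument instead of being read from MAX. The counters are declared next to each other to mirror value1 and value2 in the .data section.

```c
#include <pthread.h>
#include <stdint.h>

/* One counter per thread, mirroring `value1: dd 0` and `value2: dd 0`. */
static volatile uint32_t value1;
static volatile uint32_t value2;

/* What a thread needs to know: which counter it owns and how often to bump it. */
struct job {
    volatile uint32_t *counter;
    uint64_t n;
};

/* Thread body: load, increment, store, repeated n times on its own counter. */
static void *worker(void *arg)
{
    struct job *j = arg;
    for (uint64_t i = 0; i < j->n; i++)
        *j->counter = *j->counter + 1;
    return NULL;
}

/* parallel != 0: create, create, join, join.
 * parallel == 0: create, join, create, join.
 * Returns value1 + value2, or -1 if a thread could not be created. */
int64_t run_split(uint64_t max, int parallel)
{
    pthread_t t1, t2;
    struct job j1 = { &value1, max / 2 };
    struct job j2 = { &value2, max / 2 };

    value1 = 0;
    value2 = 0;

    if (pthread_create(&t1, NULL, worker, &j1) != 0)
        return -1;
    if (!parallel)
        pthread_join(t1, NULL);      /* series: thread1 finishes first */

    if (pthread_create(&t2, NULL, worker, &j2) != 0) {
        if (parallel)
            pthread_join(t1, NULL);  /* do not leave thread1 unjoined */
        return -1;
    }
    if (parallel)
        pthread_join(t1, NULL);      /* parallel: both were running */
    pthread_join(t2, NULL);

    /* Safe to read both counters now that both threads are joined. */
    return (int64_t)value1 + (int64_t)value2;
}
```

Both run_split(max, 1) and run_split(max, 0) return max for an even max, since no increment can be lost when each thread writes only its own counter. Only the elapsed time differs between the two orders.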

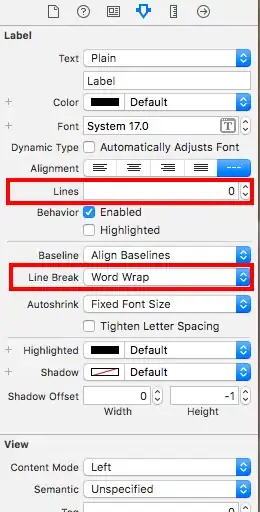

UPDATE

I've drawn a simple graph that shows how the elapsed time depends on the MAX value for the 4 different modes.