I've been studying DDD for a while, and stumbled into design patterns like CQRS, and Event sourcing (ES). These patterns can be used to help achieving some concepts of DDD with less effort.

Then I started to develop a simple software to implement all these concepts. And started to imagine possible failure paths.

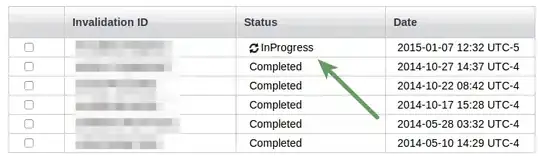

Just to clarify my architecture, the following Image describes one request coming from the front end and reaching the controller I'm the back end (for simplicity I've ignored all filters, binders).

- the actor sends a form with the amount of money he wants to withdraw from one account.

- the controller passes the view model to the application layer where it will be transformed into one command

- the application layer opens one Unit of Work (UOW) map the VM to the command and send the command to the dispatcher.

- the dispatcher finds the corresponding aggregate class that know how to handle the command (account) and asks for the factory for the specific instance of the account.

- the factory creates a new instance of account and request all the events from the event store.

- the event store returns all events of the account.

- the factory sends all events to the aggregate, so that it will be with its internal state correct. And return the instance of the account.

- the dispatcher sends the command to the account so it can be handled.

- the account check if it has enough money to do the withdrawal. And if it has, it sends one new event "MoneyWithdrawnEvent".

- this event is handled by the aggregate (account) altering its internal state.

- the application layer closes the UOW and when it does, the UOW checks all loaded aggregates to check if they have new events to save to the event store. If there are, it sends the events to the repository.

- the repository persists the events to the eventstore.

There are many layers that can be added, for example: caching of aggregates, caching of events, snapshots, etc.

Sometimes ES can be used in parallel to a relational database. So that, when the UOW saves the new events that have happened, it also persists the aggregates to the relational database.

One of the benefits of ES is that it has one central source of truth, the event store. So, even if the models in memory or even in the relational database gets corrupted, we can rebuild the model from the events.

And having this source of truth, we can build other systems that can consume the events in a different way to form a different model.

However, for this to work, we need the source of truth to be clean and not corrupted. Otherwise all these benefits won't exist.

That said, if we consider concurrency in the architecture described in the image, there can be some issues:

- if the actor sends the form twice to the backend in a sort period, and the back end starts two threads (one for each request), then they will call two times the application layer, and start two UOW, and so on. This can cause two events to be stored in the event store.

This problem can be handled in many different places:

The front End can control which user/actor can do what action and how many times.

The Dispatcher can have one cache of all commands that are being handled, and if there a command that refers to the same aggregate (account) it throws an Exception.

The Repository can Create a new instance of the aggregate and run all events from event store just before saving to check if the version is still the same as the one fetched in step 7.

Problems with each solution:

Front End

- The user can bypass this constrains by editing some javascript.

- If there are multiple sessions opened (e.g. different browsers), there would be necessary some static field holding reference to all opened sessions. and it would be necessary to lock some static variable to access this field.

- If there are multiple Servers for the specific action being performed (horizontal scaling), this static field would not work, because it would be necessary to share this across all servers. So, some layer would be necessary (e.g. Redis).

Command Cache

For this solution to work, it would be necessary to lock some static variable of the cache of commands when reading and when writing to it.

If there are multiple Servers for the specific use case of the application layer being performed (horizontal scaling), this static cache would not work, because it would be necessary to share this across all servers. So, some layer would be necessary (e.g. Redis).

Repository Version check

For this solution to work, it would be necessary to lock some static variable before doing the check (version of Database equals the version fetched in step 7) and saving.

If the system was distributed (horizontal scale), it would be necessary to lock the event store. Because, otherwise, both process could pass the check (version of Database equals the version fetched in step 7) and then one saves and then the other saves. And depending on the technology, it is not possible to lock the event store. So, there would be another layer to serialize every access to the event store and add the possibility to lock the store.

This solutions that lock a static variable are somewhat OK, because they are local variables and very fast. However, depending on something like Redis adds some large latencies. And even more if we talk about locking the access to databases (event store). And even more, if this has to be done through one other service.

I would like to know if there is any other possible solution to handle this problem, because this is a major problem (corruption on the event store) and if there is no way around it the whole concept seems to be flawed.

I'm open to any change in the architecture. If for instance, one solution is to add one event Bus, so that everything gets funneled through it, it's fine, but I can't see this solving the problem.

Other point that I'm not familiar with is Kafka. I don’t know if there is some solution that Kafka provides for this problem.