tl;dr You've actually got the right answer! You are simply comparing flops with multiply-accumulates (the unit the paper reports), so you need to divide your flops figure by two.

If you're using Keras, then the code you listed is slightly over-complicating things...

Let model be any compiled Keras model. We can arrive at the flops of the model with the following code.

    import tensorflow as tf
    import keras.backend as K


    def get_flops():
        run_meta = tf.RunMetadata()
        opts = tf.profiler.ProfileOptionBuilder.float_operation()

        # We use the Keras session graph in the call to the profiler.
        flops = tf.profiler.profile(graph=K.get_session().graph,
                                    run_meta=run_meta, cmd='op', options=opts)

        return flops.total_float_ops  # The total "flops" of the model.


    # .... Define your model here ....

    # You need to have compiled your model before calling this.
    print(get_flops())

However, when I ran this on my own example (not MobileNet) on my computer, the printed total_float_ops was 2115, and simply printing the flops variable gave the following breakdown:

[...]

    Mul 1.06k float_ops (100.00%, 49.98%)
    Add 1.06k float_ops (50.02%, 49.93%)
    Sub 2 float_ops (0.09%, 0.09%)

It's pretty clear that the total_float_ops property counts multiplications, additions and subtractions as separate operations.

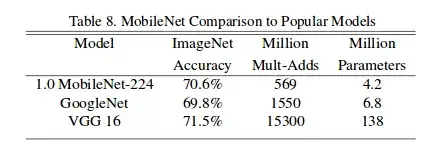

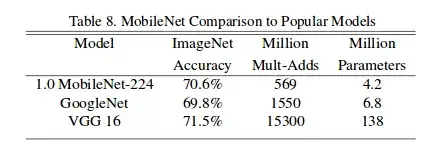

I then looked back at the MobileNets example. Looking through the paper briefly, I identified which MobileNet variant is the default Keras implementation by matching the number of parameters.

The first model in the paper's table matches the parameter count you have (4,253,864), and its Mult-Adds are approximately half of the flops result that you have. Therefore you have the correct answer; you were just mistaking flops for Mult-Adds (aka multiply-accumulates or MACs).

If you want to compute the number of MACs you simply have to divide the result from the above code by two.
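To make the factor of two concrete, here is a minimal sketch in plain Python (no TensorFlow needed). It assumes each multiply-accumulate is counted as one multiplication plus one addition, which is how the Mul/Add breakdown above is read; a real profiler may count a few extra operations, so treat the result as an approximation. The helper names `dense_macs` and `flops_to_macs` and the layer sizes are hypothetical, chosen only for illustration.

```python
def dense_macs(n_inputs: int, n_outputs: int) -> int:
    """Hand-counted MACs for a fully connected layer: one per weight."""
    return n_inputs * n_outputs


def flops_to_macs(total_float_ops: int) -> int:
    """Approximate MACs from a flop count, assuming 1 MAC = 1 mul + 1 add."""
    return total_float_ops // 2


# Hypothetical layer sizes, not taken from any model in this answer.
macs = dense_macs(n_inputs=32, n_outputs=10)

# Assumption: every MAC shows up as two float ops (a multiply and an add).
flops = 2 * macs

print(macs, flops, flops_to_macs(flops))
```

In practice you would pass the value returned by `get_flops()` to something like `flops_to_macs` and compare the result against the Mult-Adds column in the paper.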

Important Notes

Keep the following in mind if you are trying to run the code sample:

- The code sample was written in 2018 and doesn't work with TensorFlow 2. See @driedler's answer for a complete example that is compatible with TensorFlow 2.

- The code sample was originally meant to be run once on a compiled model. For a better example that has no side effects (and can therefore be run multiple times on the same model), see @ch271828n's answer.