I have an EMR cluster with one master node and four worker nodes, each with 4 cores, 16 GB of RAM, and four 80 GB hard disks. My problem is that when I try to train a tree-based model such as Decision Tree, Random Forest, or Gradient Boosting, the training never completes and YARN/Spark reports an out-of-disk-space error.

Here is the code that I tried to run:

    from pyspark.ml.regression import DecisionTreeRegressor
    from pyspark.ml.regression import RandomForestRegressor
    from pyspark.ml.evaluation import RegressionEvaluator
    from pyspark.ml.tuning import ParamGridBuilder, CrossValidator

    rfr = DecisionTreeRegressor(featuresCol="features", labelCol="age")
    cvModel = rfr.fit(age_training_data)

The process always gets stuck at the MapPartitionsRDD map at BaggedPoint.scala step. I ran df -h to examine the disk space. Before the process started, only 1 or 2% of the space was used. Once training started, usage went to 100% on at least two of the hard disks. This happens not only with Decision Tree but also with Random Forest and Gradient Boosting. I was able to run Logistic Regression on the same dataset without any problem.
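For anyone who wants to watch the disks fill up while the job runs, the df -h check can be scripted. The following is a minimal sketch using only the Python standard library; the function names and the commented-out mount points are hypothetical, and the percentage is only roughly comparable to the Use% column of df, which accounts for reserved blocks differently.

```python
import shutil


def disk_usage_percent(path):
    """Return the percentage of the filesystem holding `path` that is in use."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.used / usage.total


def report(mount_points):
    """Print one line per mount point with its current usage."""
    for mount in mount_points:
        print(f"{mount}: {disk_usage_percent(mount):.1f}% used")


# Hypothetical mount points; replace them with the ones `df -h` lists on a worker.
# report(["/mnt/disk1", "/mnt/disk2"])
report(["/"])
```

Calling report in a loop with a short sleep on each worker would show which disks fill up first during the BaggedPoint stage.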

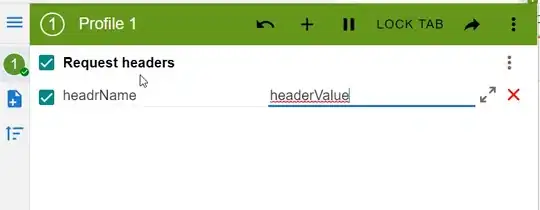

The image above shows the stage where the error happens. I would highly appreciate it if anyone knows the possible cause of this. Thank you!

UPDATE: Here are the settings in spark-defaults.conf:

    spark.history.fs.cleaner.enabled true
    spark.eventLog.enabled true
    spark.driver.extraLibraryPath /usr/lib/hadoop-current/lib/native
    spark.executor.extraLibraryPath /usr/lib/hadoop-current/lib/native
    spark.driver.extraJavaOptions -Dlog4j.ignoreTCL=true
    spark.executor.extraJavaOptions -Dlog4j.ignoreTCL=true
    spark.hadoop.yarn.timeline-service.enabled false
    spark.driver.memory 8g
    spark.yarn.driver.memoryOverhead 7g
    spark.driver.cores 3
    spark.executor.memory 10g
    spark.yarn.executor.memoryOverhead 2048m
    spark.executor.instances 4
    spark.executor.cores 2
    spark.default.parallelism 48
    spark.yarn.max.executor.failures 32
    spark.network.timeout 100000000s
    spark.rpc.askTimeout 10000000s
    spark.executor.heartbeatInterval 100000000s
    spark.ui.view.acls *
    #spark.serializer org.apache.spark.serializer.KryoSerializer
    spark.executor.extraJavaOptions -XX:+UseG1GC
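As a sanity check on these settings, here is a small sketch that parses a subset of the file, flags keys that appear more than once, and adds up the memory each YARN container would request. The helpers parse_conf, duplicate_keys, and to_mb are hypothetical names written for this illustration, and the parser assumes whitespace-separated key/value pairs as in the listing above. My understanding is that when a key is repeated the later entry wins, but that is an assumption worth verifying.

```python
def parse_conf(text):
    """Parse spark-defaults.conf style text into a list of (key, value) pairs."""
    pairs = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split(None, 1)
        pairs.append((parts[0], parts[1] if len(parts) > 1 else ""))
    return pairs


def duplicate_keys(pairs):
    """Return keys that occur more than once, in order of first repetition."""
    seen, dups = set(), []
    for key, _ in pairs:
        if key in seen and key not in dups:
            dups.append(key)
        seen.add(key)
    return dups


def to_mb(size):
    """Convert a size such as '10g' or '2048m' to megabytes."""
    units = {"m": 1, "g": 1024}
    return int(size[:-1]) * units[size[-1].lower()]


# Subset of the settings listed above.
conf_text = """
spark.driver.extraJavaOptions -Dlog4j.ignoreTCL=true
spark.executor.extraJavaOptions -Dlog4j.ignoreTCL=true
spark.driver.memory 8g
spark.yarn.driver.memoryOverhead 7g
spark.executor.memory 10g
spark.yarn.executor.memoryOverhead 2048m
#spark.serializer org.apache.spark.serializer.KryoSerializer
spark.executor.extraJavaOptions -XX:+UseG1GC
"""

pairs = parse_conf(conf_text)
conf = dict(pairs)  # building a dict keeps the last value for a repeated key

executor_mb = to_mb(conf["spark.executor.memory"]) + to_mb(
    conf["spark.yarn.executor.memoryOverhead"]
)
driver_mb = to_mb(conf["spark.driver.memory"]) + to_mb(
    conf["spark.yarn.driver.memoryOverhead"]
)

print("duplicate keys:", duplicate_keys(pairs))
print("executor container MB:", executor_mb)
print("driver container MB:", driver_mb)
```

With the values above, each executor container asks for 12 GB and the driver for 15 GB, against 16 GB of RAM per node, and spark.executor.extraJavaOptions is defined twice.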

As for the data, it has around 120,000,000 records, and the estimated size of the RDD is 1,925,055,624 bytes. I do not know whether this figure is accurate; I followed the approach here to get the estimate.
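A quick way to judge whether that estimate is plausible is to divide it by the record count. The arithmetic below is only a sketch using the two numbers quoted above; the variable names are made up for the illustration.

```python
records = 120_000_000
estimated_bytes = 1_925_055_624

# Average footprint of one record according to the estimate.
bytes_per_record = estimated_bytes / records

# The same estimate expressed in decimal gigabytes and binary gibibytes.
estimated_gb = estimated_bytes / 1e9
estimated_gib = estimated_bytes / 2**30

print(f"{bytes_per_record:.2f} bytes per record")
print(f"{estimated_gb:.2f} GB, {estimated_gib:.2f} GiB")
```

This works out to about 16 bytes per record, which would be small for a row holding a feature vector and a label, so the estimate may well be too low.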

I also found that the space is mostly consumed by temporary data blocks written to the local disks during training, which seems to happen only when I use a tree-based model.
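To see which directories those temporary blocks end up in, the sizes of the subdirectories under a local directory can be measured. The following is a rough standard-library sketch; dir_size_bytes and largest_subdirs are hypothetical helper names, and the path in the usage comment is a placeholder, since the actual location depends on how the YARN and Spark local directories are configured on the cluster.

```python
import os


def dir_size_bytes(root):
    """Sum the sizes of all regular files below `root`."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            try:
                total += os.path.getsize(os.path.join(dirpath, name))
            except OSError:
                pass  # the file may have been removed while walking
    return total


def largest_subdirs(root, top=5):
    """Return up to `top` (size_in_bytes, path) pairs, largest first."""
    sizes = []
    for entry in os.scandir(root):
        if entry.is_dir(follow_symlinks=False):
            sizes.append((dir_size_bytes(entry.path), entry.path))
    return sorted(sizes, reverse=True)[:top]


# Usage, with a placeholder path that must be replaced by a real local directory:
# for size, path in largest_subdirs("/path/to/local/dir"):
#     print(f"{size / 2**30:.2f} GiB  {path}")
```

Running this on a worker while the job is stuck should show whether the growth comes from Spark's block manager directories (which, as far as I know, are named blockmgr-...) or from something else.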