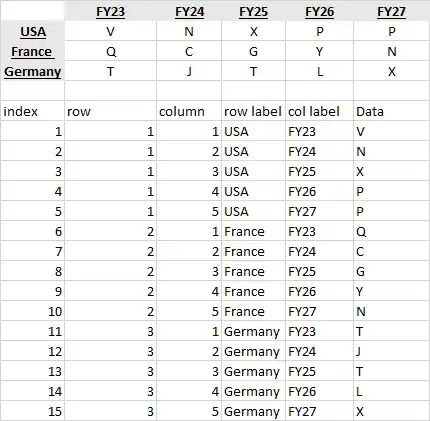

I just started playing with Spark 2+ (version 2.3) and noticed something strange in the Spark UI. I have a list of directories on an HDFS cluster containing 24000 small files in total.

When I run a Spark action on them, Spark 1.5 generates an individual task for each input file, which is what I have been used to until now. As far as I know, each HDFS block (in my case one small file is one block) generates one partition in Spark, and each partition is processed by an individual task.

Also, the command my_dataframe.rdd.getNumPartitions() outputs 24000.
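To make that mental model concrete, here is a small plain-Python sketch (not Spark code) of the "one partition per HDFS block" arithmetic. The function name, the 1 MB file size and the 128 MB block size are assumptions for illustration only; my real file sizes differ.

```python
import math

MB = 1024 * 1024


def partitions_one_per_block(file_sizes, block_size):
    """Count partitions under the 'one HDFS block = one partition' model.

    file_sizes: sizes in bytes of the (non-empty) input files.
    block_size: HDFS block size in bytes (hypothetical value in the example).
    """
    # A file never shares a block with another file, so each file
    # contributes ceil(size / block_size) blocks, i.e. partitions.
    return sum(math.ceil(size / block_size) for size in file_sizes)


# 24000 small files, each smaller than one block (assumed 1 MB each).
small_files = [1 * MB] * 24000
num_partitions = partitions_one_per_block(small_files, 128 * MB)
print(num_partitions)
```

Under this model the partition count for small files is simply the file count, which matches what I see in Spark 1.5.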

Now about Spark 2.3

On the same input, my_dataframe.rdd.getNumPartitions() outputs 1089. The Spark UI also shows 1089 tasks for my Spark action. You can also see that the number of generated jobs is larger in Spark 2.3 than in 1.5.

The code is the same for both Spark versions (I had to change the dataframe, paths and column names slightly, because it's code from work):
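My current guess, which I have not been able to confirm for my cluster, is that the Spark 2.x file sources pack several small files into one partition, controlled by the settings spark.sql.files.maxPartitionBytes (default 128 MB) and spark.sql.files.openCostInBytes (default 4 MB). Below is a rough plain-Python sketch of that packing as I understand it. It is a simplified model, not Spark's actual code; the function name, the 1 MB file size and the parallelism of 200 are assumptions for illustration.

```python
MB = 1024 * 1024


def estimate_file_scan_partitions(file_sizes, default_parallelism,
                                  max_partition_bytes=128 * MB,
                                  open_cost_in_bytes=4 * MB):
    """Estimate the partition count when small files are packed together.

    Simplified model: every file is charged an extra 'open cost', and
    chunks are added to a partition until the next one would overflow
    the target split size.
    """
    # Target split size: bounded by max_partition_bytes, but at least
    # the open cost, and otherwise the data evenly spread over the cores.
    total_bytes = sum(size + open_cost_in_bytes for size in file_sizes)
    bytes_per_core = total_bytes // default_parallelism
    max_split_bytes = min(max_partition_bytes,
                          max(open_cost_in_bytes, bytes_per_core))

    # Files larger than the target are cut into chunks first.
    chunks = []
    for size in file_sizes:
        offset = 0
        while offset < size:
            chunks.append(min(max_split_bytes, size - offset))
            offset += max_split_bytes
    chunks.sort(reverse=True)  # largest chunks are placed first

    partitions = 0
    current_size = 0
    has_chunks = False
    for chunk in chunks:
        # Close the current partition if this chunk would not fit.
        if has_chunks and current_size + chunk > max_split_bytes:
            partitions += 1
            current_size = 0
            has_chunks = False
        current_size += chunk + open_cost_in_bytes
        has_chunks = True
    if has_chunks:
        partitions += 1  # close the last, partially filled partition
    return partitions


# Hypothetical input: 24000 files of 1 MB each, parallelism of 200.
estimated = estimate_file_scan_partitions([1 * MB] * 24000, 200)
print(estimated)
```

With these made-up sizes the model gives far fewer partitions than files, which is the same kind of reduction I observe (24000 files, 1089 partitions). I do not know my exact file size distribution, so I cannot say whether it reproduces 1089 exactly.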

%pyspark
# col is needed to build the filter expression below
from pyspark.sql.functions import col

dataframe = sqlContext.\
    read.\
    parquet(path_to_my_files)

dataframe.rdd.getNumPartitions()

dataframe.\
    where((col("col1") == 21379051) & (col("col2") == 2281643649) & (col("col3") == 229939942)).\
    select("col1", "col2", "col3").\
    show(100, False)

Here is the physical plan generated by

dataframe.where(...).select(...).explain(True)

Spark 1.5

== Physical Plan ==
Filter (((col1 = 21379051) && (col2 = 2281643649)) && (col3 = 229939942))
 Scan ParquetRelation[hdfs://cluster1ns/path_to_file][col1#27,col2#29L,col3#30L]
Code Generation: true

Spark 2.3

== Physical Plan ==
*(1) Project [col1#0, col2#2L, col3#3L]
+- *(1) Filter (((isnotnull(col1#0) && (col1#0 = 21383478)) && (col2 = 2281643641)) && (col3 = 229979603))
   +- *(1) FileScan parquet [col1,col2,col3] Batched: false, Format: Parquet, Location: InMemoryFileIndex[hdfs://cluster1ns/path_to_file..., PartitionFilters: [], PushedFilters: [IsNotNull(col1)], ReadSchema: struct<col1:bigint,col2:bigint,col3:bigint>....

The jobs above were generated from Zeppelin using PySpark. Has anyone else encountered this behavior with Spark 2.3? I have to say that I like the new way of processing multiple small files, but I would also like to understand the internal Spark changes behind it.

I searched the internet and the newest book, "Spark: The Definitive Guide", but didn't find any information about a new way for Spark to generate the physical plan for jobs.

If you have any links or information, I would be interested to read them. Thanks!