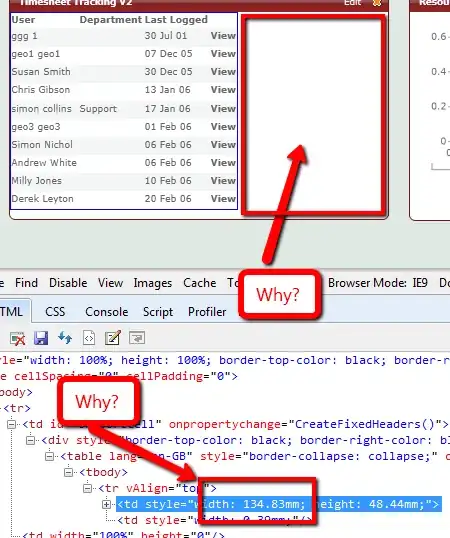

I am trying to detect these price labels text which is always clearly preprocessed. Although it can easily read the text written above it, it fails to detect price values. I am using python bindings pytesseract although it also fails to read from the CLI commands. Most of the time it tries to recognize the part where the price as one or two characters.

Sample 1:

tesseract D:\tesseract\tesseract_test_images\test.png output

And the output of the sample image is this.

je Beutel

13

However if I crop and stretch the price to look like they are seperated and are the same font size, output is just fine.

Processed image(cropped and shrinked price):

je Beutel

1,89

How do get OCR tesseract to work as I intended, as I will be going over a lot of similar images?

Edit: Added more price tags:

sample5 sample6 sample7

sample5 sample6 sample7