The JSON file I use comes from the following code:

```python
import jsonpickle
import tweepy
import pandas as pd

consumer_key = "xxxx"
consumer_secret = "xxxx"
access_key = "xxxx"
access_secret = "xxxx"

# Pass our consumer key and consumer secret to Tweepy's user authentication handler
auth = tweepy.OAuthHandler(consumer_key, consumer_secret)
# Pass our access token and access secret to Tweepy's user authentication handler
auth.set_access_token(access_key, access_secret)
# Create a Twitter API wrapper using Tweepy
api = tweepy.API(auth)

# This is what we are searching for
searchQuery = '#machinelearning OR "machine learning"'
# Maximum number of tweets we want to collect
maxTweets = 1
# The Twitter Search API allows up to 100 tweets per query
tweetsPerQry = 100
# new_tweets = api.search(q=query, count=1)
tweetCount = 0

# Open a text file to save the tweets to
with open('test_ml1.json', 'w') as f:
    # Tell Cursor to use the Search API (api.search), our query,
    # and the maximum number of tweets to return.
    # Note: in Tweepy 4.x this method is named api.search_tweets.
    for tweet in tweepy.Cursor(api.search, q=searchQuery, tweet_mode='extended').items(maxTweets):
        # Write the tweet as one line of JSON and count it
        f.write(jsonpickle.encode(tweet._json, unpicklable=False) + '\n')
        tweetCount += 1

# Display how many tweets we have collected
print("Downloaded {0} tweets".format(tweetCount))
```
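Since `tweet._json` is already a plain Python dict, the standard library's `json.dumps` should produce equivalent one-object-per-line (JSON Lines) output to the `jsonpickle` call. A minimal sketch, using two hand-written stand-in dicts (hypothetical data, not real API output) in place of live tweets and an in-memory buffer in place of the file:

```python
import io
import json

# Hypothetical stand-ins for tweet._json; real tweets come from the Tweepy loop.
fake_tweets = [
    {"id": 1, "full_text": "Intro to #machinelearning",
     "entities": {"user_mentions": [{"screen_name": "NIRAV_88"}]}},
    {"id": 2, "full_text": "No mentions here",
     "entities": {"user_mentions": []}},
]

def write_json_lines(records, f):
    """Write each dict as one JSON object per line and return how many were written."""
    count = 0
    for record in records:
        f.write(json.dumps(record) + "\n")
        count += 1
    return count

buf = io.StringIO()  # stands in for open('test_ml1.json', 'w')
n = write_json_lines(fake_tweets, buf)
print("Wrote {0} tweets".format(n))
```

Dropping `jsonpickle` here is a design choice, not a requirement; it assumes the tweet dicts contain only JSON-serializable values, which is the case for the raw API payload.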

This gives me a JSON file of tweets downloaded through the Twitter API. Because the JSON contains nested dictionaries, I loaded the tweets into a DataFrame with the code below (based on "Loading a file with more than one line of JSON into Python's Pandas"):

```python
from io import StringIO

import pandas as pd

# Read the entire file; each line holds one JSON object (one tweet).
# Open in text mode: in Python 3, 'rb' yields bytes, which ','.join() cannot combine.
with open('your.json', 'r', encoding='utf-8') as f:
    data = f.readlines()

# Remove the trailing "\n" from each line and skip blank lines
data = [line.rstrip() for line in data if line.strip()]

# Each element of 'data' is an individual JSON object. Wrapping them in
# square brackets and separating them with commas turns them into a
# single JSON array, which is itself one large JSON document.
data_json_str = "[" + ",".join(data) + "]"

# Now load it into pandas (recent pandas expects a file-like object, not a raw string)
data_df = pd.read_json(StringIO(data_json_str))
```
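Because the file already has one JSON object per line, `pd.read_json` can likely parse it directly with `lines=True`, with no need to join the lines by hand. A sketch using a small in-memory stand-in for `your.json` (the records are made up for illustration):

```python
import io

import pandas as pd

# Hypothetical JSON Lines content standing in for 'your.json'
jsonl_text = (
    '{"id": 1, "entities": {"user_mentions": [{"screen_name": "NIRAV_88"}]}}\n'
    '{"id": 2, "entities": {"user_mentions": []}}\n'
)

# lines=True parses one JSON object per line into one DataFrame row each
data_df = pd.read_json(io.StringIO(jsonl_text), lines=True)
print(data_df)
```

With a real file the call would be `pd.read_json('your.json', lines=True)`; the nested `entities` dicts stay as Python dicts in an object column.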

I then write the DataFrame to a CSV file with:

```python
data_df.to_csv("output_batch.csv", encoding="utf-8")
```
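One thing to watch with this step: `to_csv` writes each nested dict in `entities` as its Python repr, so reading the CSV back gives plain strings, not dicts. A sketch, with a hypothetical two-row DataFrame and an in-memory buffer instead of `output_batch.csv`, of turning those strings back into dicts with `ast.literal_eval` (`json.loads` would reject the single-quoted repr):

```python
import ast
import io

import pandas as pd

# Hypothetical DataFrame with a nested-dict column, like data_df after read_json
df = pd.DataFrame({
    "id": [1, 2],
    "entities": [
        {"user_mentions": [{"screen_name": "NIRAV_88"}]},
        {"user_mentions": []},
    ],
})

# Round-trip through CSV: each dict is written as its repr string
buf = io.StringIO()
df.to_csv(buf, index=False)
buf.seek(0)
csv_df = pd.read_csv(buf)

# Parse the repr strings back into Python dicts
csv_df["entities"] = csv_df["entities"].apply(ast.literal_eval)
```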

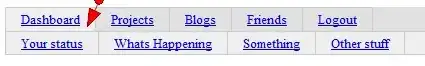

Below is the screenshot of columns in csv:

My question

I want to extract `screen_name` from the `entities` column. In the example shown in the screenshot, that would be "NIRAV_88".
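A minimal sketch of one way to do this with `Series.apply`, assuming the `entities` column holds dicts (as it does straight after `pd.read_json`) and that, as in standard Twitter API v1.1 tweet objects, the screen names live in `entities["user_mentions"]`. The sample rows and the helper `mention_screen_names` are hypothetical:

```python
import pandas as pd

# Hypothetical rows shaped like the 'entities' column of data_df
data_df = pd.DataFrame({
    "id": [1, 2, 3],
    "entities": [
        {"hashtags": [], "user_mentions": [{"screen_name": "NIRAV_88", "id": 42}]},
        {"hashtags": [], "user_mentions": []},
        {"hashtags": [], "user_mentions": [{"screen_name": "a"}, {"screen_name": "b"}]},
    ],
})

def mention_screen_names(entities):
    """Return every user_mentions screen_name in one tweet's entities dict."""
    if not isinstance(entities, dict):
        return []  # e.g. NaN for a row without entities
    return [m.get("screen_name") for m in entities.get("user_mentions", [])]

# One list of screen names per tweet
data_df["screen_names"] = data_df["entities"].apply(mention_screen_names)

# One row per mention; tweets without mentions become NaN rows
mentions = data_df[["id", "screen_names"]].explode("screen_names")
```

A tweet can mention several users, so the helper returns a list rather than a single string. If you start from the CSV instead, the `entities` strings would need parsing into dicts first, for example with `ast.literal_eval`.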