I'm trying to write a Python script that downloads a video stream. I can find the stream URL manually in Chrome DevTools, but I don't know how to automate finding it. The URL is not in the page's HTML source; a JavaScript file sends the request that fetches the video files.
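If the URL (or most of it) appears literally in that JavaScript file, one option is to download the script with `requests` and search its text with a regular expression. The sketch below assumes the stream is an HLS playlist (`.m3u8`) or an MP4 file. The pattern and the name `find_media_urls` are hypothetical and would need adjusting to what DevTools actually shows.

```python
import re

# Hypothetical pattern: absolute http(s) URLs ending in .m3u8 or .mp4,
# optionally followed by a query string. Adjust to the URLs seen in DevTools.
MEDIA_URL_RE = re.compile(
    r"https?://[^\s'\"<>]+?\.(?:m3u8|mp4)\b(?:\?[^\s'\"<>]*)?"
)


def find_media_urls(js_source: str) -> list[str]:
    """Return the unique media URLs found in a JavaScript source string, in order."""
    found: list[str] = []
    for match in MEDIA_URL_RE.finditer(js_source):
        url = match.group(0)
        if url not in found:  # keep first occurrence only
            found.append(url)
    return found
```

Usage would be something like `find_media_urls(requests.get(script_url, timeout=10).text)`, where `script_url` is the script DevTools lists as the request's initiator. This only works when the URL is not assembled at runtime from several pieces or returned by another API call.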

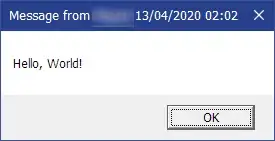

This is what I can see in Google Chrome DevTools.

I tried `requests`, but it only sends requests that I make; it can't intercept the requests that the page's own JavaScript makes. Should I use something that drives a real browser, like Selenium? What is the usual technique for this? Thank you in advance.
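Driving a real browser is one common way to do this, because the browser executes the JavaScript that issues the request. A minimal sketch using Playwright for Python (Selenium combined with a proxy-based tool is an alternative) listens to every request the page makes and keeps those whose URL path looks like a stream. The `.m3u8`/`.mp4` filter, the 10-second wait, and the names `looks_like_stream` and `capture_stream_urls` are assumptions to adapt. It requires `pip install playwright` followed by `playwright install chromium`.

```python
from urllib.parse import urlparse

# Hypothetical filter: treat HLS playlists and MP4 files as "the stream".
STREAM_EXTENSIONS = (".m3u8", ".mp4")


def looks_like_stream(url: str) -> bool:
    """Return True if the URL path (query string ignored) ends with a stream extension."""
    path = urlparse(url).path.lower()
    return path.endswith(STREAM_EXTENSIONS)


def capture_stream_urls(page_url: str, wait_ms: int = 10_000) -> list[str]:
    """Open page_url in headless Chromium and collect stream-like request URLs."""
    # Imported lazily so looks_like_stream() works without Playwright installed.
    from playwright.sync_api import sync_playwright

    found: list[str] = []

    def on_request(request) -> None:
        # Fires for every network request the page makes, including XHR/fetch from scripts.
        if looks_like_stream(request.url) and request.url not in found:
            found.append(request.url)

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        page = browser.new_page()
        page.on("request", on_request)
        page.goto(page_url)
        # Give the page's JavaScript time to start the player and request the stream.
        page.wait_for_timeout(wait_ms)
        browser.close()
    return found
```

A call such as `print(capture_stream_urls("https://example.com/video-page"))` (placeholder URL) prints what was captured. Once the stream URL is known, it is often possible to skip the browser afterwards and request the same endpoint directly with `requests`, copying any headers DevTools shows for that request.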