Like many others before me, I'm trying to run an AWS Lambda function and when I try to test it, I get

"errorMessage": "Unable to import module 'lambda_function'"

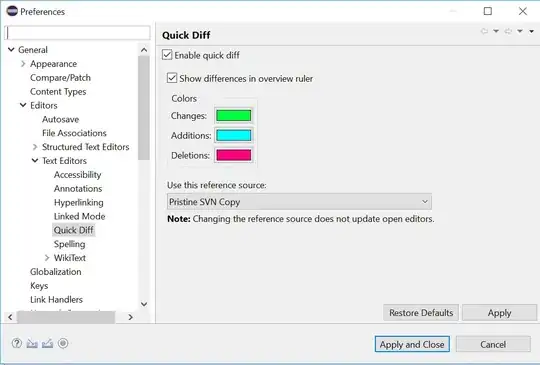

My Handler is set to lambda_function.lambda_handler, and I indeed have a file named lambda_function.py which contains a function called lambda_handler. Here's a screenshot as proof:

Everything was working fine when I was writing snippets of code inline in the included IDE, but when I zipped my full program with all of its dependencies and uploaded it, I got the above error.

I'm using the NumPy and SciPy packages, which are quite large. My zipped directory is 34 MB, and my unzipped directory is 122 MB. I think this should be fine, since the limit is 50 MB for a zipped directory. It appears to upload fine, since I see the message:

The deployment package of your Lambda function "one-shot-image-classification" is too large to enable inline code editing. However, you can still invoke your function right now.

I've seen that some posts solve this by using virtualenv, but I'm not familiar with that technology and I'm not sure how to use it properly.

I've also seen some posts saying that sometimes dependencies have dependencies and I may need to include those, but I'm not sure how to find this out.
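For the dependencies-of-dependencies question, here is a minimal sketch of one way to list what an installed package declares as its requirements. It assumes it is run locally on Python 3.8 or newer (where `importlib.metadata` is in the standard library), not inside the Lambda runtime, and `declared_requirements` is a hypothetical helper name of my own. It only reports what the package metadata declares, so it would not reveal missing compiled libraries.

```python
from importlib import metadata


def declared_requirements(package_name):
    """Return the requirement strings an installed package declares.

    Returns an empty list if the package is not installed or declares
    no requirements. (Hypothetical helper, for illustration only.)
    """
    try:
        # metadata.requires() returns None when nothing is declared.
        return list(metadata.requires(package_name) or [])
    except metadata.PackageNotFoundError:
        return []
```

Calling `declared_requirements("scipy")` on a machine with SciPy installed should include a NumPy requirement, which would then also need to be in the zip.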

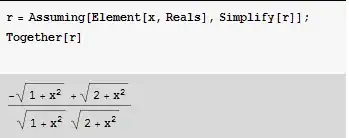

Here's the top portion of lambda_function.py, which should be enough to see the libraries I'm using and that I do indeed have a lambda_handler function:

    import os

    import boto3
    import numpy as np
    from scipy.ndimage import imread
    from scipy.spatial.distance import cdist

    def lambda_handler(event, context):
        s3 = boto3.resource('s3')

Here's a screenshot of the unzipped version of the directory I'm uploading:

I can also post the policy role that my Lambda is using if that could be an issue.

Any insight is much appreciated!

UPDATE:

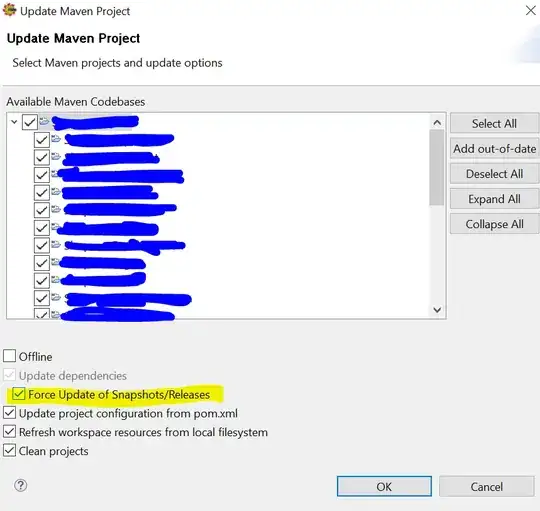

Here's one solution I tried:

1. git clone https://github.com/Miserlou/lambda-packages

2. Create a folder in Documents called new_lambda

3. Copy my lambda_function.py and the numpy folder from lambda-packages into new_lambda, along with the scipy library that I compiled using Docker for AWS as per the article: https://serverlesscode.com/post/scikitlearn-with-amazon-linux-container/

4. Zip the new_lambda folder by right-clicking it and selecting 'compress'

My results:

Unable to import module 'lambda_function': No module named 'lambda_function'

To reiterate, my file is named lambda_function.py and contains a function called lambda_handler, which accepts two arguments (as seen above). This information matches that seen in Handler, also seen above.
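To rule out the archive layout as the cause, here is a small sketch that checks whether lambda_function.py sits at the top level of the zip rather than inside a subfolder. The function name `handler_at_root` and the example path are my own placeholders, and I'm assuming (not certain) that Lambda looks for the handler module at the root of the archive.

```python
import zipfile


def handler_at_root(zip_path, module_file="lambda_function.py"):
    """Return True if module_file is a top-level entry of the zip archive."""
    with zipfile.ZipFile(zip_path) as archive:
        # Entries inside a folder show up as e.g. "new_lambda/lambda_function.py",
        # so an exact match means the file is at the archive root.
        return module_file in archive.namelist()
```

For example, `handler_at_root("new_lambda.zip")` would return False if compressing the folder itself produced entries like new_lambda/lambda_function.py.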

I am using a Mac computer, if that matters.

UPDATE 2:

If I follow the above steps but zip the files by selecting them directly (rather than the folder that contains them), then right-clicking and selecting 'compress', I instead get the error

Unable to import module 'lambda_function': cannot import name 'show_config'
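The difference between the two zipping methods can be reproduced without Finder. Below is a sketch that zips the contents of a folder so that every file is stored relative to that folder, which is what selecting the files directly does. `zip_folder_contents` is a hypothetical helper, and it assumes the output zip is written somewhere outside the folder being zipped.

```python
import zipfile
from pathlib import Path


def zip_folder_contents(folder, zip_path):
    """Zip everything under `folder`, storing paths relative to `folder`.

    The folder name itself is not part of any entry, so a file like
    new_lambda/lambda_function.py is stored as lambda_function.py.
    Assumes zip_path is outside `folder` so the archive does not include itself.
    """
    folder = Path(folder)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(folder.rglob("*")):
            if path.is_file():
                # arcname controls the path stored inside the archive.
                archive.write(path, arcname=path.relative_to(folder).as_posix())
    return zip_path
```

With this layout the module is found, which matches the change in error message: the import now gets as far as NumPy before failing.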

Also, the precompiled lambda-packages say that they are compiled for "at least Python 2.7", but my Lambda runtime is Python 3.6. Could this be an issue?
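One way to look into the version question is to inspect the compiled extension files in the package folder. CPython 3 builds on Linux usually embed a tag such as cpython-36m in the .so filename, while Python 2.7 builds typically have no tag. The sketch below lists the tag found in each filename; the helper names are my own, and an untagged file only means the version can't be told from the name, not that it is compatible.

```python
import re
import sys
from pathlib import Path


def extension_tags(package_dir):
    """Map each .so file under package_dir to its CPython tag, or None if untagged."""
    tags = {}
    for path in Path(package_dir).rglob("*.so"):
        # Matches names like multiarray.cpython-36m-x86_64-linux-gnu.so -> "36".
        match = re.search(r"\.cpython-(\d+)", path.name)
        tags[str(path.relative_to(package_dir))] = match.group(1) if match else None
    return tags


def runtime_tag():
    """Return the running interpreter's version in the same form, e.g. "36"."""
    return f"{sys.version_info.major}{sys.version_info.minor}"
```

Any file whose tag differs from `runtime_tag()` on the target runtime was built for a different Python version.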