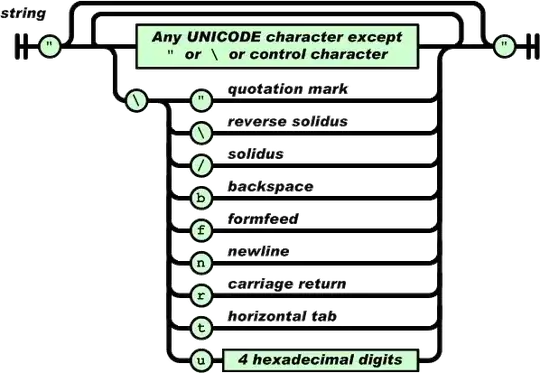

I've got a partitioned folder structure in Azure Data Lake Store containing roughly 6 million JSON files (ranging from a few KB to 2 MB each). I'm trying to extract some fields from these files using Python code in Databricks.

Currently I'm trying the following:

```python
spark.conf.set("dfs.adls.oauth2.access.token.provider.type", "ClientCredential")
spark.conf.set("dfs.adls.oauth2.client.id", "xxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxx")
spark.conf.set("dfs.adls.oauth2.credential", "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx")
spark.conf.set("dfs.adls.oauth2.refresh.url", "https://login.microsoftonline.com/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxx/oauth2/token")
```

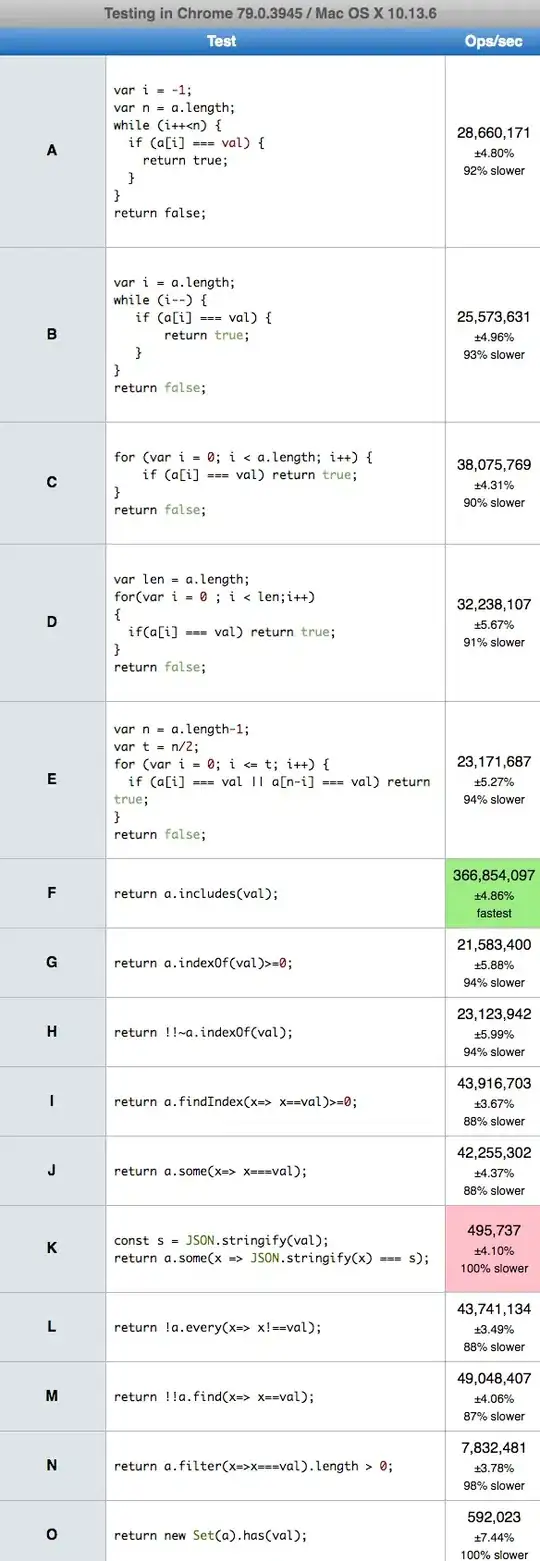

```python
df = spark.read.json("adl://xxxxxxx.azuredatalakestore.net/staging/filetype/category/2017/*/")
```

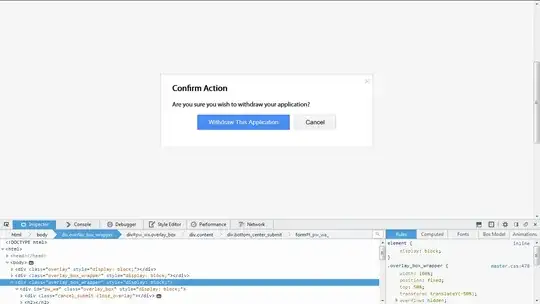

Note that this example reads only a subset of the files, since it points to "staging/filetype/category/2017/". It seems to work, and some jobs start when I run these commands. It's just very slow.

Job 40 indexes all of the subfolders and is relatively fast.

Job 41 checks a set of the files and seems a bit too fast to be true.

Then comes job 42, and that's where the slowness starts. It seems to do the same work as job 41, just much more slowly.

I suspect my problem is similar to the one in this thread, but the speed of job 41 makes me doubt that. Are there faster ways to do this?
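One thing that might be worth trying, as a rough sketch rather than a confirmed fix: when `spark.read.json` is called without a schema, Spark has to read the data to infer one, and I'm assuming (not having verified it) that job 42 is that inference pass. Supplying the schema up front should let Spark skip it. The field names and types below (`id`, `created`, `amount`) and both helper functions are hypothetical placeholders, and passing a DDL string to `schema` assumes a Spark version that accepts one (2.3 or later, as far as I know); on older versions a `StructType` would be needed instead.

```python
# Hypothetical field names and types; replace with the fields actually needed.
FIELDS = {"id": "STRING", "created": "STRING", "amount": "DOUBLE"}


def build_ddl(fields):
    """Turn {"name": "TYPE"} into a DDL-style schema string, e.g. "id STRING, amount DOUBLE"."""
    return ", ".join(f"{name} {dtype}" for name, dtype in fields.items())


def read_json_with_schema(spark, path, fields):
    """Read JSON with an explicit schema so Spark does not have to infer one."""
    return spark.read.schema(build_ddl(fields)).json(path)


# In a Databricks notebook, where `spark` already exists:
# df = read_json_with_schema(
#     spark,
#     "adl://xxxxxxx.azuredatalakestore.net/staging/filetype/category/2017/*/",
#     FIELDS,
# )
```

Fields that are not listed in the schema are simply not extracted, which matches the goal of pulling out only a few fields. This would not help with the file listing itself (job 40), only with the pass that reads file contents before the actual query runs.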