I was interested in preserving one side of a log sampled plot so I came up with this:

(downsample being my first attempt)

def downsample(x, y, target_length=1000, preserve_ends=0):

assert len(x.shape) == 1

assert len(y.shape) == 1

data = np.vstack((x, y))

if preserve_ends > 0:

l, data, r = np.split(data, (preserve_ends, -preserve_ends), axis=1)

interval = int(data.shape[1] / target_length) + 1

data = data[:, ::interval]

if preserve_ends > 0:

data = np.concatenate([l, data, r], axis=1)

return data[0, :], data[1, :]

def geom_ind(stop, num=50):

geo_num = num

ind = np.geomspace(1, stop, dtype=int, num=geo_num)

while len(set(ind)) < num - 1:

geo_num += 1

ind = np.geomspace(1, stop, dtype=int, num=geo_num)

return np.sort(list(set(ind) | {0}))

def log_downsample(x, y, target_length=1000, flip=False):

assert len(x.shape) == 1

assert len(y.shape) == 1

data = np.vstack((x, y))

if flip:

data = np.fliplr(data)

data = data[:, geom_ind(data.shape[1], num=target_length)]

if flip:

data = np.fliplr(data)

return data[0, :], data[1, :]

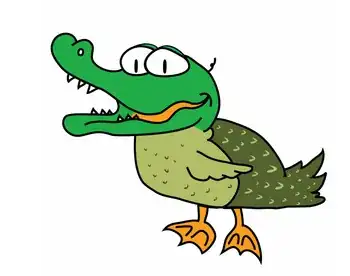

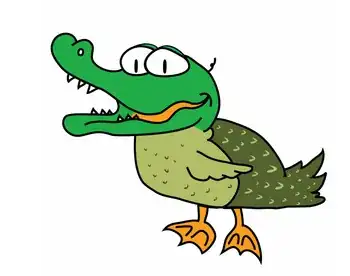

which allowed me to better preserve one side of plot:

newx, newy = downsample(x, y, target_length=1000, preserve_ends=50)

newlogx, newlogy = log_downsample(x, y, target_length=1000)

f = plt.figure()

plt.gca().set_yscale("log")

plt.step(x, y, label="original")

plt.step(newx, newy, label="downsample")

plt.step(newlogx, newlogy, label="log_downsample")

plt.legend()