EDIT:

My question is: rand()%N is considered very bad, whereas the use of integer arithmetic is considered superior, but I cannot see the difference between the two.

People always mention:

low bits are not random in

rand()%N,rand()%Nis very predictable,you can use it for games but not for cryptography

Can someone explain if any of these points are the case here and how to see that?

The idea of the non-randomness of the lower bits is something that should make the PE of the two cases that I show differ, but it's not the case.

I guess many like me would always avoid using rand(), or rand()%N because we've been always taught that it is pretty bad. I was curious to see how "wrong" random integers generated with c rand()%N effectively are. This is also a follow up to Ryan Reich's answer in How to generate a random integer number from within a range.

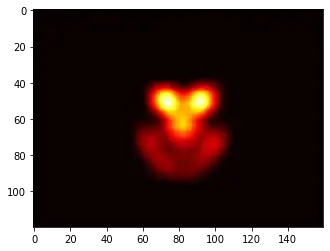

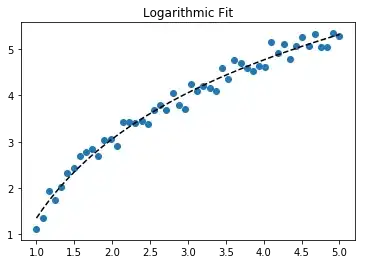

The explanation there sounds very convincing, to be honest; nevertheless, I thought I’d give it a try. So, I compare the distributions in a VERY naive way. I run both random generators for different numbers of samples and domains. I didn't see the point of computing a density instead of histograms, so I just computed histograms and, just by looking, I would say they both look just as uniform. Regarding the other point that was raised, about the actual randomness (despite being uniformly distributed). I — again naively —compute the permutation entropy for these runs, which are the same for both sample sets, which tell us that there's no difference between both regarding the ordering of the occurrence.

So, for many purposes, it seems to me that rand()%N would be just fine, how can we see their flaws?

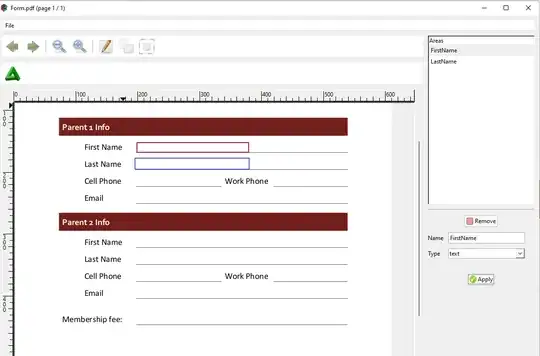

Here I show you a very simple, inefficient and not very elegant (but I think correct) way of computing these samples and get the histograms together with the permutation entropies. I show plots for domains (0,i) with i in {5,10,25,50,100} for different number of samples:

There's not much to see in the code I guess, so I will leave both the C and the matlab code for replication purposes.

#include <stdlib.h>

#include <stdio.h>

#include <time.h>

int main(int argc, char *argv[]){

unsigned long max = atoi(argv[2]);

int samples=atoi(argv[3]);

srand(time(NULL));

if(atoi(argv[1])==1){

for(int i=0;i<samples;++i)

printf("%ld\n",rand()%(max+1));

}else{

for(int i=0;i<samples;++i){

unsigned long

num_bins = (unsigned long) max + 1,

num_rand = (unsigned long) RAND_MAX + 1,

bin_size = num_rand / num_bins,

defect = num_rand % num_bins;

long x;

do {

x = rand();

}

while (num_rand - defect <= (unsigned long)x);

printf("%ld\n",x/bin_size);

}

}

return 0;

}

And here is the Matlab code to plot this and compute the PEs (the recursion for the permutations I took it from: https://www.mathworks.com/matlabcentral/answers/308255-how-to-generate-all-possible-permutations-without-using-the-function-perms-randperm):

system('gcc randomTest.c -o randomTest.exe;');

max = 100;

samples = max*10000;

trials = 200;

system(['./randomTest.exe 1 ' num2str(max) ' ' num2str(samples) ' > file1'])

system(['./randomTest.exe 2 ' num2str(max) ' ' num2str(samples) ' > file2'])

a1=load('file1');

a2=load('file2');

uni = figure(1);

title(['Samples: ' num2str(samples)])

subplot(1,3,1)

h1 = histogram(a1,max+1);

title('rand%(max+1)')

subplot(1,3,2)

h2 = histogram(a2,max+1);

title('Integer arithmetic')

as=[a1,a2];

ns=3:8;

H = nan(numel(ns),size(as,2));

for op=1:size(as,2)

x = as(:,op);

for n=ns

sequenceOcurrence = zeros(1,factorial(n));

sequences = myperms(1:n);

sequencesArrayIdx = sum(sequences.*10.^(size(sequences,2)-1:-1:0),2);

for i=1:numel(x)-n

[~,sequenceOrder] = sort(x(i:i+n-1));

out = sequenceOrder'*10.^(numel(sequenceOrder)-1:-1:0).';

sequenceOcurrence(sequencesArrayIdx == out) = sequenceOcurrence(sequencesArrayIdx == out) + 1;

end

chunks = length(x) - n + 1;

ps = sequenceOcurrence/chunks;

hh = sum(ps(logical(ps)).*log2(ps(logical(ps))));

H(n,op) = hh/log2(factorial(n));

end

end

subplot(1,3,3)

plot(ns,H(ns,:),'--*','linewidth',2)

ylabel('PE')

xlabel('Sequence length')

filename = ['all_' num2str(max) '_' num2str(samples) ];

export_fig(filename)