I found the following code in Chapter 7, Section 1 of Deep Learning with Python:

    from keras.models import Model
    from keras import layers
    from keras import Input

    text_vocabulary_size = 10000
    question_vocabulary_size = 10000
    answer_vocabulary_size = 500

    # Our text input is a variable-length sequence of integers.
    # Note that we can optionally name our inputs!
    text_input = Input(shape=(None,), dtype='int32', name='text')

    # Which we embed into a sequence of vectors of size 64
    # (Embedding takes the vocabulary size first, then the vector size)
    embedded_text = layers.Embedding(text_vocabulary_size, 64)(text_input)

    # Which we encoded in a single vector via a LSTM
    encoded_text = layers.LSTM(32)(embedded_text)

    # Same process (with different layer instances) for the question
    question_input = Input(shape=(None,), dtype='int32', name='question')
    embedded_question = layers.Embedding(question_vocabulary_size, 32)(question_input)
    encoded_question = layers.LSTM(16)(embedded_question)

    # We then concatenate the encoded question and encoded text
    concatenated = layers.concatenate([encoded_text, encoded_question], axis=-1)

    # And we add a softmax classifier on top
    answer = layers.Dense(answer_vocabulary_size, activation='softmax')(concatenated)

    # At model instantiation, we specify the two inputs and the output:
    model = Model([text_input, question_input], answer)
    model.compile(optimizer='rmsprop',
                  loss='categorical_crossentropy',
                  metrics=['acc'])

As you can see, the model's inputs carry no information about the shape of the raw data, so the output of the Embedding layer (which is the input to the LSTM) is a variable-length sequence.
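To make concrete what I mean by "variable length", here is a minimal NumPy sketch of an embedding lookup. It is not Keras itself, only an illustration under my own assumptions: `embedding_table`, `short_text` and `long_text` are made-up names and values, and the table is filled with random numbers rather than learned weights.

```python
import numpy as np

# Hypothetical stand-in for an embedding weight matrix:
# one 64-dimensional row per token in a 10000-word vocabulary.
text_vocabulary_size = 10000
embedding_dim = 64
rng = np.random.default_rng(0)
embedding_table = rng.normal(size=(text_vocabulary_size, embedding_dim))

# Two made-up token sequences of different lengths.
short_text = np.array([12, 7, 431])
long_text = np.array([5, 99, 2048, 17, 3, 860, 41])

# An embedding lookup replaces each integer token with its row of the table,
# so the result has one 64-dimensional vector per token.
embedded_short = embedding_table[short_text]
embedded_long = embedding_table[long_text]

print(embedded_short.shape)  # (3, 64)
print(embedded_long.shape)   # (7, 64)
```

The vector size is fixed at 64, but the first dimension follows the length of each input sequence, and that is the part I don't understand how the LSTM handles.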

So I want to know:

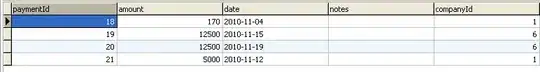

- in this model, how keras to determine the number of lstm_unit in LSTM layer

- how to deal with variable length sequence

Additional information: in order to explain what lstm_unit is (I don't know how to call it,so just show it image):