Instead of fine-tuning multiple hyperparameters, is there an approach or method for analyzing the following situation?

I am using the pre-trained SSD-InceptionV2 model from Google's model zoo and training it on the KITTI dataset for vehicle detection.

However, I don't understand why the trained model detects no vehicles in the first image but detects two vehicles in the second image.

For example, is this caused by the scales/aspect ratios of the default boxes, or by the feature extractor?
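One way to separate those two causes without retuning anything is to check whether each ground-truth vehicle can be matched by any default box at all. The sketch below is a rough approximation, not the TF Object Detection API's anchor generator. It assumes SSD-style default boxes whose scales are spaced linearly across feature maps (the formula from the SSD paper), and it uses placeholder values for `min_scale`, `max_scale`, `num_layers`, and `aspect_ratios` that you would replace with the values in your own pipeline config. All helper names are hypothetical. Boxes are `(width, height)` in normalized image coordinates, assuming the image is resized to the network input without padding. Because it compares shapes with centers aligned, the IoU reported is an upper bound: a box that falls below the matching threshold here cannot reach it with any default box of those shapes, wherever that box sits on the grid.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float]  # (width, height), normalized to [0, 1]


def ssd_anchor_shapes(min_scale: float = 0.2, max_scale: float = 0.95, num_layers: int = 6,
                      aspect_ratios: Sequence[float] = (1.0, 2.0, 0.5, 3.0, 1.0 / 3.0)) -> List[Box]:
    """Default-box shapes for each feature map; placeholder values, not read from any config."""
    shapes = []
    for k in range(num_layers):
        # Scales are spaced linearly between min_scale and max_scale (SSD paper formula).
        scale = min_scale + (max_scale - min_scale) * k / (num_layers - 1)
        for ar in aspect_ratios:
            # ar = width / height, keeping the box area equal to scale**2.
            shapes.append((scale * math.sqrt(ar), scale / math.sqrt(ar)))
    return shapes


def centered_iou(a: Box, b: Box) -> float:
    """IoU of two boxes sharing a center, i.e. the best IoU over all relative positions."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def best_anchor_iou(gt: Box, anchors: Sequence[Box]) -> float:
    return max(centered_iou(gt, anchor) for anchor in anchors)


def unmatched_boxes(gt_boxes: Sequence[Box], anchors: Sequence[Box],
                    threshold: float = 0.5) -> List[Box]:
    """Ground-truth boxes that no default-box shape can reach at the matching threshold."""
    return [gt for gt in gt_boxes if best_anchor_iou(gt, anchors) < threshold]


# Hypothetical example: made-up pixel boxes in a roughly KITTI-sized 1242x375 image.
img_w, img_h = 1242, 375
gt_pixels = [(40, 30), (400, 200)]  # (width, height) in pixels
gt_norm = [(w / img_w, h / img_h) for w, h in gt_pixels]
anchors = ssd_anchor_shapes()
for box in gt_norm:
    print(f"box {box}: best IoU {best_anchor_iou(box, anchors):.3f}")
print("unmatched:", unmatched_boxes(gt_norm, anchors))
```

If many of the missed vehicles in the first image turn up as unmatched (typically small or distant ones), the default-box configuration is a plausible suspect; if they match comfortably, the feature extractor or the classification scores are more likely worth examining.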

========== Edit ==========

For debugging, I mean approaches such as the following.

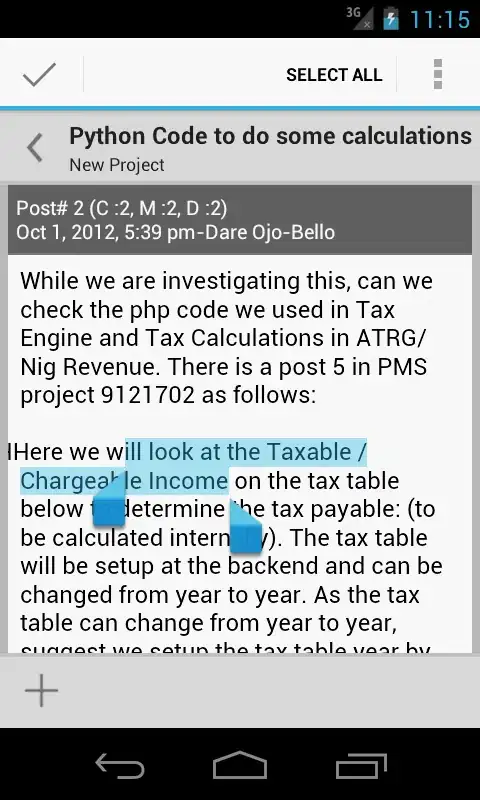

Showing the weight histograms, as in this discussion.
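For the weight-histogram route, a rough check is to compare each layer of the fine-tuned checkpoint against the pre-trained one: how far its weights moved, and what their distribution now looks like. The sketch below uses only the standard library and takes plain dicts of flattened weights; the layer names and numbers are made up, and the helper functions are hypothetical. With TensorFlow, the arrays could be read with `tf.train.load_checkpoint(path).get_tensor(name)` and flattened, and TensorBoard can plot the same distributions via `tf.summary.histogram`.

```python
import math
from typing import Dict, List, Sequence


def histogram(values: Sequence[float], num_bins: int = 10) -> List[int]:
    """Count values into equal-width bins spanning [min(values), max(values)]."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / num_bins or 1.0  # constant tensor: avoid dividing by zero
    counts = [0] * num_bins
    for v in values:
        # Clamp so that v == hi lands in the last bin instead of one past it.
        counts[min(int((v - lo) / width), num_bins - 1)] += 1
    return counts


def relative_change(before: Sequence[float], after: Sequence[float]) -> float:
    """||after - before|| / ||before||, a scale-free measure of how far a layer moved."""
    diff = math.sqrt(sum((a - b) ** 2 for a, b in zip(after, before)))
    norm = math.sqrt(sum(b * b for b in before))
    if norm == 0:
        return 0.0 if diff == 0 else float("inf")
    return diff / norm


def summarize(pretrained: Dict[str, Sequence[float]],
              finetuned: Dict[str, Sequence[float]]) -> None:
    for name, before in pretrained.items():
        after = finetuned[name]
        print(f"{name}: relative change {relative_change(before, after):.3f}, "
              f"histogram {histogram(after, num_bins=5)}")


# Made-up weights for two hypothetical layers.
pretrained = {"extractor_conv": [0.1, -0.2, 0.3, -0.4], "box_head": [0.05, -0.05, 0.02, 0.0]}
finetuned = {"extractor_conv": [0.1, -0.2, 0.31, -0.4], "box_head": [0.4, -0.3, 0.2, 0.1]}
summarize(pretrained, finetuned)
```

One heuristic reading: if the feature-extractor layers barely moved while the box-predictor layers changed a lot, fine-tuning mainly adapted the detection heads, and the features are still largely those learned on the pre-training data.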

Or:

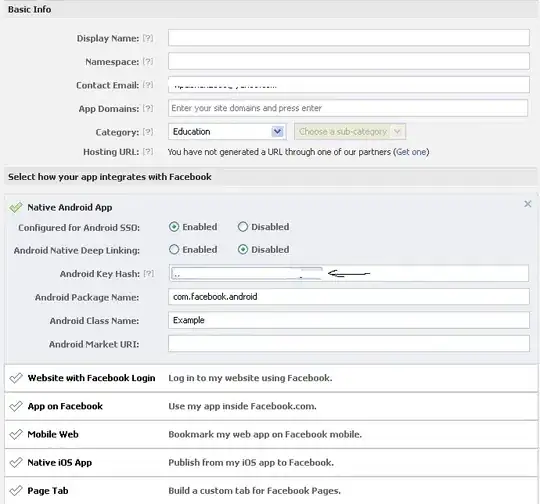

Visualizing the higher-level layers, as in *Visualizing and Understanding Convolutional Networks*.
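The deconvnet projections in *Visualizing and Understanding Convolutional Networks* need hooks into the network's internals. A simpler, model-agnostic probe from the same paper is occlusion sensitivity: slide a gray square over the image and record how much a score drops at each position. Below is a minimal standard-library sketch; `score_fn` is a hypothetical stand-in for whatever scalar you care about (for example, the highest vehicle score the detector returns on the first image), and the image is a toy grayscale grid rather than real input. If occluding the vehicles in the first image barely changes that score, that may suggest the network is not responding to them at all.

```python
from typing import Callable, List

Image = List[List[float]]  # grayscale, row-major


def occlusion_map(image: Image, score_fn: Callable[[Image], float],
                  patch: int = 2, stride: int = 1, fill: float = 0.5) -> List[List[float]]:
    """Score drop when a patch x patch square at each position is replaced by `fill`."""
    height, width = len(image), len(image[0])
    base = score_fn(image)
    drops = []
    for top in range(0, height - patch + 1, stride):
        row = []
        for left in range(0, width - patch + 1, stride):
            occluded = [r[:] for r in image]  # copy rows so the input is left untouched
            for y in range(top, top + patch):
                for x in range(left, left + patch):
                    occluded[y][x] = fill
            row.append(base - score_fn(occluded))
        drops.append(row)
    return drops


# Toy example: a bright 2x2 "vehicle" in the top-left corner, and a hypothetical
# score function that just averages that region (standing in for a detector score).
toy = [[1, 1, 0, 0],
       [1, 1, 0, 0],
       [0, 0, 0, 0],
       [0, 0, 0, 0]]


def toy_score(img: Image) -> float:
    return (img[0][0] + img[0][1] + img[1][0] + img[1][1]) / 4


for row in occlusion_map(toy, toy_score):
    print(row)
```

The largest drops cluster where the "vehicle" sits; on a real detector, you would hope to see the same pattern over the vehicles the model misses.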

Thank you for taking the time to look at my question.