I am trying to insert multiple items into a hashtable and measure the insertion times in milliseconds. Basically, it works like this (this function is a member of my hashtable class):

    double benchmark(int amountOfInsertions){
        int valueToInsert;
        timeval tv_timeStart, tv_timeEnd;
        double totalTime = 0;
        double db_timeStart, db_timeEnd;

        for (int i = 0; i < amountOfInsertions; i++){
            valueToInsert = generateRandomVariable();

            gettimeofday(&tv_timeStart, NULL);
            insert(valueToInsert);
            gettimeofday(&tv_timeEnd, NULL);

            db_timeStart = tv_timeStart.tv_sec*1000 + tv_timeStart.tv_usec/1000.0;
            db_timeEnd = tv_timeEnd.tv_sec*1000 + tv_timeEnd.tv_usec/1000.0;

            totalTime += (db_timeEnd - db_timeStart);
        }

        return totalTime;
    }

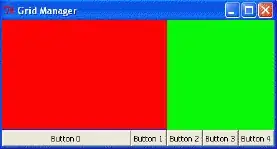

The problem is that the insertion times used to look like this, clearly increasing the more items I inserted:

But now the insertion times alternate between a few fixed values (roughly multiples of 15.625 ms), which makes the results extremely inaccurate:

And it just started happening all of a sudden, even with old versions of my code that I know output correct times. Is it a particular problem with gettimeofday()? If not, what could it be?
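One way to check whether the clock itself is responsible is to measure the smallest step it can report. The following is only a minimal sketch, not part of my original code: `smallestClockStepMs` is a hypothetical helper name, and it assumes `std::chrono::steady_clock` is the clock under test. It reads the clock repeatedly until the value changes and keeps the smallest change seen.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <limits>

// Hypothetical helper (not from the original code): returns the smallest
// nonzero difference, in milliseconds, observed between two consecutive
// readings of std::chrono::steady_clock.
double smallestClockStepMs(int samples) {
    assert(samples > 0);
    using clock = std::chrono::steady_clock;

    double smallest = std::numeric_limits<double>::infinity();
    for (int i = 0; i < samples; i++) {
        clock::time_point start = clock::now();
        clock::time_point next = clock::now();

        // Spin until the clock reports a different value.
        while (next == start) {
            next = clock::now();
        }

        double stepMs =
            std::chrono::duration<double, std::milli>(next - start).count();
        smallest = std::min(smallest, stepMs);
    }
    return smallest;
}
```

Calling `smallestClockStepMs(20)` and printing the result should give a value far below a millisecond on a fine-grained clock. A result close to 15.625 would suggest that the clock, rather than `insert()`, produces the repeating values.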

This problem is so mysterious to me that I even wonder whether this is the right place or the right way to ask about it.

UPDATE: I've also tried with clock() and std::chrono::steady_clock, as well as measuring the time of the whole loop instead of each individual insertion (example below), and still got the same behaviour:

    double benchmark(int amountOfInsertions){
        int valueToInsert;
        double totalTime = 0;

        steady_clock::time_point t1 = steady_clock::now();

        for (int i = 0; i < amountOfInsertions; i++){
            valueToInsert = generateRandomVariable();
            insert(valueToInsert);
        }

        steady_clock::time_point t2 = steady_clock::now();

        duration<double> time_span = duration_cast<duration<double>>(t2 - t1);
        totalTime = time_span.count()*1000;

        return totalTime;
    }
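For reference, 15.625 ms is exactly 1000/64 ms, so the values described above are what a clock that advances only 64 times per second would produce. This is only a possible explanation, not something I have confirmed. The sketch below is a simplified model under that assumption: `quantizeToTick` is a hypothetical name, and a real clock does not necessarily round down in exactly this way.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical model (an assumption, not a real clock API): rounds a true
// duration in milliseconds down to the nearest multiple of the tick length.
double quantizeToTick(double trueMs, double tickMs) {
    assert(tickMs > 0.0);
    return std::floor(trueMs / tickMs) * tickMs;
}
```

Under this model, with `tickMs = 1000.0 / 64`, a true duration of 20 ms would be reported as 15.625, one of 40 ms as 31.25, and anything shorter than one tick as 0, so durations that differ by less than a tick can become indistinguishable.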