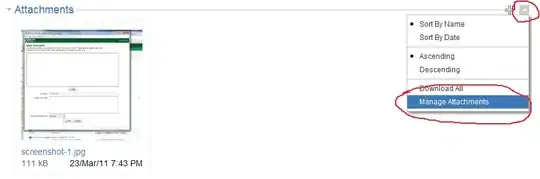

> According to them, double and long double are identical in size and range (see the screenshot below). This contradicts these specs:

There's no contradiction here. If you read the standard and the linked article carefully, you'll see that the C standard specifies the minimum ranges of the types, not fixed sizes for them. Also note that the wiki link above is for C and not C++. They're very different languages. Fortunately they both agree on these size requirements.

That allows for flexibility because C and C++ have always been designed for portability. The main philosophy is "you don't pay for what you don't use", so each compiler implementation chooses what's best and most efficient for its specific architecture. The standard can't force int to always be 32 bits wide. For example, on an 18-bit architecture int will have 18 bits instead of an awkward 32-bit size, and 16-bit CPUs won't have to waste cycles supporting a 32-bit int if int is defined to be a 16-bit type.

That's why C and C++ historically allowed 1's complement and sign-magnitude for signed integer types instead of only 2's complement (2's complement only became mandatory in C++20 and C23). Floating-point types also aren't mandated to have fixed sizes or to be binary, so implementations can use decimal floating-point types. In fact IEEE-754 is not even required, because there were computers that used other floating-point formats. See Do any real-world CPUs not use IEEE 754?

So reading the ISO/IEC 9899:201x C standard, section 5.2.4.2.1 (Sizes of integer types <limits.h>), we know that:

> The values given below shall be replaced by constant expressions suitable for use in #if preprocessing directives. […] Their implementation-defined values shall be equal or greater in magnitude (absolute value) to those shown, with the same sign

The value corresponding to INT_MAX is 32767, which means that INT_MAX >= 32767 in any conforming implementation. The same thing applies to floating-point types in section 5.2.4.2.2 (Characteristics of floating types <float.h>).

What you read in the standard means:

- precisionOf(float) ⩽ precisionOf(double) ⩽ precisionOf(long double) and

- setOfValues(float) ⊆ setOfValues(double) ⊆ setOfValues(long double)

It's very clear and not vague at all. In fact, you're only guaranteed to have:

- CHAR_BIT ⩾ 8

- sizeof(char) ⩽ sizeof(short) ⩽ sizeof(int) ⩽ sizeof(long) ⩽ sizeof(long long)

- sizeof(float) ⩽ sizeof(double) ⩽ sizeof(long double)

- FLT_DECIMAL_DIG ⩾ 6

- LDBL_DECIMAL_DIG ⩾ DBL_DECIMAL_DIG ⩾ 10

So double is allowed to have the same size and precision as long double. In fact it's common for them to be the same type on non-x86 platforms, because those platforms don't have a hardware extended-precision type like x86 does. Recently many implementations have switched to a software-based IEEE-754 quadruple-precision format for long double, but of course it's a lot slower.

Because there are many possible formats for long double, there are also various compiler options for choosing its size, for people who don't need the extra precision or don't want the poor performance of a software implementation. For example:

- GCC for x86 has -mlong-double-64, -mlong-double-80 and -mlong-double-128, depending on whether you want a 64-bit (i.e. same as double), 80-bit (extended precision) or 128-bit (quadruple-precision) long double. Similarly there are the -m96bit-long-double and -m128bit-long-double options, which store the 80-bit type in 12 or 16 bytes, if you want to trade speed for 25% less memory usage

- GCC for PowerPC has -mabi=ibmlongdouble and -mabi=ieeelongdouble, for a double-double implementation or the standard quadruple-precision format respectively. The latter has a wider range and slightly better precision but is much slower

> C++ has been around for a long time. Why is there still no solid common standard for this kind of stuff? Is it intentional for the sake of flexibility -- everyone decides for themselves what these data types will be?

Exactly. As I said above, it's intentional for the sake of flexibility. You don't pay in performance and memory usage when you don't need precision higher than double. The standard committee cares about every possible architecture out there, including some possibly dead ones.¹

In fact, allowing a higher-precision long double sometimes leads to unexpected results when FLT_EVAL_METHOD = 2, because float and double operations are then also done in the higher precision, so the same expression may give different results at different points in the program. Besides, it's impossible to vectorize the odd extended-precision long double math,² which results in even worse performance. Therefore, even on x86, MS's cl.exe compiler completely disables the use of 80-bit long double.

See also

1 See

2 See