I've used a good few programming languages over the years, and I'm an armchair linguist and a contributor to Wiktionary. I've been writing some of my own tools to look things up on Wiktionary from the command line, but I've run into a surprising problem.

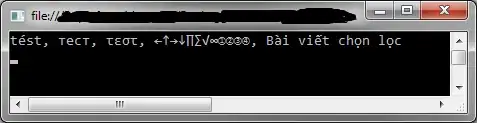

Neither Perl nor Python can natively print Unicode to the console under both *nix and Windows (though there are various workarounds). The main reason is that *nix OSes like their Unicode in UTF-8, while Windows likes its Unicode in UTF-16. But Windows also seems to make it very difficult to write wide characters to the console, even though both the console and `wprintf` are natively wide-character.
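To make the UTF-8 vs UTF-16 mismatch concrete, here is a small Python 3 sketch (3.8+ for `bytes.hex` with a separator; the helper name `encoded_forms` is hypothetical). It shows the bytes each encoding produces for the same two characters. UTF-16 is shown little-endian without a byte-order mark, which is how wide strings are laid out in memory on x86 Windows.

```python
from typing import Dict


def encoded_forms(text: str) -> Dict[str, bytes]:
    """Hypothetical helper: return the bytes a *nix-style (UTF-8) and a
    Windows-style (UTF-16, little-endian, no BOM) stream carry for text."""
    return {
        "utf-8": text.encode("utf-8"),
        "utf-16-le": text.encode("utf-16-le"),
    }


if __name__ == "__main__":
    for name, data in encoded_forms("世界").items():
        # bytes.hex(sep) is available from Python 3.8
        print(f"{name:10} {data.hex(' ')}")
```

This prints `e4 b8 96 e7 95 8c` for UTF-8 and `16 4e 4c 75` for UTF-16-LE: the same text as two incompatible byte sequences. That is why writing one encoding to a console that expects the other produces garbage.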

So the question is: is the situation any better if I look beyond these languages to Java, C#, Scala, etc.? Or are there any scripting languages that started out on Windows and were later ported to *nix?

Here is some ideal pseudocode:

```
function main()
{
    print( L"hello, 世界" );
}
```
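For comparison, here is how close Python 3 gets to that pseudocode using one of the workarounds mentioned above. This is a minimal sketch that assumes Python 3.7+ for `TextIOWrapper.reconfigure`. On Windows it also relies on the console I/O introduced in Python 3.6 by PEP 528, which writes to the console through the wide-character API. On older versions, or with legacy stdio forced, UTF-8 output to a console using a non-UTF-8 code page can still come out garbled.

```python
import sys


def main() -> None:
    # Workaround (assumes Python 3.7+): re-wrap stdout so str is encoded as
    # UTF-8 instead of the locale/console default. Replacement streams such
    # as io.StringIO have no reconfigure(), hence the hasattr() guard.
    if hasattr(sys.stdout, "reconfigure"):
        sys.stdout.reconfigure(encoding="utf-8")
    print("hello, 世界")


if __name__ == "__main__":
    main()
```

The `hasattr` guard keeps `main()` usable when stdout has been replaced by a stream such as `io.StringIO`, which lacks a `reconfigure` method.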