I am attempting to read multiple CSV files into a Pandas data frame. The CSVs aren't comma separated - the fields are delimited by a semicolon ";".

I based my code on the answers here.

My data is all in a specific subdirectory: /data/luftdaten/5331

This is what I run:

import glob

import pandas as pd

path =r'data/luftdaten/5331' # use your path

filenames = glob.glob(path + "/*.csv")

count_files = 0

dfs = []

for filename in filenames:

if count_files ==0:

dfs.append(pd.read_csv(filename, sep=";"))

count_files += 1

else:

dfs.append(pd.read_csv(filename, sep=";", skiprows=[0]))

count_files +=1

big_frame = pd.concat(dfs, ignore_index=True)

I use count_files to monitor if it is the first CSV - in which case I import the headers. Otherwise, it skips the headers.

The code executes OK.

If I run it with a single file in that directory, everything is fine:

big_frame.info()

Output:

RangeIndex: 146 entries, 0 to 145 Data columns (**total 12 column**s): sensor_id 146 non-null int64 sensor_type 146 non-null object etc......

If I run it with 2 or more files in the directory things go wrong from the start.

Output with 4 files:

RangeIndex: 1893 entries, 0 to 1892 Data columns (total **33 columns**): -2.077 1164 non-null float64 -2.130 145 non-null float64 2.40 145 non-null float64

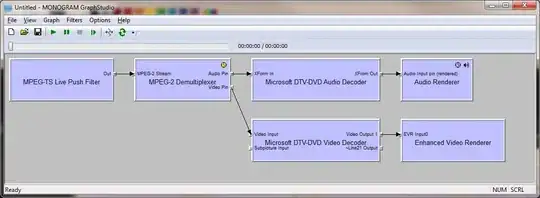

Running big_frame.head() on the single CSV version gives this, with the correct column names:

While running the same with four files imported gives me this:

Is there anything obvious that I am doing which is causing not only the number of rows to grow but the columns too?

Your guidance would be gratefully appreciated!