I was using rand() function to generate pseudo-random numbers between 0,1 for a simulation purpose, but when I decided to make my C++ code run in parallel (via OpenMP) I noticed rand() isn't thread-safe and also is not very uniform.

So I switched to using a (so-called) more uniform generator presented in many answers on other questions. Which looks like this

double rnd(const double & min, const double & max) {

static thread_local mt19937* generator = nullptr;

if (!generator) generator = new mt19937(clock() + omp_get_thread_num());

uniform_real_distribution<double> distribution(min, max);

return fabs(distribution(*generator));

}

But I saw many scientific errors in my original problem which I was simulating. Problems which were both against the results from rand() and also against common sense.

So I wrote a code to generate 500k random numbers with this function, calculate their average and do this for 200 times and plot the results.

double SUM=0;

for(r=0; r<=10; r+=0.05){

#pragma omp parallel for ordered schedule(static)

for(w=1; w<=500000; w++){

double a;

a=rnd(0,1);

SUM=SUM+a;

}

SUM=SUM/w_max;

ft<<r<<'\t'<<SUM<<'\n';

SUM=0;

}

We know if instead of 500k I could do it for infinite times it should be a simple line with value 0.5. But with 500k we will have fluctuations around 0.5.

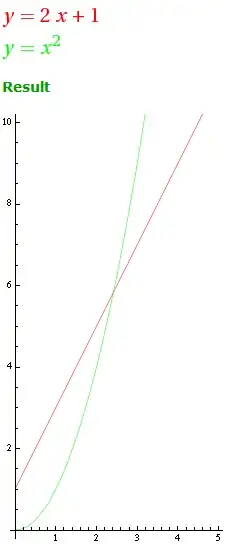

When running the code with a single thread, the result is acceptable:

But here is the result with 2 threads:

3 threads:

4 threads:

I do not have my 8 threaded CPU right now but the results were even worth there.

As you can see, They are both not uniform and very fluctuated around their average.

So is this pseudo-random generator thread-unsafe too?

Or am I making a mistake somewhere?