I'm trying to understand the relationship between a simple perceptron and the neural network one gets when using the Keras `Sequential` class.

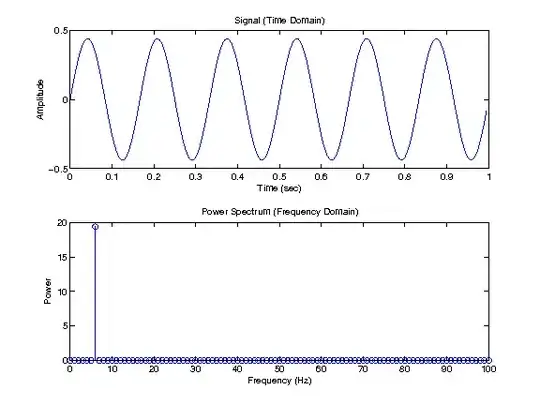

I learned that the perceptron looks like this:

Each "node" in the first layer is one of the features of a sample, $x_1, x_2, \ldots, x_n$.
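In code, this is how I understand a single perceptron: a weighted sum of the $n$ features plus a bias, passed through a step function. This is a minimal NumPy sketch; the sample, weights, and bias are made-up numbers, and the function name is my own.

```python
import numpy as np

def perceptron_predict(x, w, b):
    """Single perceptron: weighted sum of the n input features plus a bias,
    passed through a step (threshold) activation."""
    z = np.dot(w, x) + b          # z = w_1*x_1 + ... + w_n*x_n + b
    return 1 if z > 0 else 0      # step activation: fire (1) or not (0)

# Hypothetical sample with n = 4 features and arbitrary weights
x = np.array([1.0, 0.5, -1.0, 2.0])
w = np.array([0.2, -0.4, 0.1, 0.3])
b = -0.1
print(perceptron_predict(x, w, b))  # z is about 0.4, so this prints 1
```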

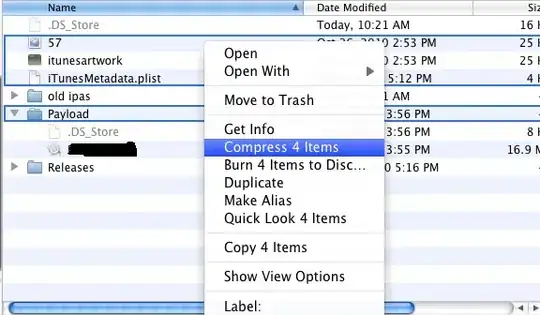

Could somebody explain the jump from this perceptron to the neural network I get from Keras?

Since the input layer has four nodes, does that mean the network consists of four perceptrons?
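To make the question concrete, here is how I currently picture it, which may be exactly the part I have wrong: the four input nodes just hold the feature values, and each unit of the following fully connected layer acts like one perceptron over all four features. The sketch below is NumPy rather than Keras, follows the formula the Keras `Dense` documentation gives (`activation(dot(input, kernel) + bias)`), and assumes hypothetical sizes (4 features, 3 units) and a step activation; real Keras models usually use sigmoid or ReLU instead.

```python
import numpy as np

def dense_forward(x, kernel, bias, activation):
    """One fully connected layer: activation(dot(input, kernel) + bias).
    kernel has shape (n_inputs, units); column j holds the weights of unit j."""
    return activation(x @ kernel + bias)

def step(z):
    # Perceptron-style threshold (assumption; Keras normally uses smooth activations)
    return (z > 0).astype(int)

rng = np.random.default_rng(0)
n_features, units = 4, 3                        # hypothetical sizes
x = rng.normal(size=n_features)                 # one sample: the 4 "input nodes"
kernel = rng.normal(size=(n_features, units))   # random weights for illustration
bias = rng.normal(size=units)

layer_out = dense_forward(x, kernel, bias, step)

# The same result computed unit by unit: each unit is one perceptron over all 4 features
per_unit = np.array([int(np.dot(kernel[:, j], x) + bias[j] > 0) for j in range(units)])
print(layer_out, per_unit)

# Trainable parameters: one weight per (feature, unit) pair plus one bias per unit
n_params = kernel.size + bias.size              # 4*3 + 3 = 15
print(n_params)
```

If this picture is right, the number of perceptron-like units would be set by the layer's number of units, not by the four input nodes.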