I am using Zeppelin to read Avro files that are gigabytes in size and contain billions of records. I have tried with 2 instances and with 7 instances on AWS EMR, but the performance seems about the same. Even with 7 instances it still takes a lot of time. The code is:

import com.databricks.spark.avro._ // spark-avro package, provides spark.read.avro

val snowball = spark.read.avro(snowBallUrl + folder + prefix + "*.avro")

val prod = spark.read.avro(prodUrl + folder + prefix + "*.avro")

snowball.persist()

prod.persist()

val snowballCount = snowball.count()

val prodCount = prod.count()

val u = snowball.union(prod)

Output:

snowballCount: Long = 13537690

prodCount: Long = 193885314

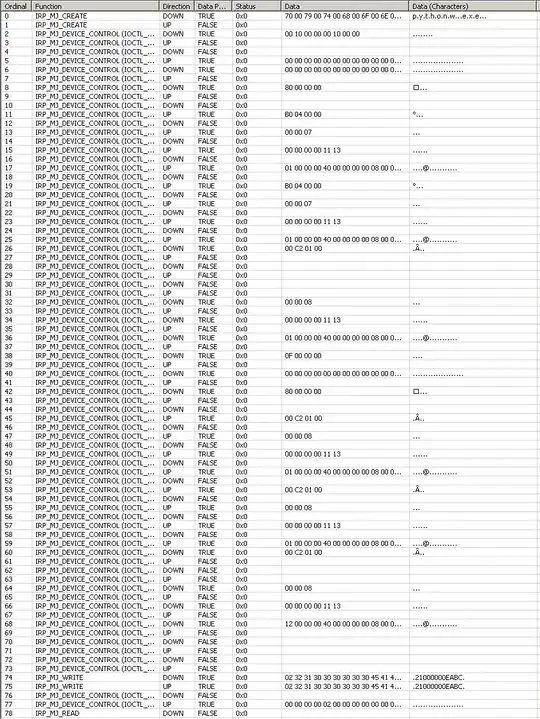

And the resources can be seen here:

spark.executor.cores is set to 1. If I try to change this number, Zeppelin stops working and the Spark context shuts down. It would be great if someone could give a hint on how to improve the performance.
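For reference, here is a minimal sketch of how the effective settings could be read from inside the notebook. It assumes the `spark` session that Zeppelin provides, and the variable names are only illustrative. Keys that are not set on the cluster fall back to "not set".

```scala
// Assumes the `spark` SparkSession that Zeppelin injects into the notebook.
val conf = spark.sparkContext.getConf

// Read each setting with a fallback, since a key may be unset on the cluster.
val executorCores = conf.get("spark.executor.cores", "not set")
val executorInstances = conf.get("spark.executor.instances", "not set")
val dynamicAllocation = conf.get("spark.dynamicAllocation.enabled", "not set")

// Default number of partitions Spark uses for parallel operations.
val defaultParallelism = spark.sparkContext.defaultParallelism

println(s"executor cores: $executorCores")
println(s"executor instances: $executorInstances")
println(s"dynamic allocation: $dynamicAllocation")
println(s"default parallelism: $defaultParallelism")
```

Comparing these values between the 2-instance and 7-instance clusters should show whether the extra instances are actually being used by the job.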

Edit: I checked how many partitions it created:

snowball.rdd.partitions.size

prod.rdd.partitions.size

u.rdd.partitions.size

res21: Int = 55

res22: Int = 737

res23: Int = 792
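To put these numbers in context, here is a rough sketch of how I understand partition counts relate to parallelism: each partition becomes one task, and with the default of one CPU per task, each executor core runs one task at a time. The helper `taskWaves` is hypothetical, and the executor counts (2 and 7, one per instance) are an assumption on my part, since I have not confirmed how many executors actually run.

```scala
// Rough estimate of how many sequential "waves" of tasks a stage needs,
// assuming one task per partition and one running task per executor core.
def taskWaves(partitions: Int, executors: Int, coresPerExecutor: Int): Int = {
  val slots = executors * coresPerExecutor
  require(slots > 0, "need at least one task slot")
  // Integer ceiling division: a partially filled last wave still counts.
  (partitions + slots - 1) / slots
}

// 792 is the partition count of the union above.
// The executor counts are assumptions (one executor per instance).
val wavesWith2 = taskWaves(792, executors = 2, coresPerExecutor = 1)
val wavesWith7 = taskWaves(792, executors = 7, coresPerExecutor = 1)

println(s"2 executors: $wavesWith2 waves, 7 executors: $wavesWith7 waves")
```

If the real number of task slots does not change between the two cluster sizes, the number of waves stays the same, which would be consistent with the equal performance I am seeing.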