Leading up to the question

I have a 2D complex valued image with a short series of values. I want to cluster similar pixels / segment the image. There is one more or less static image with a superimposed image that has some blobs in it that have a changing value (mostly the angle of the complex number) over the short series. They are also slightly discernable in the norm of the image.

My first attempt was k-means, but that really clustered according to the means (there is a distinction in mean values, especially compared to surrounding pixels, but the temporal and angular information is greater). My second attempt was ICA and then look at the k components with the largest magnitude, and that did successfully identify certain regions in my image as being different, but did not identify the group of pixels I was interested in (visually it is not hard to recognize them, but they are small).

Current situation

So because my first two tries did not work out, I looked around with google and it seemed spectral clustering might be appropriate. But I have some serious issues when using the method, mostly to do with limited available memory. I then thought, since I have so many pixels, I can just apply spectral clustering to seperate slabs of the data.

Someone here suggests clustering slabs first and then combine them, he then says 'at the end you will have the problem of recombining them and this problem can be solved easily'. The bits designated as 'easy' in explanations are hardly ever easy of course. He links to this paper, but that method does not process all the data in slabs. It rather excludes vectors that are not close to a principal component.

Question

My question has 2 parts:

1. How do I combine the results for the seperate segments? The eigenvectors are different and the cluster numbers are different. The result looks like it worked in the seperate slabs.

2. No distance / affinity between pixels in seperate slabs is taken into account. Can I make 'slabs between slabs'? For those slabs L and A are not symmetric, no clue how to perform the method then. Perhaps I can somehow compare / merge all eigenvectors at the end?

(3. Is there a similar or better method that does not need so much memory. Computation time is also borderline acceptable, easily exploding)

Matlab code example

%% generate data

% get some outer region without data

tempdisk = strel('disk',922/2); tempdisk = double(repmat((1+sqrt(-1)).*tempdisk.Neighborhood,[1 1 15]));

% make some noise

tempnoise = (rand(921,921,15)+sqrt(-1).*rand(921,921,15))./10;

% 'background signal'

tempim1 = double(imresize(mean(imread('cameraman.tif'),3),[921,921])); tempim1 = repmat(tempim1./max(tempim1(:)),[1 1 15]);

% 'target signal'

tempim2 = double(rgb2hsv(imread('fabric.png'))); tempim2 = imresize(tempim2(:,:,2),[921,921]); tempim2 = repmat(tempim2./max(tempim2(:)),[1 1 15]);

sin1 = repmat(permute(sin(2.*pi.*(0:14)./15),[1 3 2]),[921 921 1]);

% combine into data

complexdata = (sin1.*(1.0.*tempim1+0.5.*tempim2.^2).*exp(-sqrt(-1).*2.*pi.*sin1.*(tempim2.^2)).*tempdisk+tempnoise)./1.5;

%this is what the mean data looks like

meannorm = mean(abs(complexdata),3);

meanangle = mean(angle(complexdata),3);

figure; subplot(1,2,1); imshow(meannorm,[]); title('mean norm'); subplot(1,2,2); imshow(meanangle,[]); title('mean angle')

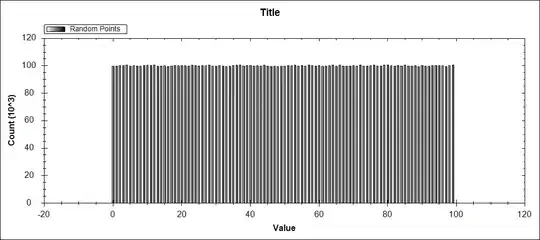

This is what the generated data looks like:

The bright blobs in the right image are what Im after. They have the strongest variation over time as well (and are correlated in time).

Then to set up the clustering:

%% perform spectral clustering in seperate slabs

% method from http://ai.stanford.edu/~ang/papers/nips01-spectral.pdf

%get all pixel vectors in a single matrix

complexrows = reshape(permute(complexdata, [3,1,2]), [15, 921*921]);

%k means and eigs dont accept complex, so convert to real here?

complexrowsTranspose = [real(complexrows);imag(complexrows)]';

%lets say 10000 by 10000 matrices are still ok

npix = 10000;

nslabpix = floor(length(complexrowsTranspose)/npix);

nrestpix = rem(length(complexrowsTranspose), npix);

Perform spectral clustering in slabs that fit into memory:

% spectral clustering

keig = 50;%how many eigenvectors needed? more is better

affinity_sigma = 1;% i dont understand how to calculate this from the paper

tic

% start with last slab (dynamically preallocate)

for islabpix = (nslabpix+1):-1:1;

%print progress

islabpix/nslabpix

toc

if islabpix>nslabpix

pixrange = (1:nrestpix) + ((islabpix-1)*npix);;

else

pixrange = (1:npix) + ((islabpix-1)*npix);

end

%calculate affinity between all voxels in slab

Aff = exp( -squareform(pdist(complexrowsTranspose(pixrange,:))).^2/(2*affinity_sigma^2) ); % affinity matrix

%calculate degree matrix for normalization

Dsq = sparse(size(Aff,1),size(Aff,2)); %degree matrix

for idiag=1:size(Aff,1)

Dsq(idiag,idiag) = sum(Aff(idiag,:))^(1/2);

end

%normalize affinity matrix

Lap = Dsq * Aff * Dsq; %normalize affinity matrix

%calculate eigenvectors of affinity matrix

[eigVectors(pixrange,1:keig), eigValues] = eigs(Lap, keig); %eigenvectors of normalized aff mat

normEigVectors(pixrange,1:keig) = eigVectors(pixrange,1:keig)./repmat(sqrt(sum(abs(eigVectors(pixrange,1:keig)).^2,2)), [1 keig]); %normalize rows of eigen vectors, normr only works on real numbers

% perform k means clustering on weights for eigenvectors

[idx,C,sumd,D] = kmeans([real(normEigVectors(pixrange,1:keig)),imag(normEigVectors(pixrange,1:keig))], 5); %k means on normalized eigenvecotrs

idxval(pixrange) = idx;

end

%reshape into image

idxim = reshape(idxval, [921, 921]);

figure; imshow(idxim,[])

toc

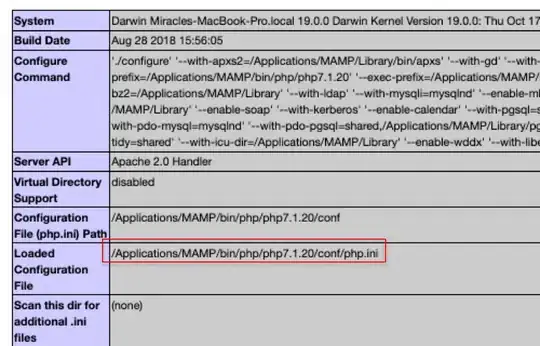

The resulting clustering:

The result looks like the method is working to some degree within each slab; the goal was to cluster all blobs with slightly higher norm and stronger angle variation (high saturation blobs from tempim2), which seem recognizable in the result. Now its mostly the seperate slabs that are the issue and that there are no cross-slab clusters. This took my computer about 15 minutes. I reduced the number of eigenvalues and the image size for this example so it runs in an acceptable amount of time. I think that illustrates part of my problem.