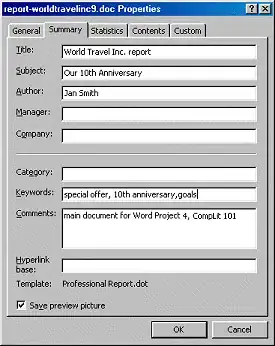

One of my queries is causing Postgres to freeze. It also results in some odd behaviour, such as increased read/write IOPS and the database consuming all the free space on the device. Here are some graphs that demonstrate this.

Before deleting the query

After deleting the query

Any idea why this is happening?