I would like to use reduce and accumulate in Pandas the way they work on lists in native Python. In the functools and itertools implementations, reduce and accumulate (sometimes called fold and cumulative fold in other languages) take a function of two arguments: f(accumulated_value, popped_value). As far as I can tell, Pandas has no similar implementation.

So, I have a list of binary variables and want to calculate the length of each run spent in the 1 state:

In [1]: from itertools import accumulate

import pandas as pd

drawdown_periods = [0,1,1,1,0,0,0,1,1,1,1,0,1,1,0]

applying accumulate to this with the lambda function

lambda x,y: (x+y)*y

gives

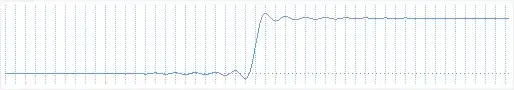

In [2]: list(accumulate(drawdown_periods, lambda x,y: (x+y)*y))

Out[2]: [0, 1, 2, 3, 0, 0, 0, 1, 2, 3, 4, 0, 1, 2, 0]

counting the length of each drawdown_period.
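To make explicit what accumulate does with the two-argument function, here is a minimal sketch of the same fold written as a plain loop. The names `run_length_step` and `accumulate_by_hand` are only illustrative and not part of any library.

```python
from itertools import accumulate

drawdown_periods = [0, 1, 1, 1, 0, 0, 0, 1, 1, 1, 1, 0, 1, 1, 0]


def run_length_step(acc, value):
    # acc is the running count so far, value is the next 0/1 flag.
    # Multiplying by value resets the count to 0 whenever value == 0.
    return (acc + value) * value


def accumulate_by_hand(values, func):
    """Explicit-loop equivalent of itertools.accumulate(values, func)."""
    result = []
    for i, value in enumerate(values):
        # The first element is emitted unchanged; func is only applied
        # from the second element onwards.
        acc = value if i == 0 else func(acc, value)
        result.append(acc)
    return result


run_lengths = accumulate_by_hand(drawdown_periods, run_length_step)
```

Each step sees only the previous accumulated value and the current element, which is the behaviour I would like to reproduce on a Series.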

Is there a smart but quirky way to supply a lambda function with two arguments? I may be missing a trick here.

I know that there is a lovely recipe with groupby (see StackOverflow How to calculate consecutive Equal Values in Pandas/How to emulate itertools.groupby with a series/dataframe). I'll repeat it since it's so lovely:

In [3]: df = pd.DataFrame(data=drawdown_periods, columns=['dd'])

df['dd'].groupby((df['dd'] != df['dd'].shift()).cumsum()).cumsum()

Out[3]:

0 0

1 1

2 2

3 3

4 0

5 0

6 0

7 1

8 2

9 3

10 4

11 0

12 1

13 2

14 0

Name: dd, dtype: int64
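For anyone unfamiliar with the recipe, it can be broken into its intermediate steps roughly as follows. This is only a sketch; the variable names `changed` and `run_id` are mine and do not come from the linked answers.

```python
import pandas as pd

drawdown_periods = [0, 1, 1, 1, 0, 0, 0, 1, 1, 1, 1, 0, 1, 1, 0]
df = pd.DataFrame(data=drawdown_periods, columns=['dd'])

# True wherever the value differs from the previous row, i.e. a new run starts.
# The first row compares against NaN and is therefore also True.
changed = df['dd'] != df['dd'].shift()

# A running count of run starts gives every run its own integer label.
run_id = changed.cumsum()

# A cumulative sum within each run counts up inside runs of 1s
# and stays at 0 inside runs of 0s.
run_lengths = df['dd'].groupby(run_id).cumsum()
```

The trick depends on the data being 0/1 flags, which is part of why it does not generalise to an arbitrary two-argument function.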

This is not the solution I want. I need a way of passing a two-parameter lambda function to a pandas-native reduce/accumulate function, since this would also work for many other functional programming recipes.
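To illustrate the interface I have in mind, here is a rough sketch that simply wraps the standard-library functions around a Series. The helpers `series_accumulate` and `series_reduce` are hypothetical names, not pandas API, and they loop in plain Python rather than doing anything pandas-native.

```python
from functools import reduce
from itertools import accumulate

import pandas as pd


def series_accumulate(s, func):
    # Hypothetical helper: cumulative fold over the values of a Series,
    # keeping the original index and name.
    return pd.Series(list(accumulate(s, func)), index=s.index, name=s.name)


def series_reduce(s, func):
    # Hypothetical helper: fold the values of a Series down to one value.
    return reduce(func, s)


drawdown_periods = [0, 1, 1, 1, 0, 0, 0, 1, 1, 1, 1, 0, 1, 1, 0]
df = pd.DataFrame(data=drawdown_periods, columns=['dd'])

run_lengths = series_accumulate(df['dd'], lambda x, y: (x + y) * y)
final_value = series_reduce(df['dd'], lambda x, y: (x + y) * y)
```

This reproduces the itertools result on a Series, but it iterates element by element, so I would not expect it to perform like a built-in pandas method.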