Summing up:

Your approach of renaming the columns is already a smart one; as the docs say:

> Examples
>
> Assembling a datetime from multiple columns of a DataFrame. The keys can be common abbreviations like ['year', 'month', 'day', 'minute', 'second', 'ms', 'us', 'ns'] or plurals of the same.
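A minimal sketch of that behaviour, assuming a recent pandas version: the column names alone tell `pd.to_datetime` which date component each column holds, so no string formatting is needed, and the plural spellings give the same result.

```python
import pandas as pd

# Columns named with keys pandas recognises (singular form)
singular = pd.DataFrame({'year': [2015, 2016], 'month': [2, 3], 'day': [4, 5]})

# The plural spellings are accepted as well
plural = singular.rename(columns={'year': 'years', 'month': 'months', 'day': 'days'})

dates = pd.to_datetime(singular)
dates_plural = pd.to_datetime(plural)

print(dates.tolist())
# [Timestamp('2015-02-04 00:00:00'), Timestamp('2016-03-05 00:00:00')]
```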

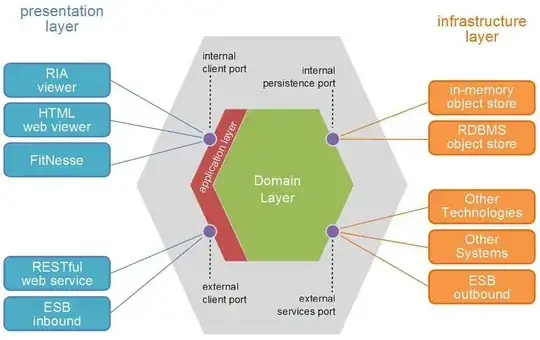

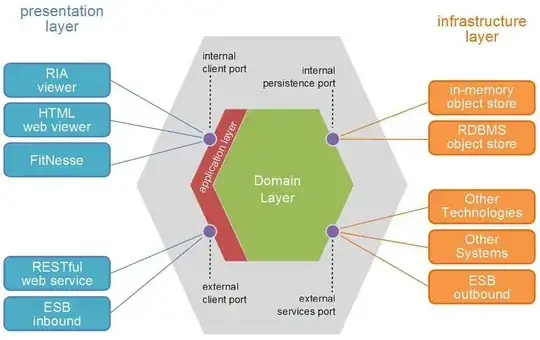

But there are some alternatives. In my experience, the list comprehension using `zip` is quite fast for small DataFrames, while from around 3000 rows onward renaming the columns becomes the quickest. The graph shows that renaming carries a noticeable overhead on small sets but pays off on large ones.

Alternatives

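```python
# list comprehension over zip
pd.to_datetime(['-'.join(map(str, i)) for i in zip(df['h3'], df['h2'], df['h1'])])

# .values.astype(str) + join
pd.to_datetime(['-'.join(i) for i in df[['h3', 'h2', 'h1']].values.astype(str)])

# row-wise apply
df[['h3', 'h2', 'h1']].astype(str).apply(lambda x: pd.to_datetime('-'.join(x)), axis=1)

# rename the columns and let pd.to_datetime assemble the dates
pd.to_datetime(df[['h1', 'h2', 'h3']].rename(columns={'h1': 'day', 'h2': 'month', 'h3': 'year'}))
```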
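Before comparing speed it is worth checking that the four alternatives agree. This is a small sketch, assuming (as the rename mapping implies) that `h1` is the day, `h2` the month and `h3` the year; the sample values are made up, with days and months chosen to differ so that a day/month mix-up would show.

```python
import pandas as pd

# Hypothetical sample data: h1 = day, h2 = month, h3 = year
df = pd.DataFrame({'h1': [13, 5, 28], 'h2': [1, 7, 12], 'h3': [2000, 2001, 2002]})

results = {
    'zip + join': pd.to_datetime(['-'.join(map(str, i)) for i in zip(df['h3'], df['h2'], df['h1'])]),
    'values + join': pd.to_datetime(['-'.join(i) for i in df[['h3', 'h2', 'h1']].values.astype(str)]),
    'apply': df[['h3', 'h2', 'h1']].astype(str).apply(lambda x: pd.to_datetime('-'.join(x)), axis=1),
    'rename': pd.to_datetime(df[['h1', 'h2', 'h3']].rename(columns={'h1': 'day', 'h2': 'month', 'h3': 'year'})),
}

# Some variants return a DatetimeIndex, others a Series, so compare plain lists of Timestamps
as_lists = {name: list(res) for name, res in results.items()}
reference = as_lists['rename']
for name, values in as_lists.items():
    print(name, values == reference)
```

The string-based variants rely on pandas parsing strings such as `'2001-7-5'`; depending on the pandas version this may emit a format-inference warning, while the `rename` variant never goes through strings at all.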

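Timings on Win10, measured after enlarging the frame with `df = pd.concat([df]*1000)` (same order as the alternatives above):

- list comprehension over `zip`: 2.74 ms ± 33.7 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)
- `.values.astype(str)` + `join`: 8.08 ms ± 158 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)
- row-wise `apply`: 158 ms ± 472 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)
- `rename` + `pd.to_datetime`: 2.64 ms ± 104 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)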

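Timings on a MacBook Air (same order as the alternatives above):

- list comprehension over `zip`: 100 loops, best of 3: 6.1 ms per loop
- `.values.astype(str)` + `join`: 100 loops, best of 3: 12.7 ms per loop
- row-wise `apply`: 1 loop, best of 3: 335 ms per loop
- `rename` + `pd.to_datetime`: 100 loops, best of 3: 4.7 ms per loop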
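To reproduce a timing like these outside IPython, the standard library can pick the loop count by itself: `timeit.Timer.autorange()` (Python 3.6+) raises the number of loops (1, 2, 5, 10, 20, ...) until one measurement takes at least 0.2 s, which is the same search the hand-written `while t < 0.2` loop in the benchmarking code does. A minimal sketch for the `rename` variant, assuming the 10-row sample frame from that code enlarged 1000x; the function name `by_rename` is just illustrative.

```python
import timeit

import numpy as np
import pandas as pd

# Sample frame from the benchmark code (h1 = day, h2 = month, h3 = year), enlarged 1000x
df = pd.DataFrame({
    'h1': np.arange(1, 11),
    'h2': np.arange(1, 11),
    'h3': np.arange(2000, 2010),
})
df = pd.concat([df] * 1000)


def by_rename():
    # Hypothetical helper wrapping the rename + pd.to_datetime alternative
    return pd.to_datetime(df[['h1', 'h2', 'h3']].rename(columns={'h1': 'day', 'h2': 'month', 'h3': 'year'}))


timer = timeit.Timer(by_rename)
# autorange() returns (number_of_loops, total_seconds) for the first run taking >= 0.2 s
number, _ = timer.autorange()
# Repeat with that loop count and keep the fastest run, like "best of 3"
best = min(timer.repeat(repeat=3, number=number)) / number
print(f"{number} loops, best of 3: {best * 1e3:.2f} ms per loop")
```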

Update: here is the benchmarking code I wrote (suggestions for improving it, or libraries that could help, are welcome):

```python
import timeit
from collections import defaultdict

import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

# Small starting frame: h1 = day, h2 = month, h3 = year
df = pd.DataFrame({
    'h1': np.arange(1, 11),
    'h2': np.arange(1, 11),
    'h3': np.arange(2000, 2010),
})

# Label -> callable; the lambdas read the global df, so they always see its current size
myfuncs = {
    "pd.to_datetime(['-'.join(map(str,i)) for i in zip(df['h3'],df['h2'],df['h1'])])":
        lambda: pd.to_datetime(['-'.join(map(str, i)) for i in zip(df['h3'], df['h2'], df['h1'])]),
    "pd.to_datetime(['-'.join(i) for i in df[['h3','h2', 'h1']].values.astype(str)])":
        lambda: pd.to_datetime(['-'.join(i) for i in df[['h3', 'h2', 'h1']].values.astype(str)]),
    "pd.to_datetime(df[['h1','h2','h3']].rename(columns={'h1':'day','h2':'month','h3':'year'}))":
        lambda: pd.to_datetime(df[['h1', 'h2', 'h3']].rename(columns={'h1': 'day', 'h2': 'month', 'h3': 'year'})),
}

d = defaultdict(dict)  # d[label][number of rows] = seconds per call
step = 10              # grow the frame 10x per round
cont = True

while cont:
    lendf = len(df)
    print(lendf)
    for k, v in myfuncs.items():
        # Increase the loop count until one measurement takes at least 0.2 s
        iters = 1
        while True:
            t = min(timeit.repeat(v, number=iters, repeat=3))
            if t >= 0.2:
                break
            iters *= 10
        # Divide by the loop count that was actually measured
        d[k][lendf] = t / iters
        # Stop growing the frame once any variant gets too slow
        if t > 2:
            cont = False
    df = pd.concat([df] * step)

pd.DataFrame(d).plot().legend(loc='upper center', bbox_to_anchor=(0.5, -0.15))
plt.yscale('log')
plt.xscale('log')
plt.ylabel('seconds')
plt.xlabel('df rows')
plt.show()
```

Returns: