AREnvironmentProbeAnchor (available in iOS 12.0+) is an anchor for image-based lighting (IBL). With it, a model's PBR shader can reflect light from its real-world surroundings. The principle is simple: six square images, one from each source, feed the environment reflection channel of a shading material. These six sources (a rig) point in the following directions: +x/-x, +y/-y, +z/-z. The image below illustrates the six directions of the rig:

The adjacent zFar planes form a cube, don't they?
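To make the rig's layout concrete, here is a minimal sketch in plain Swift. The `CubeFace` enum and its `direction` property are hypothetical names invented for illustration; they are not part of ARKit. The case order follows the slice order Metal uses for cube textures (+X, -X, +Y, -Y, +Z, -Z).

```swift
// Hypothetical type for illustration only; ARKit does not expose it.
// The six faces of a cube map, one per axis direction.
enum CubeFace: Int, CaseIterable {
    case positiveX, negativeX, positiveY, negativeY, positiveZ, negativeZ

    // Unit vector along which this face's "camera" of the rig looks.
    var direction: SIMD3<Float> {
        switch self {
        case .positiveX: return SIMD3<Float>( 1,  0,  0)
        case .negativeX: return SIMD3<Float>(-1,  0,  0)
        case .positiveY: return SIMD3<Float>( 0,  1,  0)
        case .negativeY: return SIMD3<Float>( 0, -1,  0)
        case .positiveZ: return SIMD3<Float>( 0,  0,  1)
        case .negativeZ: return SIMD3<Float>( 0,  0, -1)
        }
    }
}

// List every face together with its viewing direction.
for face in CubeFace.allCases {
    print(face, face.direction)
}
```

Opposite faces cancel out, so the six directions sum to the zero vector, which is a quick sanity check that the rig covers all sides.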

Texture patches are captured only for the areas your camera has actually scanned. ARKit uses machine learning algorithms to fill in the gaps, so that the cube is covered with a complete 360-degree texture.

AREnvironmentProbeAnchor positions this photo rig at a specific point in the scene. All you have to do is enable environment texture map generation in the AR session. There are two options for this:

ARWorldTrackingConfiguration.EnvironmentTexturing.manual

With manual environment texturing, you identify points in the scene for which you want light probe texture maps by creating AREnvironmentProbeAnchor objects and adding them to the session.
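As a rough sketch of the manual option, the helper below runs a session with .manual texturing and places one probe. The function name `addManualProbe`, its parameters, and the default 2 m extent are assumptions made for illustration; the ARKit calls themselves (`AREnvironmentProbeAnchor(transform:extent:)` and `ARSession.add(anchor:)`) are the standard API.

```swift
import ARKit

// Hypothetical helper, not part of ARKit: runs world tracking with manual
// environment texturing and places a single probe at `position`
// (world space, in meters).
@available(iOS 12.0, *)
func addManualProbe(to session: ARSession,
                    at position: simd_float3,
                    extent: simd_float3 = simd_float3(repeating: 2)) {
    let config = ARWorldTrackingConfiguration()
    config.environmentTexturing = .manual
    session.run(config)

    // Identity rotation; the last column holds the probe's position.
    var transform = matrix_identity_float4x4
    transform.columns.3 = simd_float4(position, 1)

    // `extent` is the size of the area around the probe that the
    // texture map is meant to represent.
    let probe = AREnvironmentProbeAnchor(transform: transform, extent: extent)
    session.add(anchor: probe)
}
```

A call such as `addManualProbe(to: sceneView.session, at: simd_float3(0, 0, -1))` would place a probe one meter in front of the session's origin. In a real app you would probably configure the session once and add probes separately.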

ARWorldTrackingConfiguration.EnvironmentTexturing.automatic

With automatic environment texturing, ARKit automatically creates, positions, and adds AREnvironmentProbeAnchor objects to the session.

In both cases, ARKit automatically generates environment textures as the session collects camera imagery. Use a delegate method such as session(_:didUpdate:) to find out when a texture is available, and access it from the anchor's environmentTexture property.

If you display AR content using ARSCNView and the automaticallyUpdatesLighting option, SceneKit automatically retrieves AREnvironmentProbeAnchor texture maps and uses them to light the scene.

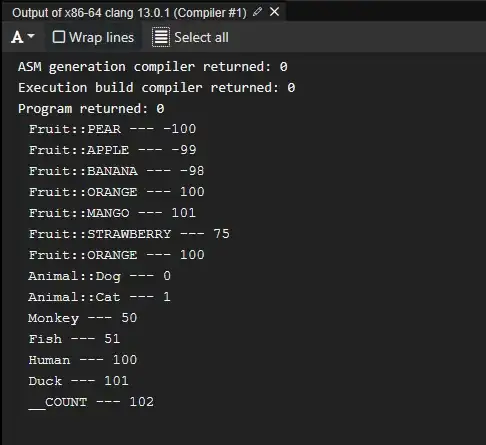

Here's what your code in ViewController.swift might look like:

    // Let SceneKit apply the environment probe textures to the scene lighting.
    sceneView.automaticallyUpdatesLighting = true

    let torusNode = SCNNode(geometry: SCNTorus(ringRadius: 2, pipeRadius: 1.5))
    sceneView.scene.rootNode.addChildNode(torusNode)

    // A fully metallic, perfectly smooth PBR material shows reflections best.
    let reflectiveMaterial = SCNMaterial()
    reflectiveMaterial.lightingModel = .physicallyBased
    reflectiveMaterial.metalness.contents = 1.0
    reflectiveMaterial.roughness.contents = 0
    reflectiveMaterial.diffuse.contents = UIImage(named: "brushedMetal.png")

    // firstMaterial takes a single SCNMaterial, not an array.
    torusNode.geometry?.firstMaterial = reflectiveMaterial

    let config = ARWorldTrackingConfiguration()
    if #available(iOS 12.0, *) {
        config.environmentTexturing = .automatic // magic happens here
    }
    sceneView.session.run(config)

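Then, with your view controller set as the session's delegate (sceneView.session.delegate = self), implement the session(_:didUpdate:) instance method: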

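    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        // One update can carry several anchors, so check each of them
        // rather than only the first.
        for case let envProbeAnchor as AREnvironmentProbeAnchor in anchors {
            // environmentTexture stays nil until ARKit has generated the cube map.
            print(envProbeAnchor.environmentTexture as Any)
            print(envProbeAnchor.extent)
        }
    }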
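If you turn automaticallyUpdatesLighting off, or want control over which probe lights the scene, you can assign the texture yourself. The sketch below is one possible approach, not the only one: `ProbeLightingDelegate` is a hypothetical class name, and it assumes that SceneKit accepts the probe's cube-map MTLTexture as the contents of the scene's lightingEnvironment property.

```swift
import ARKit
import SceneKit

// Hypothetical delegate class for illustration: forwards the most recently
// updated probe texture to the scene's image-based lighting slot.
@available(iOS 12.0, *)
final class ProbeLightingDelegate: NSObject, ARSessionDelegate {
    private weak var scene: SCNScene?

    init(scene: SCNScene) {
        self.scene = scene
    }

    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let probe as AREnvironmentProbeAnchor in anchors {
            // Skip probes whose cube map has not been generated yet.
            guard let texture = probe.environmentTexture else { continue }
            // Assumption: a cube MTLTexture is valid lightingEnvironment contents.
            scene?.lightingEnvironment.contents = texture
        }
    }
}
```

To try it, keep a strong reference to the object (for example in a property of your view controller) and assign it to sceneView.session.delegate, because ARSession holds its delegate weakly. With several probes in the scene, this simple version lets the last updated one win; picking the probe nearest to each object would need extra logic.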