We're currently using Flask-RQ along with Flask-SQLAlchemy and running into some performance problems. Here's our high-level architecture:

1. An API endpoint is hit.

2. Time-consuming tasks get queued into RQ.

3. The RQ worker forks a new process to perform the job.

4. The job typically consists of a database query via Flask-SQLAlchemy plus additional processing.
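To make the fork-and-run steps of this architecture concrete, here is a minimal standard-library model of a fork-per-job worker (Unix only, since it uses `os.fork`). It is not RQ's code. `expensive_setup` is a hypothetical stand-in for per-process initialization such as ORM mapping setup, memoized so it runs at most once per process. Because each job runs in a fresh child, the child pays that cost unless the parent already did the work before forking, in which case the child inherits the initialized state.

```python
import os
import time

# Hypothetical stand-in for per-process ORM setup. The flag makes it run at
# most once per process; a forked child starts with a copy of the parent's flag.
_setup_done = False


def expensive_setup() -> bool:
    """Run the one-time setup if this process hasn't yet; return True if it ran."""
    global _setup_done
    if _setup_done:
        return False
    time.sleep(0.05)  # simulate the first-use initialization cost
    _setup_done = True
    return True


def run_job_in_forked_child() -> bool:
    """Fork one child per job and report whether that child paid the setup cost."""
    pid = os.fork()
    if pid == 0:  # in the child: run the job, then exit without cleanup handlers
        paid = expensive_setup()
        # ... the job's database query and processing would go here ...
        os._exit(1 if paid else 0)
    _, status = os.waitpid(pid, 0)  # in the parent: wait for the child to finish
    return os.WEXITSTATUS(status) == 1


def simulate(jobs: int, warm_parent: bool) -> int:
    """Return how many of `jobs` forked children had to run the setup themselves."""
    global _setup_done
    _setup_done = False
    if warm_parent:
        expensive_setup()  # pay the cost once in the parent, before any fork
    return sum(run_job_in_forked_child() for _ in range(jobs))


if __name__ == "__main__":
    print("cold parent:", simulate(5, warm_parent=False))
    print("warm parent:", simulate(5, warm_parent=True))
```

With `warm_parent=False`, every child reports paying the setup; with `warm_parent=True`, none does, because `fork` gives each child a copy of the parent's already-initialized memory.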

When I profile step (4) with cProfile, I see:

```
ncalls   tottime    percall    cumtime  percall   filename:lineno(function)
1        5.7e-05    5.7e-05    4.064    4.064     __init__.py:496(__get__)
535/1    0.002901   0.002901   3.914    3.914     base.py:389(_inspect_mapped_class)
1        0.001281   0.001281   3.914    3.914     mapper.py:2782(configure_mappers)
462/1    0.000916   0.000916   3.914    3.914     base.py:404(class_mapper)
1        1.4e-05    1.4e-05    3.914    3.914     mapper.py:1218(_configure_all)
59       0.01247    0.0002113  3.895    0.06601   mapper.py:1750(_post_configure_properties)
985/907  0.01748    1.927e-05  3.29     0.003627  interfaces.py:176(init)
235/157  0.00914    5.822e-05  3.162    0.02014   relationships.py:1650(do_init)
...
```
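A listing like the one above can be produced with the standard library's `cProfile` and `pstats` modules, sorted by cumulative time so the most expensive call trees come first. In this sketch, `job` and `slow_setup` are hypothetical stand-ins for the real RQ job and its one-time initialization.

```python
import cProfile
import io
import pstats


def slow_setup() -> int:
    """Hypothetical stand-in for expensive one-time initialization."""
    return sum(i * i for i in range(200_000))


def job() -> int:
    """Hypothetical job: the setup plus a small amount of real work."""
    return slow_setup() + sum(range(1_000))


def profile_job(limit: int = 10) -> str:
    """Profile one call to job() and return the top `limit` rows by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    job()
    profiler.disable()

    out = io.StringIO()
    stats = pstats.Stats(profiler, stream=out)
    stats.sort_stats(pstats.SortKey.CUMULATIVE).print_stats(limit)
    return out.getvalue()


if __name__ == "__main__":
    print(profile_job())
```

Sorting by `pstats.SortKey.TIME` (`tottime`) instead shows which functions are expensive in their own code, excluding time spent in callees.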

And

I see a lot of time being spent in SQLAlchemy; and I assume that is some overhead that maps the SQL data to the ORM object. So, I have a two questions:

- Is the amount of time spent to initialize the SQL-ORM mapping expected? I'm running on AWS xlarge instances at 70% CPU. All my relationships are loaded dynamically using

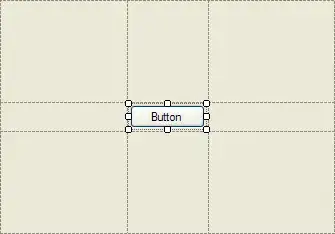

lazy='dynamic'and the corresponding query takes < 10 milliseconds, according to pg_stat_statements. - Assuming there's no way to work around (1), another way to avoid the constant overhead is to have a queue like this. So, instead of forking a new process for every job, the job runs in the thread directly. Is this advisable for distributed systems? I wasn't able to find a framework that did this so maybe it isn't a good idea?

One last note: if I'm being stupid and missing an obvious solution, please let me know!