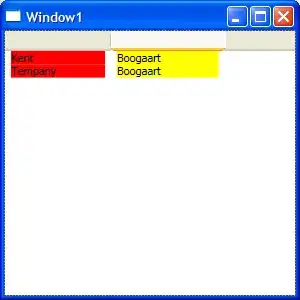

I have each character as a Mat object which are of different sizes. Some sample images are,

I am trying to convert them to a PIL Image and then into a standard 12x12 matrix, which will be flattened into a 1D array with 144 columns. Following a suggestion, this is the code I am using:

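```python
import cv2
import numpy as np
from PIL import Image

# roi is a Mat object (a NumPy array in OpenCV's Python bindings)
images = cv2.resize(roi, (12, 12))

myImage = Image.fromarray(images)

withoutReshape = np.array(myImage.getdata())  # Corresponding output attached
print(withoutReshape)

# PIL's size is (width, height); NumPy's shape order is (height, width, channels)
withReshape = np.array(myImage.getdata()).reshape(myImage.size[1], myImage.size[0], 3)
print(withReshape)  # Corresponding output attached
```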
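The two printed arrays hold the same pixel values; only their shape differs. `getdata()` returns the pixels as one flat sequence, row after row, so for a 12x12 RGB image `np.array(myImage.getdata())` has shape `(144, 3)`, and `reshape` puts those 144 pixels back onto the 12x12 grid. The NumPy-only sketch below simulates that flat pixel list with a hypothetical array `img`, assumed to be RGB. A grayscale (`'L'` mode) image gives a flat `(144,)` array instead, and the `reshape(..., 3)` call then raises a `ValueError`.

```python
import numpy as np

# Hypothetical 12x12 RGB image; distinct values make the layout visible.
img = np.arange(12 * 12 * 3).reshape(12, 12, 3)

# Equivalent of np.array(myImage.getdata()): one row per pixel, row-major order.
flat_pixels = img.reshape(-1, 3)
print(flat_pixels.shape)  # (144, 3)

# Pixel 12 of the flat list is row 1, column 0 of the image.
print(np.array_equal(flat_pixels[12], img[1, 0]))  # True

# reshape(height, width, 3) restores the grid layout.
restored = flat_pixels.reshape(12, 12, 3)
print(restored.shape)  # (12, 12, 3)
print(np.array_equal(restored, img))  # True
```

`np.asarray(myImage)` also returns the `(height, width, channels)` array directly, without going through `getdata()`.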

I am unable to see the significance of using `reshape`. Also, how can I flatten the matrix into a 1D array after using `resize`?
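Because `cv2.resize` already returns a NumPy array, the PIL round-trip may not be needed for flattening. `reshape(-1)` (or `.ravel()` / `.flatten()`) turns a 12x12 array into 144 values. This is a minimal sketch, assuming the characters are single-channel grayscale (for example after `cv2.cvtColor(roi, cv2.COLOR_BGR2GRAY)`). The `resize_nearest` helper is hypothetical: a NumPy-only nearest-neighbour stand-in for `cv2.resize` so the example runs without OpenCV. `cv2.resize` defaults to bilinear interpolation, so its pixel values will differ, but the shapes are the same.

```python
import numpy as np


def resize_nearest(img: np.ndarray, size: int = 12) -> np.ndarray:
    """Nearest-neighbour resize of an image array to size x size.

    Hypothetical stand-in for cv2.resize(img, (size, size),
    interpolation=cv2.INTER_NEAREST); channels (if any) are kept as-is.
    """
    rows, cols = img.shape[:2]
    # Map each output row/column back to a source row/column.
    row_idx = np.arange(size) * rows // size
    col_idx = np.arange(size) * cols // size
    return img[row_idx[:, None], col_idx]


# Hypothetical grayscale characters of different sizes.
chars = [
    np.arange(20, dtype=np.uint8).reshape(4, 5),
    np.full((30, 17), 255, dtype=np.uint8),
]

# Resize each to 12x12, then flatten: reshape(-1) gives 144 values per character.
vectors = [resize_nearest(c).reshape(-1) for c in chars]
print(vectors[0].shape)  # (144,)

# One character per row: an (n_chars, 144) feature matrix.
features = np.stack(vectors)
print(features.shape)  # (2, 144)

# A 3-channel 12x12 image would flatten to 432 values, not 144.
rgb = np.zeros((12, 12, 3), dtype=np.uint8)
print(resize_nearest(rgb).reshape(-1).shape)  # (432,)
```

`.flatten()` always returns a copy, while `.ravel()` and `reshape(-1)` return a view when the memory layout allows it.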