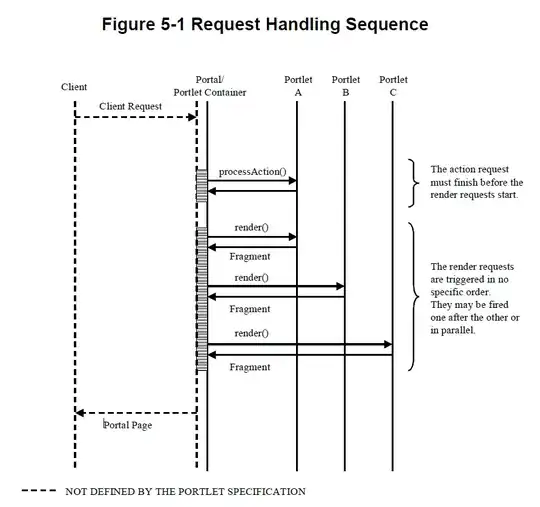

I've got this image which is massive but 1 square represents a number of pixel values but I want an image that has only 1 pixel with a particular value. The squares are not all the same size.

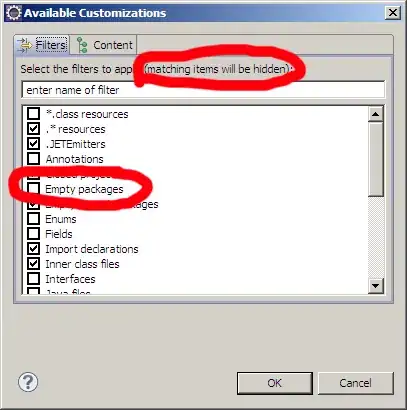

Some of the columns are narrower and some are wider. This is the example which is part of the big image:

As you can see the squares on the left hand side is bigger than the one on the right handside. That's the problem!

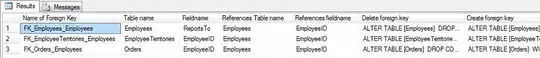

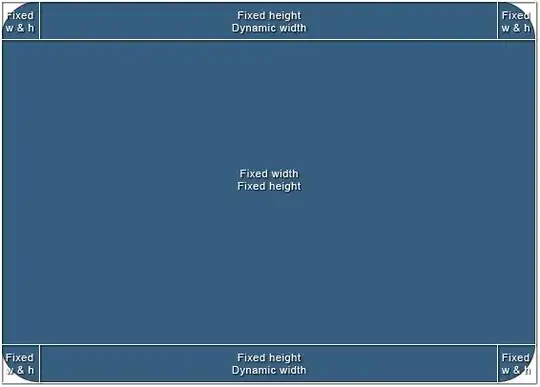

Actual image:

For example, using the code below, when I try to convert my image to a smaller pixel by pixel one, I get this, which is completely different to the initial picture.

from PIL import Image

import numpy as np

img = Image.open('greyscale_intense.png').convert('L') # convert image to 8-bit grayscale

WIDTH, HEIGHT = img.size

a = list(img.getdata()) # convert image data to a list of integers

# convert that to 2D list (list of lists of integers)

a = np.array ([a[offset:offset+WIDTH] for offset in range(0, WIDTH*HEIGHT, WIDTH)])

print " "

print "Intial array from image:" #print as array

print " "

print a

rows_mask = np.insert(np.diff(a[:, 0]).astype(np.bool), 0, True)

columns_mask = np.insert(np.diff(a[0]).astype(np.bool), 0, True)

b = a[np.ix_(rows_mask, columns_mask)]

print " "

print "Subarray from Image:" #print as array

print " "

print b

print " "

print "Subarray from Image (clearer format):" #print as array

print " "

for row in b: #print as a table like format

print(' '.join('{:3}'.format(value) for value in row))

img = Image.fromarray(b, mode='L')

img.show()

What I've done in the code is create an array from the initial image and then by ignoring an repeated values, create a subarray that has no repeated values. The new image was constructed using that.

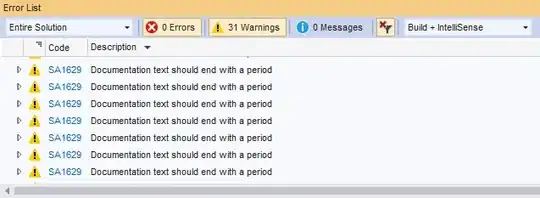

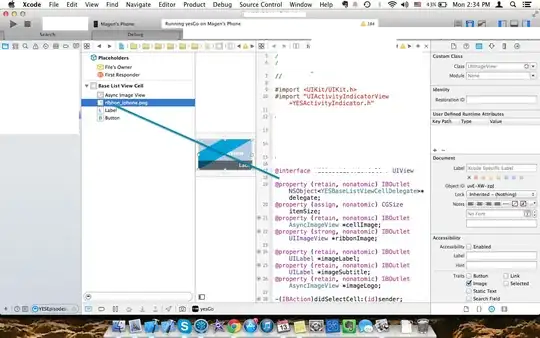

For example for this image:

The result I get is:

As you can see from the array 38 is repeated 9 times while 27 is repeated 8 times...

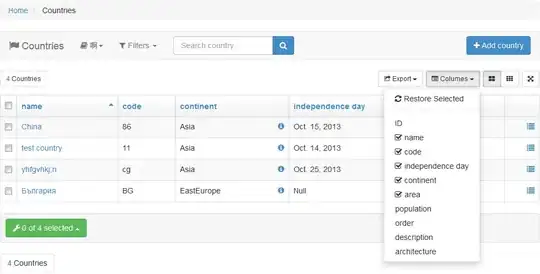

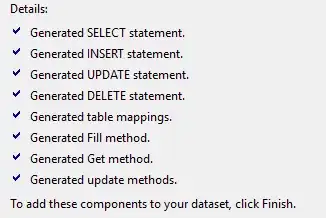

My final aim is to do the same process for a coloured RGB image as shown here.

Please help!