I have several app icons, each resized to 36x36, and I want a similarity score between any two of them. I converted them to black and white with OpenCV's `threshold` function. Following advice from other questions, I applied `matchTemplate` with the `TM_CCOEFF_NORMED` method to two icons, but I got a negative result, which confuses me.

As I read the documentation, the result array should not contain any negative numbers. Could anyone explain why I get a negative number, and whether a negative value is meaningful here?

I spent an hour trying to edit my post and kept hitting a code indentation error, even after removing all the code from the edit. I have tried both the grayscale and the black-and-white versions of the icons. Whenever two icons are quite different, I get a negative result.

If I use the original icons at 48x48, everything works fine. I don't know whether the problem is related to my resize step.

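    import cv2

    # Read the icons, convert them to grayscale, and resize to 36x36.
    # interpolation is passed by keyword: the third positional argument of cv2.resize is dst.
    im1 = cv2.imread('./app_icon/pacrdt1.png')
    im1g = cv2.resize(cv2.cvtColor(im1, cv2.COLOR_BGR2GRAY), (36, 36), interpolation=cv2.INTER_CUBIC)
    im2 = cv2.imread('./app_icon/pacrdt2.png')
    im2g = cv2.resize(cv2.cvtColor(im2, cv2.COLOR_BGR2GRAY), (36, 36), interpolation=cv2.INTER_CUBIC)
    im3 = cv2.imread('./app_icon/mny.png')
    im3g = cv2.resize(cv2.cvtColor(im3, cv2.COLOR_BGR2GRAY), (36, 36), interpolation=cv2.INTER_CUBIC)

    # Convert to black and white. With THRESH_OTSU the threshold is chosen
    # automatically, so the 128 passed here is ignored.
    (thresh1, bw1) = cv2.threshold(im1g, 128, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)
    (thresh2, bw2) = cv2.threshold(im2g, 128, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)
    (thresh3, bw3) = cv2.threshold(im3g, 128, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)

    # Match template. Both images are 36x36, so the result is a 1x1 array.
    # This compares the grayscale versions; I get the same kind of result with bw1 and bw3.
    templ_match = cv2.matchTemplate(im1g, im3g, cv2.TM_CCOEFF_NORMED)[0][0]
    templ_diff = 1 - templ_match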
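To make the arithmetic concrete: as far as I understand the `TM_CCOEFF_NORMED` formula, each image has its own mean subtracted before the two are correlated and normalized. For two images of the same size, that would make the single score behave like a Pearson correlation coefficient, which ranges from -1 to 1. Below is a minimal sketch of that calculation in plain Python. The helper name `ccoeff_normed` and the tiny 2x2 "icons" are made up for illustration, and this is not OpenCV's actual implementation.

```python
import math


def ccoeff_normed(a, b):
    """Zero-mean normalized correlation of two equally sized 2-D lists.

    Hypothetical helper for illustration; it mirrors my reading of the
    TM_CCOEFF_NORMED formula for the case where both images have the same size.
    """
    xs = [float(v) for row in a for v in row]
    ys = [float(v) for row in b for v in row]
    if len(xs) != len(ys):
        raise ValueError("images must have the same number of pixels")

    # Subtract each image's own mean, so bright and dark pixels get opposite signs.
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    dx = [v - mean_x for v in xs]
    dy = [v - mean_y for v in ys]

    num = sum(p * q for p, q in zip(dx, dy))
    den = math.sqrt(sum(p * p for p in dx) * sum(q * q for q in dy))

    # A constant image has zero variance, so the score is undefined there.
    # Returning 0.0 in that case is an assumption made for this sketch.
    return num / den if den else 0.0


# Made-up 2x2 black-and-white "icons": the second is the first with colors inverted.
icon = [[0, 255], [255, 0]]
inverted = [[255, 0], [0, 255]]

same_score = ccoeff_normed(icon, icon)          # identical images
inverted_score = ccoeff_normed(icon, inverted)  # same pattern, inverted colors

print(same_score, inverted_score)
```

If this reading is right, a negative score would mean the bright regions of one icon tend to line up with the dark regions of the other, so `1 - templ_match` could range from 0 to 2 rather than from 0 to 1.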

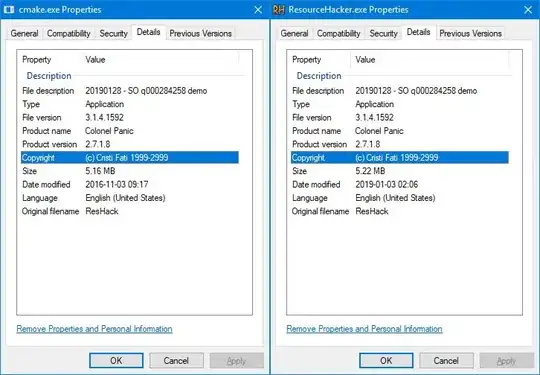

sample:

Edit 2: I treat icons that differ only in background color or font color as very similar (a viewer would recognize them as the same icon, like images 1 and 2 in my sample). That is why I convert the icons to black and white before comparing them. I hope this makes sense.