I would like to get the corner coordinates of a rectangular object in a noisy greyscale image.

I start with this image: https://i.stack.imgur.com/cLADI.jpg. The central region contains a checkered rectangle with different grey intensities. What I want are the coordinates of the rectangle marked in green: https://i.stack.imgur.com/YWZhc.jpg.

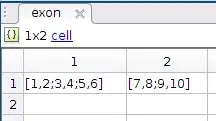

With the code below:

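```python
import cv2
import numpy as np
import matplotlib.pyplot as plt

# Load the image as 8-bit greyscale (flag 0 == cv2.IMREAD_GRAYSCALE)
im = cv2.imread("opencv_frame_0.tif", cv2.IMREAD_GRAYSCALE)
data = np.array(im)

# Canny edge map (computed here but not used further below)
edg = cv2.Canny(data, 120, 255)

# Inverse binary threshold (type 1 == cv2.THRESH_BINARY_INV):
# pixels <= 140 become 255, brighter pixels become 0
ret, thresh = cv2.threshold(data, 140, 255, cv2.THRESH_BINARY_INV)

plt.imshow(thresh, interpolation='none', cmap='gray')
plt.show()
```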

I get https://i.stack.imgur.com/3vpPT.jpg, which looks quite good, but I don't know how to efficiently get the corner coordinates of the central white frame. I will later have other images like this in which the central grey rectangle can be a different size, so I want the code to work for those as well.

I tried the approaches from "OpenCV - How to find rectangle contour of a rectangle with round corner?" and "OpenCV/Python: cv2.minAreaRect won't return a rotated rectangle". With the best settings I could find, the latter gives me https://i.stack.imgur.com/jcmA5.jpg.

Any help is appreciated! Thanks.