I am trying to upload several files in parallel (3 at a time for now) using XMLHttpRequest. I have some code that pulls them from a list of dropped files and makes sure that 3 files are being sent at any given moment (if available).
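To make the scheduling part concrete, here is a minimal sketch of that kind of limiter. It is not my exact code: `createUploadQueue`, `push`, `activeCount` and `pendingCount` are hypothetical names made up for illustration, and each task is assumed to call `done` once when its request finishes (load, error or abort).

```javascript
// Hypothetical helper: keeps at most `limit` tasks running at once.
// A task is a function that receives a `done` callback and calls it
// when its upload has finished, whether by load, error or abort.
function createUploadQueue(limit) {
  var pending = []; // tasks waiting to start
  var active = 0;   // tasks currently running

  function next() {
    // Start tasks until the limit is reached or nothing is left to start.
    while (active < limit && pending.length > 0) {
      var task = pending.shift();
      active++;
      task(makeDone());
    }
  }

  function makeDone() {
    var called = false;
    return function done() {
      if (called) { return; } // ignore repeated calls from the same task
      called = true;
      active--;
      next();
    };
  }

  return {
    push: function (task) {
      pending.push(task);
      next();
    },
    activeCount: function () { return active; },
    pendingCount: function () { return pending.length; }
  };
}
```

With something like this, each dropped file is pushed as a task that builds and sends its request and then calls `done` from `onload`, `onerror` and `onabort`, so a new upload starts as soon as one of the 3 slots frees up.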

Here is the code that sends each file, which is standard as far as I know:

    var xhr = item._xhr = new XMLHttpRequest();
    var form = new FormData();
    var that = this;

    angular.forEach(item.formData, function(obj) {
        angular.forEach(obj, function(value, key) {
            form.append(key, value);
        });
    });

    form.append(item.alias, item._file, item.file.name);

    xhr.upload.onprogress = function(event) {
        // ...
    };

    xhr.onload = function() {
        // ...
    };

    xhr.onerror = function() {
        // ...
    };

    xhr.onabort = function() {
        // ...
    };

    xhr.open(item.method, item.url, true);

    xhr.withCredentials = item.withCredentials;

    angular.forEach(item.headers, function(value, name) {
        xhr.setRequestHeader(name, value);
    });

    xhr.send(form);

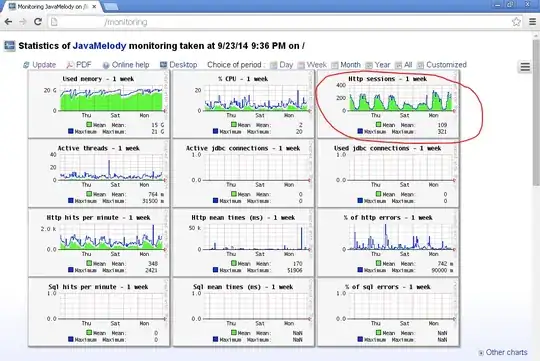

Looking at the network monitor in Opera's developer tools, I see that this kinda works and I get 3 files "in progress" at all times:

However, if I look at the way the requests are progressing, I see that 2 of the 3 uploads (here, the seemingly long-running ones) are put in status "Pending" and only 1 of the 3 requests is truly active at a time. This is reflected in the upload times as well: the parallelism does not appear to bring any time improvement.

I have placed console logs all over my code, and the problem does not seem to be in my code.

Are there any browser limitations on uploading files in parallel that I should know about? As far as I know, the browser limits on concurrent AJAX requests are well above the 3 I use here... Does attaching a file to the request change things?