SynchronousQueue is a very special kind of queue: it implements a rendezvous (the producer waits until a consumer is ready, and the consumer waits until a producer is ready) behind the Queue interface.
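
To make the rendezvous concrete, here is a minimal sketch. The class and method names (RendezvousDemo, handOff, offerWithoutConsumer) are made up for illustration; only the SynchronousQueue calls come from the JDK.

```java
import java.util.concurrent.SynchronousQueue;

class RendezvousDemo {

    // Hands one value from a producer thread to the calling (consumer) thread.
    static int handOff(final int value) throws InterruptedException {
        final SynchronousQueue<Integer> q = new SynchronousQueue<Integer>();

        Thread producer = new Thread(new Runnable() {
            public void run() {
                try {
                    q.put(value); // blocks until a consumer calls take()
                } catch (InterruptedException ex) {
                    Thread.currentThread().interrupt();
                }
            }
        });
        producer.start();

        int received = q.take(); // blocks until a producer calls put()
        producer.join();
        return received;
    }

    // The queue has no capacity, so a non-blocking offer() fails
    // when no consumer is already waiting.
    static boolean offerWithoutConsumer() {
        return new SynchronousQueue<Integer>().offer(1);
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(handOff(42));            // prints 42
        System.out.println(offerWithoutConsumer()); // prints false
    }
}
```

The failing offer() is the part that sets it apart from a bounded queue of length 1: nothing is ever stored, items are only passed from hand to hand.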

So you need it only in the special cases that call for exactly those semantics, for example, single-threading a task without queuing further requests.
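
One way to get the "single thread, no queuing" behaviour is a one-thread ThreadPoolExecutor backed by a SynchronousQueue. This is a sketch under my own assumptions (the class name SingleTaskNoQueue and the latches are only there to make the demo deterministic), relying on the default AbortPolicy rejection handler:

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class SingleTaskNoQueue {

    // Returns true if a second task is rejected while the first is still running.
    static boolean secondTaskRejected() throws InterruptedException {
        // One worker thread, no capacity to park extra tasks.
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS,
                new SynchronousQueue<Runnable>());

        final CountDownLatch started = new CountDownLatch(1);
        final CountDownLatch release = new CountDownLatch(1);

        // First task occupies the only worker until we release it.
        pool.execute(new Runnable() {
            public void run() {
                started.countDown();
                try {
                    release.await();
                } catch (InterruptedException ex) {
                    Thread.currentThread().interrupt();
                }
            }
        });
        started.await();

        boolean rejected = false;
        try {
            pool.execute(new Runnable() { public void run() {} });
        } catch (RejectedExecutionException ex) {
            rejected = true; // no idle worker to hand off to, no room for a new thread
        } finally {
            release.countDown();
            pool.shutdown();
        }
        return rejected;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(secondTaskRejected()); // prints true
    }
}
```

With a LinkedBlockingQueue in the same position, the second task would be queued and run later instead of being rejected.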

Another reason to use SynchronousQueue is performance. Its implementation seems to be heavily optimized, so if you need nothing more than a rendezvous point (as in Executors.newCachedThreadPool(), where consumers are created on demand so that queue items don't accumulate), you can get a performance gain by using SynchronousQueue.
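
The cached thread pool case can be sketched as follows. The constructor arguments are the ones the Executors.newCachedThreadPool() Javadoc describes (zero core threads, unbounded maximum, 60 second keep-alive, SynchronousQueue). The class name CachedPoolSketch and the latch-based blocking tasks are my own, added only so the pool size can be observed reliably:

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class CachedPoolSketch {

    // Submits `tasks` blocking tasks and returns {pool size, queue size}
    // observed while all of them are running.
    static int[] poolAndQueueSize(int tasks) throws InterruptedException {
        // Same configuration as documented for Executors.newCachedThreadPool()
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                0, Integer.MAX_VALUE, 60L, TimeUnit.SECONDS,
                new SynchronousQueue<Runnable>());

        final CountDownLatch allStarted = new CountDownLatch(tasks);
        final CountDownLatch release = new CountDownLatch(1);

        for (int i = 0; i < tasks; i++) {
            // No idle worker is waiting on the queue, so each hand-off
            // fails and the pool starts a new thread for the task.
            pool.execute(new Runnable() {
                public void run() {
                    allStarted.countDown();
                    try {
                        release.await();
                    } catch (InterruptedException ex) {
                        Thread.currentThread().interrupt();
                    }
                }
            });
        }
        allStarted.await();

        int[] result = { pool.getPoolSize(), pool.getQueue().size() };
        release.countDown();
        pool.shutdown();
        return result;
    }

    public static void main(String[] args) throws InterruptedException {
        int[] r = poolAndQueueSize(4);
        System.out.println(r[0] + " " + r[1]); // prints 4 0
    }
}
```

Four tasks produce four threads and an empty queue: the queue is only ever used as a hand-off point between the submitter and an idle worker.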

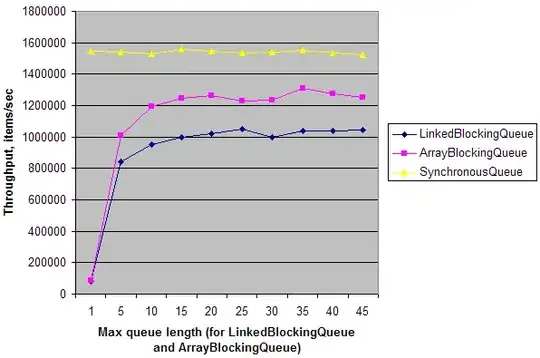

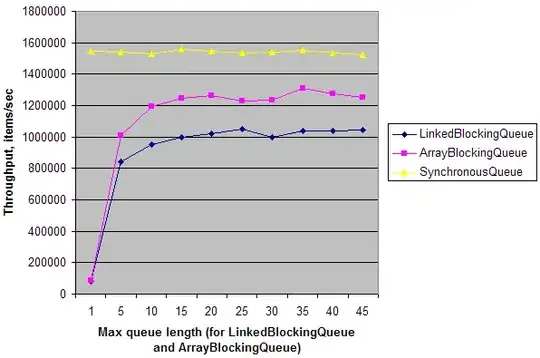

A simple synthetic test shows that in a single-producer, single-consumer scenario on a dual-core machine, the throughput of SynchronousQueue is ~20 times higher than that of LinkedBlockingQueue and ArrayBlockingQueue with queue length = 1. As the queue length increases, their throughput rises and almost reaches that of SynchronousQueue. This suggests that SynchronousQueue has low synchronization overhead on multi-core machines compared to the other queues. But again, it matters only in the specific circumstances where you need a rendezvous point disguised as a Queue.

EDIT:

Here is a test:

    import java.util.concurrent.*;

    public class Test {
        static ExecutorService e = Executors.newFixedThreadPool(2);
        static int N = 1000000;

        public static void main(String[] args) throws Exception {
            // One row per queue length: 1, 5, 10, ..., 45
            for (int i = 0; i < 10; i++) {
                int length = (i == 0) ? 1 : i * 5;
                System.out.print(length + "\t");
                System.out.print(doTest(new LinkedBlockingQueue<Integer>(length), N) + "\t");
                System.out.print(doTest(new ArrayBlockingQueue<Integer>(length), N) + "\t");
                // SynchronousQueue has no capacity, so length does not apply to it
                System.out.print(doTest(new SynchronousQueue<Integer>(), N));
                System.out.println();
            }
            e.shutdown();
        }

        private static long doTest(final BlockingQueue<Integer> q, final int n) throws Exception {
            long t = System.nanoTime();

            // Producer: puts n items into the queue
            e.submit(new Runnable() {
                public void run() {
                    for (int i = 0; i < n; i++)
                        try { q.put(i); } catch (InterruptedException ex) {}
                }
            });

            // Consumer: takes n items; get() blocks until it has finished
            Long r = e.submit(new Callable<Long>() {
                public Long call() {
                    long sum = 0;
                    for (int i = 0; i < n; i++)
                        try { sum += q.take(); } catch (InterruptedException ex) {}
                    return sum;
                }
            }).get();

            t = System.nanoTime() - t;
            return (long)(1000000000.0 * n / t); // Throughput, items/sec
        }
    }

And here is the result on my machine: