I'm trying to identify car contours at night in a video (the video is linked in the original question, where it can also be downloaded). I know that object detection based on R-CNN or YOLO can do this job. However, I want something simpler and faster, because all I want is to identify moving cars in real time (and I don't have a decent GPU). I can do it pretty well in the daytime by using background subtraction to find the contours of cars:

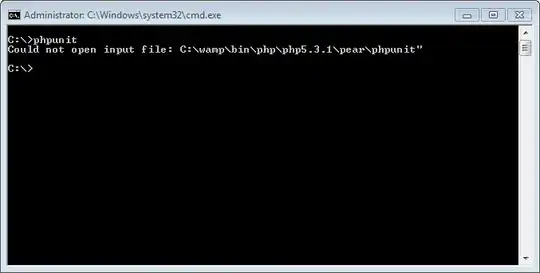

Because the lighting in the daytime is fairly stable, the big contours in the foreground mask are almost all cars, so by setting a threshold on contour size I can easily get the cars' contours. At night, however, things are much more complicated, mainly because of the cars' lights. See the pictures below:

The light that headlights cast on the ground also has high contrast against the background, so it also shows up as contours in the foreground mask. To drop those lights, I'm trying to find the differences between light contours and car contours. So far, I've extracted each contour's area, centroid, perimeter, convexity, and the height and width of its bounding rectangle as features for evaluation. Here is the code:

```python
import random

import cv2
import numpy as np
import pandas as pd

random.seed(100)

# ===============================================
# get video
video = "night_save.avi"
cap = cv2.VideoCapture(video)

# fg/bg subtraction model (MOG2); detectShadows=True marks shadows in grey so they can be removed later
fgbg = cv2.createBackgroundSubtractorMOG2(history=500, detectShadows=True)

# for writing video:
fourcc = cv2.VideoWriter_fourcc(*'XVID')
out = cv2.VideoWriter('night_output.avi', fourcc, 20.0, (704, 576))

# ==============================================
frameID = 0
contours_info = []

# filter kernel for denoising (the same for every frame, so built once)
kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (2, 2))

# main loop:
while True:
    ret, frame = cap.read()
    if not ret:
        break

    # ====================== get and filter foreground mask ================
    original_frame = frame.copy()
    fgmask = fgbg.apply(frame)

    # Fill any small holes
    closing = cv2.morphologyEx(fgmask, cv2.MORPH_CLOSE, kernel)
    # Remove noise
    opening = cv2.morphologyEx(closing, cv2.MORPH_OPEN, kernel)
    # Dilate to merge adjacent blobs
    dilation = cv2.dilate(opening, kernel, iterations=2)
    # threshold (remove grey shadows)
    dilation[dilation < 240] = 0

    # =========================== contours ======================
    # OpenCV 3 returns (image, contours, hierarchy) and OpenCV 4 returns (contours, hierarchy);
    # taking the last two values works with both versions.
    contours, hierarchy = cv2.findContours(dilation, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2:]

    # extract every contour and its information:
    for cID, contour in enumerate(contours):
        M = cv2.moments(contour)
        # neglect small contours:
        if M['m00'] < 400:
            continue
        # centroid
        c_centroid = int(M['m10'] / M['m00']), int(M['m01'] / M['m00'])
        # area
        c_area = M['m00']
        # perimeter (contours returned by findContours are closed)
        c_perimeter = cv2.arcLength(contour, True)
        # convexity
        c_convexity = cv2.isContourConvex(contour)
        # boundingRect
        (x, y, w, h) = cv2.boundingRect(contour)
        # br centroid
        br_centroid = (x + int(w / 2), y + int(h / 2))
        # draw rect for each contour:
        cv2.rectangle(original_frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
        # draw id:
        cv2.putText(original_frame, str(cID), (x + w, y + h), cv2.FONT_HERSHEY_PLAIN, 3, (127, 255, 255), 1)
        # save contour info
        contours_info.append([cID, frameID, c_centroid, br_centroid, c_area, c_perimeter, c_convexity, w, h])

    # ======================= show processed frame img ============================
    cv2.imshow('fg', dilation)
    cv2.imshow('origin', original_frame)

    # save frame image:
    cv2.imwrite('pics/{}.png'.format(frameID), original_frame)
    cv2.imwrite('pics/fb-{}.png'.format(frameID), dilation)

    frameID += 1
    k = cv2.waitKey(30) & 0xff
    if k == 27:  # Esc quits
        break

cap.release()
out.release()
cv2.destroyAllWindows()

# ==========================save contour_info=========================
# named df so the pandas module (pd) is not shadowed
df = pd.DataFrame(contours_info, columns=['ID', 'frame', 'c_centroid', 'br_centroid', 'area',
                                          'perimeter', 'convexity', 'width', 'height'])
df.to_csv('contours.csv')
```

However, I don't see much difference between lights and cars in the features I extracted. Some large lights on the ground can be distinguished by area and perimeter, but small lights are still hard to tell apart. Could somebody give me some pointers on this? Maybe some more useful features, or a different method altogether?

Edit:

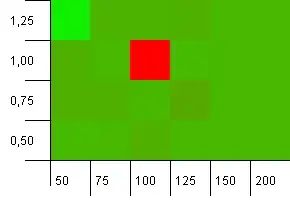

Thanks to @ZdaR's advice, I considered using `cv2.cvtColor` to convert the frame into another color space. The goal is to make the color difference between the headlight itself and its light on the ground more obvious, so the headlights can be detected more precisely. See the difference after switching color space:

Original (the color of the light on the ground is similar to that of the headlight itself).

After switching (one becomes blue and the other red).

So what I'm doing now is:

1. Switch the color space;

2. Filter the switched frame with a color filter (filter out blues and yellows and keep the reds, so that only the car headlights remain);

3. Feed the filtered frame to the background subtraction model to get the foreground mask, then dilate it.

Here is the code for doing that:

```python
# cap and fgbg are created as in the first script
while True:
    ret, frame = cap.read()
    if not ret:
        break

    # ====================== switch and filter ================
    # 70 is a raw conversion code; it appears to correspond to cv2.COLOR_HSV2BGR_FULL
    # (check against your OpenCV version and prefer the named constant)
    col_switch = cv2.cvtColor(frame, 70)

    lower = np.array([0, 0, 0])
    upper = np.array([40, 10, 255])
    mask = cv2.inRange(col_switch, lower, upper)
    res = cv2.bitwise_and(col_switch, col_switch, mask=mask)

    # ======================== get foreground mask =====================
    fgmask = fgbg.apply(res)

    # Dilate to merge adjacent blobs
    d_kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (3, 3))
    dilation = cv2.dilate(fgmask, d_kernel, iterations=2)

    # keep only full-intensity foreground (MOG2 marks shadows with a lower grey value)
    dilation[dilation < 255] = 0
```

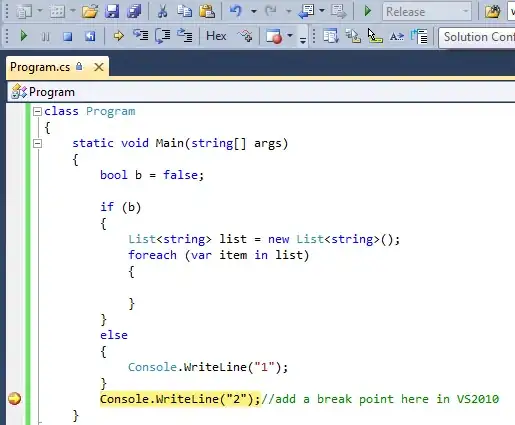

This gives me the following foreground mask with the headlights (and some noise too):

Based on this step, I can detect the cars' headlights fairly accurately and drop the light on the ground:

However, I still don't know how to identify the car based on these headlights.